Multiple Linear Regression | A Quick Guide (Examples)

Published on February 20, 2020 by Rebecca Bevans. Revised on June 22, 2023.

Regression models are used to describe relationships between variables by fitting a line to the observed data. Regression allows you to estimate how a dependent variable changes as the independent variable(s) change.

Multiple linear regression is used to estimate the relationship between two or more independent variables and one dependent variable. You can use multiple linear regression when you want to know:

- How strong the relationship is between two or more independent variables and one dependent variable (e.g. how rainfall, temperature, and amount of fertilizer added affect crop growth).

- The value of the dependent variable at a certain value of the independent variables (e.g. the expected yield of a crop at certain levels of rainfall, temperature, and fertilizer addition).

Multiple linear regression makes all of the same assumptions as simple linear regression:

Homogeneity of variance (homoscedasticity): the size of the error in our prediction doesn’t change significantly across the values of the independent variable.

Independence of observations: the observations in the dataset were collected using statistically valid sampling methods, and there are no hidden relationships among variables.

In multiple linear regression, it is possible that some of the independent variables are actually correlated with one another, so it is important to check these before developing the regression model. If two independent variables are too highly correlated (r² > ~0.6), then only one of them should be used in the regression model.

Normality: the data follows a normal distribution (strictly, this assumption applies to the residuals of the model).

Linearity: the line of best fit through the data points is a straight line, rather than a curve or some sort of grouping factor.

Multiple linear regression formula

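The formula for a multiple linear regression is:

y = β0 + β1X1 + … + βnXn + ε

- y = the predicted value of the dependent variable

- β0 = the y-intercept (the value of y when all independent variables are set to 0)

- β1X1 = the regression coefficient (β1) of the first independent variable (X1)

- … = do the same for however many independent variables you are testing

- βnXn = the regression coefficient of the last independent variable

- ε = model error (how much variation there is in our estimate of y)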

To find the best-fit line for each independent variable, multiple linear regression calculates three things:

- The regression coefficients that lead to the smallest overall model error.

- The F statistic of the overall model.

- The associated p value (how likely it is that an F statistic at least this large would have occurred by chance if the null hypothesis of no relationship between the independent and dependent variables was true).

It then calculates the t statistic and p value for each regression coefficient in the model.

Multiple linear regression in R

While it is possible to do multiple linear regression by hand, it is much more commonly done via statistical software. We are going to use R for our examples because it is free, powerful, and widely available. Download the sample dataset to try it yourself.

Dataset for multiple linear regression (.csv)

Load the heart.data dataset into your R environment and run the following code:

This code takes the data set heart.data and calculates the effect that the independent variables biking and smoking have on the dependent variable heart disease using R's linear model function, lm().

Learn more by following the full step-by-step guide to linear regression in R.

To view the results of the model, you can use the summary() function:

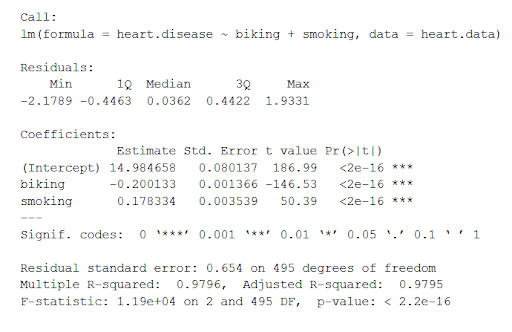

This function takes the most important parameters from the linear model and puts them into a table that looks like this:

The summary first prints out the formula (‘Call’), then the model residuals (‘Residuals’). If the residuals are roughly centered around zero and with similar spread on either side, as these are (median 0.03, and min and max around -2 and 2), then the model probably fits the assumption of homoscedasticity.

Next are the regression coefficients of the model (‘Coefficients’). Row 1 of the coefficients table is labeled (Intercept) – this is the y-intercept of the regression equation. It’s helpful to know the estimated intercept in order to plug it into the regression equation and predict values of the dependent variable.

The most important things to note in this output table are the next two rows – the estimates for the independent variables.

The Estimate column is the estimated effect, also called the regression coefficient. The estimates in the table tell us that for every one percent increase in biking to work there is an associated 0.2 percent decrease in heart disease, and that for every one percent increase in smoking there is an associated 0.17 percent increase in heart disease.

The Std. Error column displays the standard error of the estimate. This number shows how much variation there is around the estimates of the regression coefficient.

The t value column displays the test statistic. Unless otherwise specified, the test statistic used in linear regression is the t value from a two-sided t test. The larger the absolute value of the test statistic, the less likely it is that the results occurred by chance.

The Pr(>|t|) column shows the p value. This shows how likely it is that a t value at least as extreme as the calculated one would have occurred by chance if the null hypothesis of no effect of the parameter were true.

Because these values are so low (p < 0.001 in both cases), we can reject the null hypothesis and conclude that biking to work and smoking both likely influence rates of heart disease.

When reporting your results, include the estimated effect (i.e. the regression coefficient), the standard error of the estimate, and the p value. You should also interpret your numbers to make it clear to your readers what the regression coefficient means.

Visualizing the results in a graph

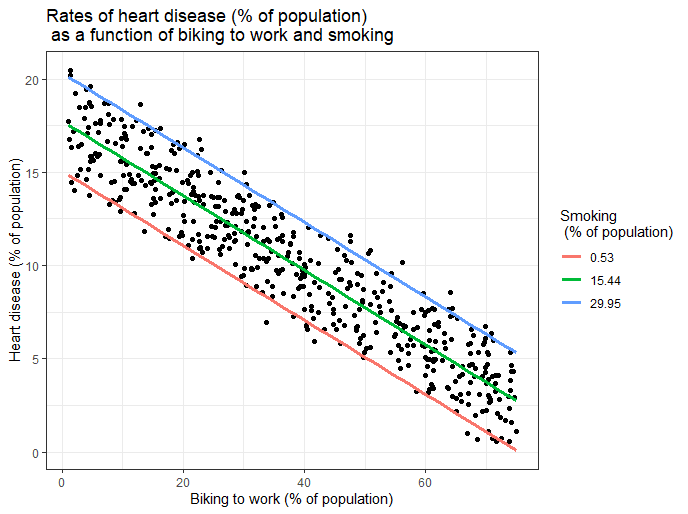

It can also be helpful to include a graph with your results. Multiple linear regression is somewhat more complicated than simple linear regression, because there are more parameters than will fit on a two-dimensional plot.

However, there are ways to display your results that include the effects of multiple independent variables on the dependent variable, even though only one independent variable can actually be plotted on the x-axis.

Here, we have calculated the predicted values of the dependent variable (heart disease) across the full range of observed values for the percentage of people biking to work.

To include the effect of smoking on the dependent variable, we calculated these predicted values while holding smoking constant at the minimum, mean, and maximum observed rates of smoking.

A regression model is a statistical model that estimates the relationship between one dependent variable and one or more independent variables using a line (or a plane in the case of two or more independent variables).

A regression model can be used when the dependent variable is quantitative, except in the case of logistic regression, where the dependent variable is binary.

Multiple linear regression is a regression model that estimates the relationship between a quantitative dependent variable and two or more independent variables using a straight line.

Linear regression most often uses mean-square error (MSE) to calculate the error of the model. MSE is calculated by:

- measuring the distance of the observed y-values from the predicted y-values at each value of x;

- squaring each of these distances;

- calculating the mean of the squared distances.

Linear regression fits a line to the data by finding the regression coefficients that result in the smallest MSE.
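If you want to see the three MSE steps in action, here is a minimal sketch in plain Python (not R, so it runs without extra packages). The function name `mean_squared_error` and the numbers are made up for illustration; they do not come from the heart disease dataset.

```python
def mean_squared_error(observed, predicted):
    """Mean of the squared distances between observed and predicted y-values."""
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must have the same length")
    # Step 1: distance of each observed y from its predicted y.
    distances = [y - y_hat for y, y_hat in zip(observed, predicted)]
    # Step 2: square each distance.
    squared = [d ** 2 for d in distances]
    # Step 3: take the mean of the squared distances.
    return sum(squared) / len(squared)


# Hypothetical values, for illustration only.
observed = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 7.0, 8.0]
mse = mean_squared_error(observed, predicted)
print(mse)
```

A regression routine searches for the coefficients whose predictions make this number as small as possible.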

Cite this Scribbr article

Bevans, R. (2023, June 22). Multiple Linear Regression | A Quick Guide (Examples). Scribbr. Retrieved September 5, 2024, from https://www.scribbr.com/statistics/multiple-linear-regression/

Multiple linear regression

Multiple linear regression #.

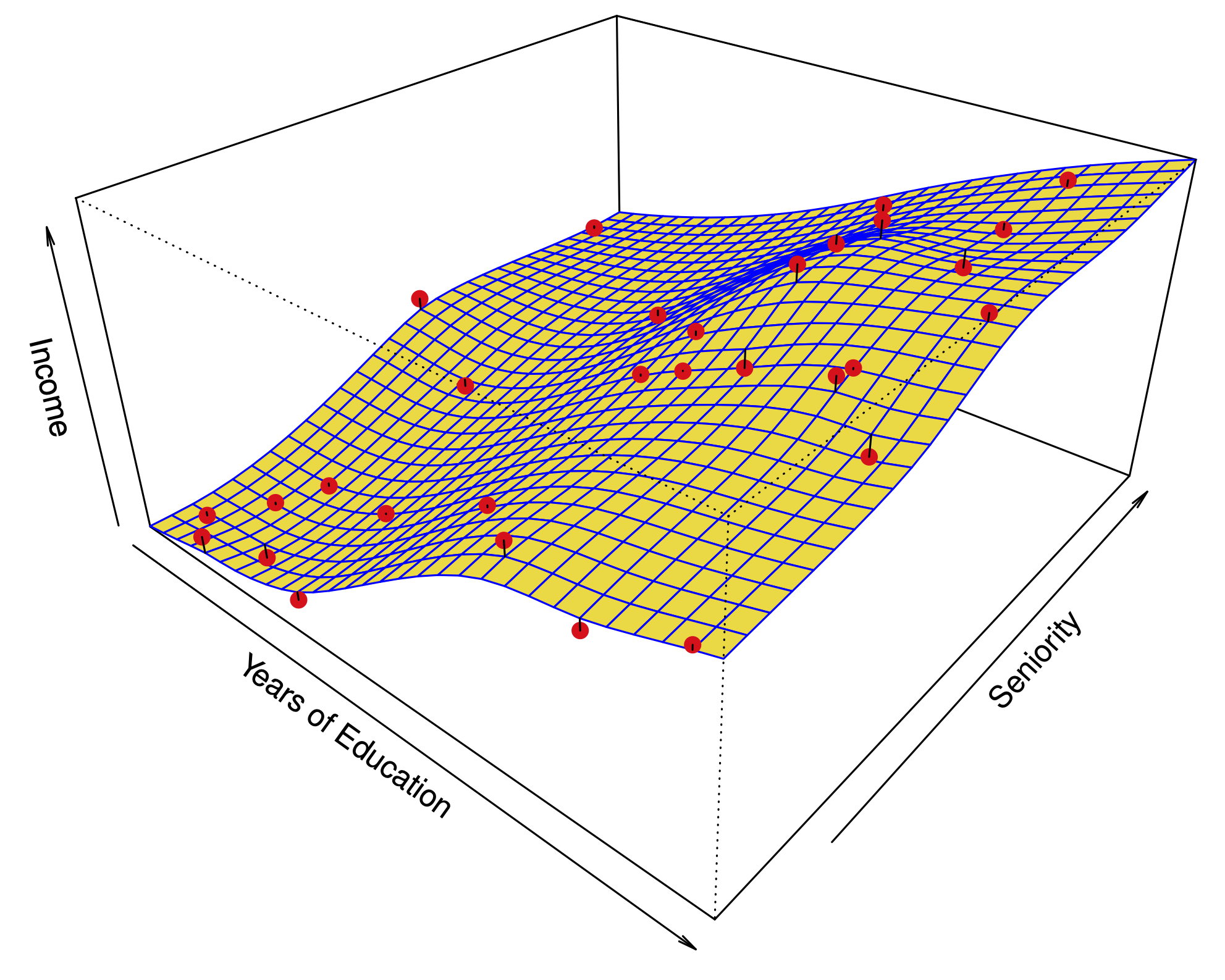

Fig. 11 Multiple linear regression #

Errors: \(\varepsilon_i \sim N(0,\sigma^2)\quad \text{i.i.d.}\)

Fit: the estimates \(\hat\beta_0,\dots,\hat\beta_p\) are chosen to minimize the residual sum of squares (RSS): \[\text{RSS} = \sum_{i=1}^n (y_i - \hat\beta_0 - \hat\beta_1 x_{i,1} - \dots - \hat\beta_p x_{i,p})^2.\]

Matrix notation: with \(\beta=(\beta_0,\dots,\beta_p)\) and \({X}\) our usual data matrix with an extra column of ones on the left to account for the intercept, we can write \[Y = X\beta + \varepsilon.\]

Multiple linear regression answers several questions

Is at least one of the variables \(X_i\) useful for predicting the outcome \(Y\) ?

Which subset of the predictors is most important?

How good is a linear model for these data?

Given a set of predictor values, what is a likely value for \(Y\) , and how accurate is this prediction?

The estimates \(\hat\beta\)

Our goal again is to minimize the RSS: \[ \begin{aligned} \text{RSS}(\beta) &= \sum_{i=1}^n (y_i -\hat y_i(\beta))^2 \\ & = \sum_{i=1}^n (y_i - \beta_0- \beta_1 x_{i,1}-\dots-\beta_p x_{i,p})^2 \\ &= \|Y-X\beta\|^2_2 \end{aligned} \]

One can show that this is minimized by the vector \(\hat\beta\): \[\hat\beta = ({X}^T{X})^{-1}{X}^T{Y}.\]

We usually write \(RSS=RSS(\hat{\beta})\) for the minimized RSS.
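A minimal sketch of the closed form \(\hat\beta = (X^TX)^{-1}X^TY\), in plain Python with no libraries. The helper names (`solve_linear_system`, `ols`, `rss`) and the toy data are assumptions for illustration; the system \(X^TX\,\hat\beta = X^TY\) is solved by elimination rather than by forming the inverse.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b], copied so the inputs are not modified.
    m = [list(map(float, a[i])) + [float(b[i])] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular (predictors may be collinear)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(rows, y):
    """Least-squares coefficients (intercept first) via the normal equations."""
    x = [[1.0] + list(r) for r in rows]  # extra column of ones on the left
    k = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(x, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def rss(rows, y, beta):
    """Residual sum of squares for coefficients beta (intercept first)."""
    fitted = [beta[0] + sum(b * v for b, v in zip(beta[1:], r)) for r in rows]
    return sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))


# Hypothetical noise-free data generated from y = 1 + 2*x1 - 3*x2.
rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1), (1, 3)]
y = [1 + 2 * x1 - 3 * x2 for x1, x2 in rows]
beta_hat = ols(rows, y)
print(beta_hat, rss(rows, y, beta_hat))
```

Because the toy response has no noise, the recovered coefficients match the generating ones and the minimized RSS is numerically zero.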

Which variables are important?

Consider the hypothesis: \(H_0:\) the last \(q\) predictors have no relation with \(Y\) .

Based on our model: \(H_0:\beta_{p-q+1}=\beta_{p-q+2}=\dots=\beta_p=0.\)

Let \(\text{RSS}_0\) be the minimized residual sum of squares for the model which excludes these variables.

The \(F\)-statistic is defined by: \[F = \frac{(\text{RSS}_0-\text{RSS})/q}{\text{RSS}/(n-p-1)}.\]

Under the null hypothesis (of our model), this has an \(F\) -distribution.

Example: If \(q=p\), we test whether any of the variables is important. \[\text{RSS}_0 = \sum_{i=1}^n(y_i-\overline y)^2 \]

| Res.Df | RSS | Df | Sum of Sq | F | Pr(>F) |
|---|---|---|---|---|---|
| 494 | 11336.29 | NA | NA | NA | NA |
| 492 | 11078.78 | 2 | 257.5076 | 5.717853 | 0.003509036 |
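The \(F\) column of the table can be reproduced from its RSS and degrees-of-freedom columns. A small sketch, assuming the first row is the reduced model and the second the full model (so \(q=2\) and \(n-p-1=492\)); the function name `f_statistic` is hypothetical.

```python
def f_statistic(rss_reduced, rss_full, q, residual_df):
    """F = ((RSS_0 - RSS) / q) / (RSS / (n - p - 1))."""
    return ((rss_reduced - rss_full) / q) / (rss_full / residual_df)


# Values read off the table: RSS_0 = 11336.29, RSS = 11078.78, q = 2, n - p - 1 = 492.
f_stat = f_statistic(11336.29, 11078.78, q=2, residual_df=492)
print(round(f_stat, 2))
```

The result agrees with the tabulated 5.717853 up to the rounding of the RSS values.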

The \(t\)-statistic associated to the \(i\)th predictor is, up to sign, the square root of the \(F\)-statistic for the null hypothesis which sets only \(\beta_i=0\).

A low \(p\)-value indicates that the predictor is important.

Warning: If there are many predictors, some of the \(t\)-tests will have low p-values purely by chance, even when the model has no explanatory power.
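To get a feel for the size of this effect, consider the simplified case of \(m\) independent tests at level \(\alpha\) when every null hypothesis is true. The \(t\)-tests within one regression are generally not independent, so this is only a rough guide, not a statement about any particular model.

```python
def prob_at_least_one_false_positive(m, alpha=0.05):
    """P(at least one p-value < alpha) for m independent tests under the null."""
    return 1.0 - (1.0 - alpha) ** m


for m in (1, 5, 20, 100):
    print(m, round(prob_at_least_one_false_positive(m), 3))

p_20 = prob_at_least_one_false_positive(20)
```

With 20 predictors, the chance of at least one "significant" \(t\)-test is already well above one half under this simplification, which is why the overall \(F\)-test is checked first.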

How many variables are important?

When we select a subset of the predictors, we have \(2^p\) choices.

A way to simplify the choice is to define a range of models with an increasing number of variables, then select the best.

Forward selection: Starting from a null model, include variables one at a time, minimizing the RSS at each step.

Backward selection: Starting from the full model, eliminate variables one at a time, choosing the one with the largest p-value at each step.

Mixed selection: Starting from some model, include variables one at a time, minimizing the RSS at each step. If the p-value for some variable goes beyond a threshold, eliminate that variable.

Choosing one model in the range produced is a form of tuning. This tuning can invalidate some of our methods like hypothesis tests and confidence intervals…

How good are the predictions?

The function predict in R outputs predictions and confidence intervals from a linear model:

| fit | lwr | upr |
|---|---|---|
| 9.409426 | 8.722696 | 10.09616 |
| 14.163090 | 13.708423 | 14.61776 |
| 18.916754 | 18.206189 | 19.62732 |

Prediction intervals reflect the uncertainty in \(\hat\beta\) and the irreducible error \(\varepsilon\) as well, so they are wider than the confidence intervals above:

| fit | lwr | upr |
|---|---|---|
| 9.409426 | 2.946709 | 15.87214 |
| 14.163090 | 7.720898 | 20.60528 |
| 18.916754 | 12.451461 | 25.38205 |

These functions rely on our linear regression model \[ Y = X\beta + \epsilon. \]

Dealing with categorical or qualitative predictors

For each qualitative predictor, e.g. Region:

Choose a baseline category, e.g. East

For every other category, define a new predictor:

\(X_\text{South}\) is 1 if the person is from the South region and 0 otherwise

\(X_\text{West}\) is 1 if the person is from the West region and 0 otherwise.

The model will be: \[Y = \beta_0 + \beta_1 X_1 +\dots +\beta_7 X_7 + \color{Red}{\beta_\text{South}} X_\text{South} + \beta_\text{West} X_\text{West} +\varepsilon.\]

The parameter \(\color{Red}{\beta_\text{South}}\) is the relative effect on Balance (our \(Y\) ) for being from the South compared to the baseline category (East).

The model fit and predictions are independent of the choice of the baseline category.

However, hypothesis tests derived from these variables are affected by the choice.

Solution: To check whether region is important, use an \(F\) -test for the hypothesis \(\beta_\text{South}=\beta_\text{West}=0\) by dropping Region from the model. This does not depend on the coding.

Note that there are other ways to encode qualitative predictors that produce the same fit \(\hat f\), but the coefficients have different interpretations.
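The baseline coding described above can be sketched in a few lines. The function name `dummy_code` and the five sample regions are hypothetical; the point is only that the baseline category (East) gets no column of its own.

```python
def dummy_code(values, baseline):
    """Return one 0/1 indicator column per non-baseline category."""
    levels = sorted(set(values) - {baseline})
    return {
        f"X_{level}": [1 if v == level else 0 for v in values]
        for level in levels
    }


# Hypothetical sample of the Region predictor.
regions = ["East", "South", "West", "South", "East"]
dummies = dummy_code(regions, baseline="East")
print(dummies)
```

A person from the East has zeros in both columns, so their expected response is carried by the intercept and the other predictors alone.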

So far, we have:

Defined Multiple Linear Regression

Discussed how to test the importance of variables.

Described one approach to choose a subset of variables.

Explained how to code qualitative variables.

Now, how do we evaluate model fit? Is the linear model any good? What can go wrong?

How good is the fit?

To assess the fit, we focus on the residuals \[ e = Y - \hat{Y} \]

The RSS always decreases as we add more variables.

The residual standard error (RSE) corrects this: \[\text{RSE} = \sqrt{\frac{1}{n-p-1}\text{RSS}}.\]
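A short numerical sketch of why the RSE is a fairer yardstick than the RSS. The RSS values below are invented: adding a fourth, nearly useless predictor lowers the RSS slightly, yet the RSE goes up because a degree of freedom is spent.

```python
import math


def residual_standard_error(rss, n, p):
    """RSE = sqrt(RSS / (n - p - 1))."""
    return math.sqrt(rss / (n - p - 1))


# Hypothetical fits on n = 20 samples.
rse_small = residual_standard_error(rss=100.0, n=20, p=2)  # 2 predictors
rse_big = residual_standard_error(rss=99.5, n=20, p=3)     # 3 predictors, RSS barely lower
print(round(rse_small, 4), round(rse_big, 4))
```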

Fig. 12 Residuals

Visualizing the residuals can reveal phenomena that are not accounted for by the model, e.g. synergies or interactions:

Potential issues in linear regression

Interactions between predictors

Non-linear relationships

Correlation of error terms

Non-constant variance of error (heteroskedasticity)

High leverage points

Collinearity

Interactions between predictors

Linear regression has an additive assumption: \[\mathtt{sales} = \beta_0 + \beta_1\times\mathtt{tv}+ \beta_2\times\mathtt{radio}+\varepsilon\]

i.e. an increase of 100 USD in TV ads causes a fixed increase of \(100 \beta_1\) USD in sales on average, regardless of how much you spend on radio ads.

We saw that in Fig 3.5 above. If we visualize the fit and the observed points, we see they are not evenly scattered around the plane. This could be caused by an interaction.

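One way to deal with this is to include multiplicative variables in the model: \[\mathtt{sales} = \beta_0 + \beta_1\times\mathtt{tv}+ \beta_2\times\mathtt{radio}+\beta_3\times(\mathtt{tv}\cdot\mathtt{radio})+\varepsilon\]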

The interaction variable tv \(\cdot\) radio is high when both tv and radio are high.
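With an interaction term \(\beta_3\,(\mathtt{tv}\cdot\mathtt{radio})\) in the model, the effect of extra TV spending is no longer fixed: it equals \(\beta_1 + \beta_3\,\mathtt{radio}\) per unit. A sketch with made-up coefficients (not estimates from any dataset):

```python
def predict_sales(tv, radio, b0, b1, b2, b3):
    """Mean sales under a model with a tv * radio interaction term."""
    return b0 + b1 * tv + b2 * radio + b3 * tv * radio


# Hypothetical coefficients, for illustration only.
coef = dict(b0=6.0, b1=0.02, b2=0.03, b3=0.001)

# Increase in predicted sales when tv goes from 100 to 200, at two radio budgets.
gain_no_radio = predict_sales(200, 0, **coef) - predict_sales(100, 0, **coef)
gain_with_radio = predict_sales(200, 50, **coef) - predict_sales(100, 50, **coef)
print(gain_no_radio, gain_with_radio)
```

The same 100-unit increase in TV spending yields a larger gain when the radio budget is high, which is the synergy the additive model cannot express.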

R makes it easy to include interaction variables in the model:

Non-linearities

Fig. 13 A nonlinear fit might be better here.

Example: Auto dataset.

A scatterplot between a predictor and the response may reveal a non-linear relationship.

Solution: include polynomial terms in the model.

Could use other functions besides polynomials…

Fig. 14 Residuals for Auto data

In 2 or 3 dimensions, this is easy to visualize. What do we do when we have too many predictors?

Correlation of error terms

We assumed that the errors for each sample are independent: \[\varepsilon_i \sim N(0,\sigma^2)\quad \text{i.i.d.}\]

What if this breaks down?

The main effect is that this invalidates any assertions about Standard Errors, confidence intervals, and hypothesis tests…

Example: Suppose that by accident, we duplicate the data (we use each sample twice). Then, the standard errors would be artificially smaller by a factor of about \(\sqrt{2}\).
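The duplication effect can be checked numerically for a one-predictor regression, using the usual formula \(\text{se}(\hat\beta_1)=\hat\sigma/\sqrt{\sum_i (x_i-\bar x)^2}\) with \(\hat\sigma^2=\text{RSS}/(n-2)\). The data below are synthetic and the helper name is hypothetical. For finite \(n\) the exact ratio is \(\sqrt{2(n-1)/(n-2)}\), which tends to \(\sqrt 2\) as \(n\) grows.

```python
import math


def slope_standard_error(x, y):
    """Standard error of the least-squares slope in simple linear regression."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sigma_hat = math.sqrt(rss / (n - 2))
    return sigma_hat / math.sqrt(sxx)


# Synthetic data: a line plus a deterministic pseudo-noise pattern.
x = list(range(1, 21))
noise = [(1 if i % 2 == 0 else -1) * (0.3 + 0.05 * (i % 5)) for i in range(20)]
y = [2.0 + 0.5 * xi + e for xi, e in zip(x, noise)]

se_once = slope_standard_error(x, y)
se_twice = slope_standard_error(x + x, y + y)  # every sample used twice
ratio = se_once / se_twice
print(round(ratio, 4), round(math.sqrt(2), 4))
```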

When could this happen in real life?

Time series: Each sample corresponds to a different point in time. The errors for samples that are close in time are correlated.

Spatial data: Each sample corresponds to a different location in space.

Grouped data: Imagine a study on predicting height from weight at birth. If some of the subjects in the study are in the same family, their shared environment could make them deviate from \(f(x)\) in similar ways.

Correlated errors

Simulations of time series with increasing correlations between \(\varepsilon_i\)

Non-constant variance of error (heteroskedasticity)

The variance of the error depends on some characteristics of the input features.

To diagnose this, we can plot residuals vs. fitted values:

If the trend in variance is relatively simple, we can transform the response using a logarithm, for example.

Outliers from a model are points with very high errors.

While they may not affect the fit, they might affect our assessment of model quality.

Possible solutions:

If we believe an outlier is due to an error in data collection, we can remove it.

An outlier might be evidence of a missing predictor, or the need to specify a more complex model.

High leverage points

Some samples with extreme inputs have an outsized effect on \(\hat \beta\).

This can be measured with the leverage statistic or self influence: \[h_{ii} = \frac{\partial \hat y_i}{\partial y_i} = \left(X(X^TX)^{-1}X^T\right)_{ii}.\]
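For a single predictor plus intercept, the diagonal of the hat matrix \(X(X^TX)^{-1}X^T\) reduces to \(h_{ii} = \frac1n + \frac{(x_i-\bar x)^2}{\sum_j (x_j-\bar x)^2}\). A sketch on invented inputs with one extreme point; the function name is hypothetical.

```python
def leverages_simple_regression(x):
    """Leverage h_ii of each sample in a one-predictor regression with intercept."""
    n = len(x)
    x_bar = sum(x) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return [1.0 / n + (xi - x_bar) ** 2 / sxx for xi in x]


# Hypothetical inputs: the last sample is far from the others.
x = [1.0, 2.0, 3.0, 4.0, 20.0]
h = leverages_simple_regression(x)
print([round(v, 3) for v in h])
```

The leverages always sum to the number of fitted coefficients (here 2), so one sample with leverage close to 1 leaves little for the rest: the fit is forced to pass near that point.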

Studentized residuals

The residual \(\hat \epsilon_i = y_i - \hat y_i\) is an estimate for the noise \(\epsilon_i\).

The standard error of \(\hat \epsilon_i\) is \(\sigma \sqrt{1-h_{ii}}\).

A studentized residual is \(\hat \epsilon_i\) divided by its standard error (with an appropriate estimate of \(\sigma\)).

When the model is correct, it follows a Student-t distribution with \(n-p-2\) degrees of freedom.

Collinearity

Two predictors are collinear if one explains the other well:

Problem: The coefficients become unidentifiable.

Consider the extreme case of using two identical predictors limit: \[ \begin{aligned} \mathtt{balance} &= \beta_0 + \beta_1\times\mathtt{limit} + \beta_2\times\mathtt{limit} + \epsilon \\ & = \beta_0 + (\beta_1+100)\times\mathtt{limit} + (\beta_2-100)\times\mathtt{limit} + \epsilon \end{aligned} \]

For every \((\beta_0,\beta_1,\beta_2)\) the fit at \((\beta_0,\beta_1,\beta_2)\) is just as good as at \((\beta_0,\beta_1+100,\beta_2-100)\) .

If 2 variables are collinear, we can easily diagnose this using their correlation.

A group of \(q\) variables is multicollinear if these variables “contain less information” than \(q\) independent variables.

Pairwise correlations may not reveal multicollinear variables.

The Variance Inflation Factor (VIF) measures how predictable a variable is given the other variables, a proxy for how necessary that variable is: \[\text{VIF}(\hat\beta_j) = \frac{1}{1-R^2_{X_j|X_{-j}}}.\]

Above, \(R^2_{X_j|X_{-j}}\) is the \(R^2\) statistic for Multiple Linear regression of the predictor \(X_j\) onto the remaining predictors.
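With only two predictors, \(R^2_{X_j|X_{-j}}\) is the squared Pearson correlation between them, so \(\text{VIF} = 1/(1-r^2)\) can be computed directly. A sketch on invented values (with more predictors, each \(R^2\) would come from a full regression of \(X_j\) on the others):

```python
import math


def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    a_bar, b_bar = sum(a) / n, sum(b) / n
    sab = sum((x - a_bar) * (y - b_bar) for x, y in zip(a, b))
    saa = sum((x - a_bar) ** 2 for x in a)
    sbb = sum((y - b_bar) ** 2 for y in b)
    return sab / math.sqrt(saa * sbb)


def vif_two_predictors(x1, x2):
    """VIF of either predictor when the model has exactly two predictors."""
    r_squared = pearson_r(x1, x2) ** 2
    return 1.0 / (1.0 - r_squared)


# Hypothetical predictor values.
x1 = [1, 2, 3, 4, 5]
x2 = [2, 4, 5, 4, 5]
vif = vif_two_predictors(x1, x2)
print(round(vif, 3))
```

Here \(r^2 = 0.6\), so the variance of each coefficient estimate is inflated by a factor of 2.5 relative to uncorrelated predictors.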

Hippokratia, v.14(Suppl 1); 2010 Dec

Introduction to Multivariate Regression Analysis

Statistics are used in medicine for data description and inference. Inferential statistics are used to answer questions about the data, to test hypotheses (formulating the alternative or null hypotheses), to generate a measure of effect (typically a ratio of rates or risks), to describe associations (correlations) or to model relationships (regression) within the data, and for many other functions. Point estimates are usually the measures of association or of the magnitude of effects. Confounding, measurement errors, selection bias and random errors make it unlikely that the point estimates equal the true values. In the estimation process, random error is unavoidable. One way to account for it is to compute p-values for a range of possible parameter values (including the null). The range of values for which the p-value exceeds a specified alpha level (typically 0.05) is called the confidence interval. An interval estimation procedure will, in 95% of repetitions (identical studies in all respects except for random error), produce limits that contain the true parameters. It is argued that the question of whether the pair of limits produced from a study contains the true parameter cannot be answered by the ordinary (frequentist) theory of confidence intervals [1]. Frequentist approaches derive estimates by using probabilities of data (either p-values or likelihoods) as measures of compatibility between data and hypotheses, or as measures of the relative support that data provide for hypotheses. Another approach, the Bayesian, uses new data to update existing (prior) estimates. Proper use of any approach requires careful interpretation of statistics [1, 2].

The goal in any data analysis is to extract accurate estimates from raw information. One of the most important and common questions is whether there is a statistical relationship between a response variable (Y) and explanatory variables (Xi). One option for answering this question is to employ regression analysis to model the relationship. There are various types of regression analysis. The type of regression model depends on the distribution of Y: if it is continuous and approximately normal we use a linear regression model; if dichotomous we use logistic regression; if Poisson or multinomial we use log-linear analysis; if time-to-event data in the presence of censored cases (survival-type) we use Cox regression. By modeling we try to predict the outcome (Y) based on values of a set of predictor variables (Xi). These methods allow us to assess the impact of multiple variables (covariates and factors) in the same model [3, 4].

In this article we focus on linear regression. Linear regression is the procedure that estimates the coefficients of the linear equation, involving one or more independent variables, that best predict the value of the dependent variable, which should be quantitative. Logistic regression is similar to linear regression but is suited to models where the dependent variable is dichotomous. Logistic regression coefficients can be used to estimate odds ratios for each of the independent variables in the model.

Linear equation

In most statistical packages, a curve estimation procedure produces curve estimation regression statistics and related plots for many different models (linear, logarithmic, inverse, quadratic, cubic, power, S-curve, logistic, exponential etc.). It is essential to plot the data in order to determine which model to use for each dependent variable. If the variables appear to be related linearly, a simple linear regression model can be used; if they are not linearly related, data transformation might help. If the transformation does not help, then a more complicated model may be needed. It is strongly advised to view a scatterplot of your data early, in order to determine how the independent and dependent variables are related (linearly, exponentially etc.); if the plot resembles a mathematical function you recognize, fit the data to that type of model. For example, if the data resemble an exponential function, an exponential model is to be used. Alternatively, if it is not obvious which model best fits the data, an option is to try several models and select among them [4–6].

The most appropriate model could be a straight line, a higher degree polynomial, a logarithmic or an exponential function. The strategies to find an appropriate model include the forward method, in which we start by assuming a very simple model, i.e. a straight line (Y = a + bX or Y = b0 + b1X). Then we find the best estimate of the assumed model. If this model does not fit the data satisfactorily, then we assume a more complicated model, e.g. a 2nd degree polynomial (Y = a + bX + cX²), and so on. In a backward method we assume a complicated model, e.g. a high degree polynomial, we fit the model and we try to simplify it. We might also use a model suggested by theory or experience. Often a straight line relationship fits the data satisfactorily, and this is the case of simple linear regression. The simplest case of linear regression analysis is that with one predictor variable [6, 7].

Linear regression equation

The purpose of regression is to predict Y on the basis of X or to describe how Y depends on X (regression line or curve).

The Xi (X1, X2, …, Xk) is defined as the "predictor", "explanatory" or "independent" variable, while Y is defined as the "dependent", "response" or "outcome" variable.

Assuming a linear relation in the population, the mean of Y for given X equals α+βX, i.e. the "population regression line".

If Y = a + bX is the estimated line, then Ŷi = a + bXi is called the fitted (or predicted) value, and Yi − Ŷi is called the residual.

The estimated regression line is determined in such a way that the sum of squared residuals, Σ(residuals)², is minimal, i.e. the standard deviation of the residuals is minimized (residuals are on average zero). This is called the "least squares" method. In the equation Y = a + bX:

b is the slope (the average increase of outcome per unit increase of predictor)

a is the intercept (often has no direct practical meaning)

A more detailed (higher precision of the estimates a and b) regression equation line can also be written as

Further inference about regression line could be made by the estimation of confidence interval (95%CI for the slope b). The calculation is based on the standard error of b:

so, 95% CI for β is b ± t0.975*se(b) [t-distr. with df = n-2]

and the test for H0: β=0, is t = b / se(b) [p-value derived from t-distr. with df = n-2].
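As an illustration of the interval and test above, the sketch below fits a simple regression to invented data and computes se(b), the 95% CI and the t statistic. It assumes the usual textbook formula se(b) = σres / √Σ(Xi − X̄)², with σres² = Σ(Yi − Ŷi)² / (n − 2); the t quantile 2.228 for df = 10 is taken from a t table and hard-coded, and all names are hypothetical.

```python
import math


def slope_inference(x, y, t_quantile):
    """Least-squares slope with its standard error, 95% CI and t statistic."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    a = y_bar - b * x_bar
    rss = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    sigma_res = math.sqrt(rss / (n - 2))  # df = n - 2
    se_b = sigma_res / math.sqrt(sxx)
    ci = (b - t_quantile * se_b, b + t_quantile * se_b)
    return {"a": a, "b": b, "se_b": se_b, "ci": ci, "t": b / se_b}


# Invented data, n = 12, so df = n - 2 = 10.
x = list(range(1, 13))
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1, 18.0, 19.9, 22.2, 23.8]
T_975_DF10 = 2.228  # t0.975 for df = 10, from a t table
result = slope_inference(x, y, T_975_DF10)
print(result)
```

Since the t statistic here far exceeds the tabulated quantile, H0: β=0 would be rejected at the 5% level for these invented data.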

If the p value lies above 0.05 then the null hypothesis is not rejected, which means that a straight line model in X does not help in predicting Y. Either the straight line model holds with slope = 0, or there is a curved relation with zero linear component. On the other hand, if the null hypothesis is rejected, either the straight line model holds or, in a curved relationship, the straight line model helps but is not the best model. Of course there is the possibility of a type II or type I error in the first and second case, respectively. The standard deviation of the residuals (σres) is estimated by σres = √[Σ(Yi − Ŷi)² / (n − 2)].

The standard deviation of the residuals (σres) characterizes the variability around the regression line, i.e. the smaller the σres, the better the fit. It has a number of degrees of freedom. This is the number to divide by in order to have an unbiased estimate of the variance. In this case df = n − 2, because two parameters, α and β, are estimated [7].

Multiple linear regression analysis

As an example, in a sample of 50 individuals we measured: Y = toluene personal exposure concentration (a widespread aromatic hydrocarbon); X1 = hours spent outdoors; X2 = wind speed (m/sec); X3 = toluene home levels. Y is the continuous response variable ("dependent") while X1, X2, …, Xp are the predictor variables ("independent") [7]. Usually the questions of interest are how to predict Y on the basis of the X's and what is the "independent" influence of wind speed, i.e. corrected for home levels and other related variables. These questions can in principle be answered by multiple linear regression analysis.

In the multiple linear regression model, Y has a normal distribution with mean β0 + β1X1 + … + βpXp and standard deviation σ.

The model parameters β0, β1, …, βp and σ must be estimated from data.

β0 = intercept

β1, …, βp = regression coefficients

σ = σres = residual standard deviation

Interpretation of regression coefficients

In the equation Y = β0 + β1X1 + … + βpXp

βi equals the mean increase in Y per unit increase in Xi, while the other X's are kept fixed. In other words, βi is the influence of Xi corrected (adjusted) for the other X's. The estimation method follows the least squares criterion.

If b0, b1, …, bp are the estimates of β0, β1, …, βp, then the "fitted" value of Y is Yfit = b0 + b1X1 + … + bpXp.

In our example, the statistical packages give the following estimates or regression coefficients (bi) and standard errors (se) for toluene personal exposure levels.

Then the regression equation for toluene personal exposure levels would be:

The estimated coefficient for time spent outdoors (0.582) means that the estimated mean increase in toluene personal levels is 0.582 µg/m³ if time spent outdoors increases by 1 hour, while home levels and wind speed remain constant. More precisely, one could say that individuals differing by one hour in the time spent outdoors, but having the same values on the other predictors, will have a mean difference in toluene exposure levels equal to 0.582 µg/m³ [8].

Be aware that this interpretation does not imply any causal relation.

Confidence interval (CI) and test for regression coefficients

95% CI for βi is given by bi ± t0.975*se(bi) for df = n − 1 − p (df: degrees of freedom)

In our example that means that the 95% CI for the coefficient of time spent outdoors is −0.19 to 0.49.

As an example, if we test H0: βhumidity = 0 and find P = 0.40, which is not significant, we assume that the association between toluene personal exposure and humidity could be explained by the correlation between humidity and wind speed [8].

In order to estimate the standard deviation of the residual (Y − Yfit), i.e. the estimated standard deviation of a given set of variable values in a population sample, we have to estimate σ: σres = √[Σ(Y − Yfit)² / (n − p − 1)].

The number of degrees of freedom is df = n − (p + 1), since p + 1 parameters are estimated.

The ANOVA table gives the total variability in Y, which can be partitioned into a part due to regression and a part due to residual variation: SStot = SSreg + SSres

With degrees of freedom (n − 1) = p + (n − p − 1)

In statistical packages the ANOVA table in which the partition is given usually has the following format [6]:

SS: "sums of squares"; df: Degrees of freedom; MS: "mean squares" (SS/dfs); F: F statistics (see below)

As a measure of the strength of the linear relation one can use R. R is called the multiple correlation coefficient between Y and the predictors (X1, …, Xp), i.e. the correlation between Y and Yfit, and R² is the proportion of total variation explained by regression (R² = SSreg / SStot).

Test on overall or reduced model

In our example Tpers = β 0 + β 1 time outdoors + β 2 Thome +β 3 wind speed + residual

The null hypothesis (H 0 ) is that there is no regression overall i.e. β 1 = β 2 =+βρ = 0

The test is based on the proportion of the SS explained by the regression relative to the residual SS. The test statistic (\(F = MS_{reg} / MS_{res}\)) has an F-distribution with \(df_1 = p\) and \(df_2 = n - p - 1\) (see an F-distribution table). In our example F = 5.49 (P < 0.01).

Suppose now that we want to test the hypothesis \(H_0: \beta_1 = \beta_2 = \beta_5 = 0\) (k = 3). In general, k of the p regression coefficients are set to zero under \(H_0\). The model that is valid if \(H_0\) is true is called the "reduced model". The idea is to compare the explained variability of the model at hand with that of the reduced model.

The test statistic (F):

follows an F-distribution with \(df_1 = k\) and \(df_2 = n - p - 1\).
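The formula for this statistic did not survive in the text above. A standard form of the partial F statistic, consistent with the degrees of freedom just stated, is sketched below. The labels "full" (the model at hand) and "reduced" are notation introduced here, not taken from the original.

```latex
F = \frac{\left( SS_{reg}^{\,full} - SS_{reg}^{\,reduced} \right) / k}
         {SS_{res}^{\,full} / (n - p - 1)}
```

The numerator is the extra variability explained by the k coefficients under test, per coefficient; the denominator is the residual mean square of the full model.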

If one or two variables are left out, the statistical package calculates \(SS_{reg}\) for the reduced model. If the P-value of the resulting F test lies between 0.05 and 0.10, there is some evidence, although not strong, that these variables together, independently of the others, contribute to the prediction of the outcome.

Assumptions

If a linear model is used, the following assumptions should be met. For each value of the independent variable, the distribution of the dependent variable must be normal. The variance of the distribution of the dependent variable should be constant for all values of the independent variable. The relationship between the dependent variable and the independent variables should be linear, and all observations should be independent. So the assumptions are: independence; linearity; normality; homoscedasticity. In other words, the residuals of a good model should be normally and randomly distributed, i.e. the unknown \(\sigma\) does not depend on X ("homoscedasticity") [2, 4, 6, 9].

Checking for violations of model assumptions

To check model assumptions we use residual analysis. There are several kinds of residuals; the most commonly used are the standardized residuals (ZRESID) and the studentized residuals (SRESID) [6]. If the model is correct, the residuals should have a normal distribution with mean zero and constant sd (i.e. not depending on X). To check this we can plot the residuals against X. If the variation changes with increasing X, homoscedasticity is violated. We can also use the Durbin-Watson test for serial correlation of the residuals, and casewise diagnostics for the cases meeting the selection criterion (outliers above n standard deviations). In summary, the residuals should be independent and normally distributed, with zero mean and constant standard deviation (homogeneity of variances) [4, 6].

To discover deviations from linearity and homogeneity of variance we can plot the residuals against each predictor or against the predicted values. Alternatively, the PARTIAL plot can be used to assess the linearity of a predictor variable. The partial plot for a predictor \(X_i\) is a plot of the residuals of Y regressed on the other X's against the residuals of \(X_i\) regressed on the other X's. The plot should be linear. To check the normality of the residuals we can use a histogram (with normal curve) or a normal probability plot [6, 7].

The goodness-of-fit of the model is assessed by studying the behavior of the residuals and by looking for "special" observations (individuals) such as outliers, observations with high "leverage", and influential points. Outliers are observations with an unusually large residual. High leverage points have an unusual x-pattern, i.e. they are outliers in predictor space. Influential points are individuals with a high influence on the estimate or standard error of one or more β's. An observation can be all three. It is recommended to inspect individuals with large residuals for outliers, and to use distance measures to identify high leverage points, i.e. cases with unusual combinations of values for the independent variables that may have a large impact on the regression model. For influential points, use influence statistics, i.e. the change in the regression coefficients (DfBeta(s)) and predicted values (DfFit) that results from the exclusion of a particular case. An overall measure of influence on all β's jointly is "Cook's distance" (COOK). Analogously, the overall measure for the standard errors is COVRATIO [6].

Deviations from model assumptions

We can use some tips to correct deviations from model assumptions. In case of curvilinearity in one or more plots we could add quadratic term(s). In case of non-homogeneity of the residual sd, we can try a transformation: log Y if \(S_{res}\) is proportional to predicted Y; square root of Y if the Y distribution is Poisson-like; 1/Y if \(S_{res}^2\) is proportional to predicted Y; \(Y^2\) if \(S_{res}^2\) decreases with Y. If linearity and homogeneity hold, then non-normality does not matter if the sample size is big enough (n ≥ 50 to 100). If linearity holds but homogeneity does not, then the estimates of the β's are correct, but the standard errors are not. They can be corrected by computing "robust" se's (sandwich, Huber's estimate) [4, 6, 9].

Selection methods for Linear Regression modeling

There are various selection methods for linear regression modeling that specify how independent variables are entered into the analysis. By using different methods, a variety of regression models can be constructed from the same set of variables. Forward variable selection enters the variables in the block one at a time based on entry criteria. Backward variable elimination enters all of the variables in the block in a single step and then removes them one at a time based on removal criteria. Stepwise variable entry and removal examines the variables in the block at each step for entry or removal. All variables must pass the tolerance criterion to be entered in the equation, regardless of the entry method specified. A variable is not entered if it would cause the tolerance of another variable already in the model to drop below the tolerance criterion [6]. During model fitting, the variables entered and removed from the model are displayed, together with various goodness-of-fit statistics such as \(R^2\), R squared change, the standard error of the estimate, and an analysis-of-variance table.

Related issues

Binary logistic regression models can be fitted using either the logistic regression procedure or the multinomial logistic regression procedure. An important theoretical distinction is that the logistic regression procedure produces all statistics and tests using data at the level of individual cases, while the multinomial logistic regression procedure internally aggregates cases to form subpopulations with identical covariate patterns for the predictors and bases its statistics and tests on these subpopulations. If all predictors are categorical, or any continuous predictors take on only a limited number of values, the multinomial procedure is preferred. As previously mentioned, use the Scatterplot procedure to screen data for multicollinearity. As with other forms of regression, multicollinearity among the predictors can lead to biased estimates and inflated standard errors. If all of your predictor variables are categorical, you can also use the loglinear procedure.

In order to explore correlation between variables, the Pearson or Spearman correlation for a pair of variables, \(r(X_i, X_j)\), is commonly used. Pearson's \(r(X_i, X_j)\) is a measure of linear association between two (ideally normally distributed) variables. \(r^2\) is the proportion of total variation of the one explained by the other, identical with simple regression (\(r = b \cdot S_x / S_y\), where b is the regression slope). Each correlation coefficient gives a measure of association between two variables without taking other variables into account. But there are several useful correlation concepts involving more variables.

The partial correlation coefficient between \(X_i\) and \(X_j\) is adjusted for other X's, e.g. \(r(X_1; X_2 / X_3)\). It can be viewed as an adjustment of the simple correlation taking into account the effect of a control variable: \(r(X; Y / Z)\), i.e. the correlation between X and Y controlled for Z.

The multiple correlation coefficient between one X and several other X's, e.g. \(r(X_1; X_2, X_3, X_4)\), is a measure of association between one variable and several other variables, \(r(Y; X_1, X_2, \ldots, X_k)\). The multiple correlation coefficient between Y and \(X_1, X_2, \ldots, X_k\) is defined as the simple Pearson correlation coefficient \(r(Y; Y_{fit})\) between Y and its fitted value in the regression model \(Y = \beta_0 + \beta_1 X_1 + \cdots + \beta_k X_k + \text{residual}\). The square of \(r(Y; X_1, \ldots, X_k)\) is interpreted as the proportion of variability in Y that can be explained by \(X_1, \ldots, X_k\). The null hypothesis [\(H_0: \rho(Y; X_1, \ldots, X_k) = 0\)] is tested with the F-test for overall regression, as in the multivariate regression model (see above) [6, 7].

The multiple-partial correlation coefficient between one X and several other X's is adjusted for some other X's, e.g. \(r(X_1; X_2, X_3, X_4 / X_5, X_6)\). It equals the relative increase in % explained variability in Y obtained by adding \(X_1, \ldots, X_k\) to a model already containing \(Z_1, \ldots, Z_p\) as predictors [6, 7].
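The partial correlation \(r(X; Y / Z)\) with a single control variable can be sketched in Python as follows. This is an illustration under stated assumptions: the data are made up, the function names are ours, and the closed-form expression used in `partial_corr` is the standard first-order partial correlation formula, which the text above does not spell out. The sketch also checks that this formula agrees with correlating the residuals of X and Y after each is regressed on Z.

```python
from math import sqrt

def pearson(u, v):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    suv = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    suu = sum((a - mu) ** 2 for a in u)
    svv = sum((b - mv) ** 2 for b in v)
    return suv / sqrt(suu * svv)

def partial_corr(x, y, z):
    """First-order partial correlation r(x; y / z) from the three pairwise correlations."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / sqrt((1 - rxz ** 2) * (1 - ryz ** 2))

def residuals(u, z):
    """Residuals of u after simple least-squares regression on z."""
    n = len(u)
    mu, mz = sum(u) / n, sum(z) / n
    slope = sum((a - mu) * (b - mz) for a, b in zip(u, z)) / sum((b - mz) ** 2 for b in z)
    return [(a - mu) - slope * (b - mz) for a, b in zip(u, z)]

# Illustrative data (made up for this sketch).
x = [2.0, 4.0, 5.0, 7.0, 9.0, 10.0]
y = [1.0, 3.0, 2.0, 6.0, 7.0, 9.0]
z = [1.0, 2.0, 2.0, 3.0, 5.0, 4.0]

r_partial = partial_corr(x, y, z)
r_from_residuals = pearson(residuals(x, z), residuals(y, z))
print(r_partial, r_from_residuals)
```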

Other interesting cases of multiple linear regression analysis include the comparison of two group means. Suppose, for example, that we wish to know whether mean HEIGHT differs between men and women. In a simple linear regression model of HEIGHT on a single group indicator with coefficient \(\beta_1\), testing \(\beta_1 = 0\) is equivalent to testing

mean HEIGHT of men = mean HEIGHT of women by means of Student's t-test.

The linear regression model assumes a normal distribution of HEIGHT in both groups, with equal \(\sigma\). This is exactly the model of the two-sample t-test. In the case of a comparison of several group means, we may wish to know whether mean HEIGHT differs between SES classes.

SES: 1 (low); 2 (middle) and 3 (high) (socioeconomic status)

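We can use the following linear regression model, with dummy variables \(Z_1\) (low class) and \(Z_2\) (middle class) and the high class as reference: \(HEIGHT = \beta_0 + \beta_1 Z_1 + \beta_2 Z_2 + \text{residual}\)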

Then β 1 and β 2 are interpreted as:

β 1 = difference in mean HEIGHT between low and high class

β 2 = difference in mean HEIGHT between middle and high class

Testing \(\beta_1 = \beta_2 = 0\) is equivalent to the one-way analysis of variance (ANOVA) F-test. The statistical model in both cases is in fact the same [4, 6, 7, 9].
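The link between regression on a group indicator and a comparison of group means can be checked numerically. The sketch below is an illustration only: the heights are made-up values, the 0/1 coding (0 = men, 1 = women) is an assumption, and `simple_ols` is a helper written for this example. It shows that the fitted intercept equals the mean of the group coded 0 and the slope equals the difference between the two group means.

```python
# Illustrative heights in cm (made-up values, not data from the text).
men = [178.0, 182.0, 175.0, 180.0]
women = [165.0, 170.0, 162.0, 167.0]

height = men + women
z = [0] * len(men) + [1] * len(women)  # assumed coding: 0 = men, 1 = women

def simple_ols(x, y):
    """Return (intercept, slope) of the least-squares line y = b0 + b1 * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

b0, b1 = simple_ols(z, height)
mean_men = sum(men) / len(men)
mean_women = sum(women) / len(women)

# b0 is the mean of the reference group; b1 is the difference in group means.
print(b0, b1, mean_women - mean_men)
```

The same idea extends to three or more groups by adding one dummy variable per non-reference group.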

Analysis of covariance (ANCOVA)

If we wish to compare a continuous variable Y (e.g. HEIGHT) between groups (e.g. men and women), corrected (adjusted or controlled) for one or more covariables X (confounders) (e.g. X = age or weight), then the question becomes: are the mean HEIGHTs of men and women different if men and women of equal weight are compared? Be aware that this is a different question from whether there is a difference between the mean HEIGHT of men and women, and the answers can be quite different! After correction, the difference between men and women could be opposite to, larger than, or smaller than the crude difference. In order to estimate the corrected difference the following multiple regression model is used:

\[ Y = \beta_0 + \beta_1 Z + \beta_2 X + \text{residual} \]

where Y: response variable (for example HEIGHT); Z: grouping variable (for example Z = 0 for men and Z = 1 for women); X: covariable (confounder) (for example weight).

So, for men the regression line is \(y = \beta_0 + \beta_2 X\) and for women it is \(y = (\beta_0 + \beta_1) + \beta_2 X\).

This model assumes that the regression lines are parallel. Therefore \(\beta_1\) is the vertical difference between them, and can be interpreted as the difference between the mean response Y of the groups, corrected for X. If the regression lines are not parallel, then the difference in mean Y depends on the value of X. This is called "interaction" or "effect modification".

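A more complicated model, in which interaction is admitted, is:

\[ Y = \beta_0 + \beta_1 Z + \beta_2 X + \beta_3 ZX + \text{residual} \]

regression line men: \(y = \beta_0 + \beta_2 X\)

regression line women: \(y = (\beta_0 + \beta_1) + (\beta_2 + \beta_3) X\)

The hypothesis of the absence of "effect modification" is tested by \(H_0: \beta_3 = 0\).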

As an example, suppose we want to know the difference in HEIGHT between men and women in a population sample, corrected for body weight.

We check the model with interaction:

Testing \(\beta_3 = 0\) gave a p-value much larger than 0.05. We therefore assume that there is no interaction, i.e. that the regression lines are parallel. Analysis of covariance can also be used for three or more groups, for example if we ask about the difference in mean HEIGHT between people with different levels of education (primary, medium, high), corrected for body weight. In a model where the three lines may not be parallel we have to check for interaction (effect modification) [7]. If testing the hypothesis that the coefficients of the interaction terms equal 0 gives no evidence against it, it is reasonable to assume a model without interaction. The hypothesis \(H_0: \beta_1 = \beta_2 = 0\), i.e. no differences between education levels when corrected for weight, is then tested by fitting that model; note that the P-values for \(Z_1\) and \(Z_2\) depend on the choice of the reference group. The purposes of ANCOVA are to correct for confounding and to increase the precision of an estimated difference.

In a general ANCOVA model as:

where Y is the response variable, there are k groups (dummy variables \(Z_1, Z_2, \ldots, Z_{k-1}\)) and \(X_1, \ldots, X_p\) are confounders,

there is a straightforward extension to arbitrary number of groups and covariables.

Coding categorical predictors in regression

One always has to figure out which way of coding categorical factors is used, in order to be able to interpret the parameter estimates. In "reference cell" coding, one of the categories plays the role of the reference category ("reference cell"), while the other categories are indicated by dummy variables. The β's corresponding to the dummies are interpreted as the difference between the corresponding category and the reference category. In "difference with overall mean" coding in the model of the previous example, [\(Y = \beta_0 + \beta_1 Z_1 + \beta_2 Z_2 + \cdots + \text{residual}\)], \(\beta_0\) is interpreted as the overall mean of the three levels of education, while \(\beta_1\) and \(\beta_2\) are interpreted as the deviations of the means of primary and medium from the overall mean, respectively. The deviation of the mean of the high level from the overall mean is given by \((-\beta_1 - \beta_2)\). In "cell means" coding in the previous model (without intercept), [\(Y = \beta_1 Z_1 + \beta_2 Z_2 + \beta_3 Z_3 + \text{residual}\)], \(\beta_1\) is the mean of the primary, \(\beta_2\) of the medium and \(\beta_3\) of the high level of education [6, 7, 9].
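The three coding schemes can be compared on a small example. The sketch below is a simplification under stated assumptions: it ignores any covariates (so the coefficients reduce to functions of the raw group means), it takes "high" as the reference category, it reads the "overall mean" as the unweighted mean of the three group means, and the height values are made up.

```python
# Illustrative heights by education level (made-up values).
groups = {
    "primary": [168.0, 171.0, 165.0],
    "medium": [172.0, 175.0, 170.0, 171.0],
    "high": [176.0, 178.0, 174.0],
}
means = {level: sum(values) / len(values) for level, values in groups.items()}

# "Reference cell" coding, with "high" as the (assumed) reference category.
reference_cell = {
    "b0": means["high"],
    "b1": means["primary"] - means["high"],
    "b2": means["medium"] - means["high"],
}

# "Difference with overall mean" coding: b0 is the unweighted mean of group means.
overall = sum(means.values()) / len(means)
deviation = {
    "b0": overall,
    "b1": means["primary"] - overall,
    "b2": means["medium"] - overall,
}
high_deviation = -deviation["b1"] - deviation["b2"]  # deviation of "high" from overall

# "Cell means" coding (no intercept): each coefficient is a group mean.
cell_means = {"b1": means["primary"], "b2": means["medium"], "b3": means["high"]}

print(reference_cell, deviation, high_deviation, cell_means)
```

All three parameterizations describe the same fitted group means; only the meaning of the individual coefficients changes.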

Conclusions

It is apparent to anyone who reads the medical literature today that some knowledge of biostatistics and epidemiology is a necessity. The goal in any data analysis is to extract accurate estimates from raw information. But before any testing or estimation, careful data editing is essential to check for errors, followed by data summarization. One of the most important and common questions is whether there is a statistical relationship between a response variable (Y) and explanatory variables (\(X_i\)). One way to answer this question is to employ regression analysis. There are various types of regression analysis. All these methods allow us to assess the impact of multiple variables on the response variable.

Quantitative Research Methods for Political Science, Public Policy and Public Administration: 4th Edition With Applications in R

11 Introduction to Multiple Regression

In the chapters in Part 3 of this book, we will introduce and develop multiple ordinary least squares regression – that is, linear regression models using two or more independent (or explanatory) variables to predict a dependent variable. Most users simply refer to it as “multiple regression”. 20 This chapter will provide the background in matrix algebra that is necessary to understand both the logic of, and notation commonly used for, multiple regression. As we go, we will apply the matrix form of regression in examples using R to provide a basic understanding of how multiple regression works. Chapter 12 will focus on the key assumptions about the concepts and data that are necessary for OLS regression to provide unbiased and efficient estimates of the relationships of interest, and it will address the key virtue of multiple regressions – the application of “statistical controls” in modeling relationships through the estimation of partial regression coefficients. Chapter 13 will turn to the process and set of choices involved in specifying and estimating multiple regression models, and to some of the automated approaches to model building you’d best avoid (and why). Chapter 14 turns to some more complex uses of multiple regression, such as the use and interpretation of “dummy” (dichotomous) independent variables, and modeling interactions in the effects of the independent variables. Chapter 15 concludes this part of the book with the application of diagnostic evaluations to regression model residuals, which will allow you to assess whether key modeling assumptions have been met and – if not – what the implications are for your model results. By the time you have mastered the chapters in this section, you will be well primed for understanding and using multiple regression analysis.

11.1 Matrix Algebra and Multiple Regression

Matrix algebra is widely used for the derivation of multiple regression because it permits a compact, intuitive depiction of regression analysis. For example, an estimated multiple regression model in scalar notation is expressed as: \(Y = A + B_1X_1 + B_2X_2 + B_3X_3 + E\). Using matrix notation, the same equation can be expressed in a more compact and (believe it or not!) intuitive form: \(y = Xb + e\).

In addition, matrix notation is flexible in that it can handle any number of independent variables. Operations performed on the model matrix \(X\) , are performed on all independent variables simultaneously. Lastly, you will see that matrix expression is widely used in statistical presentations of the results of OLS analysis. For all these reasons, then, we begin with the development of multiple regression in matrix form.

11.2 The Basics of Matrix Algebra

A matrix is a rectangular array of numbers with rows and columns. As noted, operations performed on matrices are performed on all elements of a matrix simultaneously. In this section we provide the basic understanding of matrix algebra that is necessary to make sense of the expression of multiple regression in matrix form.

11.2.1 Matrix Basics

The individual numbers in a matrix are referred to as “elements”. The elements of a matrix can be identified by their location in a row and column, denoted as \(A_{r,c}\) . In the following example, \(m\) will refer to the matrix row and \(n\) will refer to the column.

\(A_{m,n} = \begin{bmatrix} a_{1,1} & a_{1,2} & \cdots & a_{1,n} \\ a_{2,1} & a_{2,2} & \cdots & a_{2,n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m,1} & a_{m,2} & \cdots & a_{m,n} \end{bmatrix}\)

Therefore, in the following matrix:

\(A = \begin{bmatrix} 10 & 5 & 8 \\ -12 & 1 & 0 \end{bmatrix}\)

element \(a_{2,3} = 0\) and \(a_{1,2} = 5\) .

11.2.2 Vectors

A vector is a matrix with a single column or a single row. Here are some examples:

\(A = \begin{bmatrix} 6 \\ -1 \\ 8 \\ 11 \end{bmatrix}\)

\(A = \begin{bmatrix} 1 & 2 & 8 & 7 \\ \end{bmatrix}\)

11.2.3 Matrix Operations

There are several “operations” that can be performed with and on matrices. Most of these can be computed with R, so we will use R examples as we go along. As always, you will understand the operations better if you work the problems in R as we go. There is no need to load a data set this time – we will enter all the data we need in the examples.

11.2.4 Transpose

Transposing, or taking the “prime” of a matrix, switches the rows and columns. 21 The matrix

\(A = \begin{bmatrix} 10 & 5 & 8 \\ -12 & 1 & 0 \end{bmatrix}\)

once transposed is:

\(A' = \begin{bmatrix} 10 & -12 \\ 5 & 1 \\ 8 & 0 \end{bmatrix}\)

Note that the operation “hinges” on the element in the upper left-hand corner of \(A\), \(A_{1,1}\), so the first column of \(A\) becomes the first row of \(A'\). To transpose a matrix in R, create a matrix object then simply use the t command.
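The R listing for this step is not reproduced here. As a rough equivalent, the sketch below transposes the same matrix in plain Python; the helper name `transpose` is ours and is not an R or library function.

```python
# The 2 x 3 matrix A from the example, stored as a list of rows.
A = [[10, 5, 8],
     [-12, 1, 0]]

def transpose(m):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(column) for column in zip(*m)]

A_t = transpose(A)
print(A_t)  # rows of A' are the columns of A
```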

11.2.5 Adding Matrices

To add matrices together, they must have the same dimensions , meaning that the matrices must have the same number of rows and columns. Then, you simply add each element to its counterpart by row and column. For example:

\(A = \begin{bmatrix} 4 & -3 \\ 2 & 0 \end{bmatrix}, \quad B = \begin{bmatrix} 8 & 1 \\ 4 & -5 \end{bmatrix}\)

\(A+B = \begin{bmatrix} 4+8 & -3+1 \\ 2+4 & 0+(-5) \end{bmatrix} = \begin{bmatrix} 12 & -2 \\ 6 & -5 \end{bmatrix}\)

To add matrices together in R , simply create two matrix objects and add them together.
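As the R listing is not reproduced here, the following plain-Python sketch performs the same addition on the two matrices from the example. The helper name `add_matrices` is ours; it raises an error when the dimensions differ, mirroring the requirement stated above.

```python
A = [[4, -3],
     [2, 0]]
B = [[8, 1],
     [4, -5]]

def add_matrices(a, b):
    """Element-wise sum of two matrices with identical dimensions."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("matrices must have the same dimensions")
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

C = add_matrices(A, B)
print(C)
```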

See – how easy is that? No need to be afraid of a little matrix algebra!

11.2.6 Multiplication of Matrices

To multiply matrices they must be conformable , which means the number of columns in the first matrix must match the number of rows in the second matrix.

Then, multiply the elements of each row of the first matrix by the corresponding elements of each column of the second matrix and sum the products, as shown here:

\(A = \begin{bmatrix} 2 & 5 \\ 1 & 0 \\ 6 & -2 \end{bmatrix}, \quad B = \begin{bmatrix} 4 & 2 & 1 \\ 5 & 7 & 2 \end{bmatrix}\)

\(A \times B = \begin{bmatrix} (2 \times 4)+(5 \times 5) & (2 \times 2)+(5 \times 7) & (2 \times 1)+(5 \times 2) \\ (1 \times 4)+(0 \times 5) & (1 \times 2)+(0 \times 7) & (1 \times 1)+(0 \times 2) \\ (6 \times 4)+(-2 \times 5) & (6 \times 2)+(-2 \times 7) & (6 \times 1)+(-2 \times 2) \end{bmatrix} = \begin{bmatrix} 33 & 39 & 12 \\ 4 & 2 & 1 \\ 14 & -2 & 2 \end{bmatrix}\)

To multiply matrices in R, create two matrix objects and multiply them using the %*% operator.
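The R listing is not reproduced here. A plain-Python sketch of the same product follows; the helper name `matmul` is ours, and it checks conformability before multiplying.

```python
A = [[2, 5],
     [1, 0],
     [6, -2]]
B = [[4, 2, 1],
     [5, 7, 2]]

def matmul(a, b):
    """Matrix product a x b; columns of a must match rows of b."""
    if len(a[0]) != len(b):
        raise ValueError("matrices are not conformable")
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

AB = matmul(A, B)
print(AB)
```

Note that the order matters: here \(A\) is 3 x 2 and \(B\) is 2 x 3, so \(A \times B\) is 3 x 3, whereas \(B \times A\) would be 2 x 2.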

11.2.7 Identity Matrices

The identity matrix is a square matrix with 1’s on the diagonal and 0’s elsewhere. For a 4 x 4 matrix, it looks like this:

\(I = \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}\)

It acts like a 1 in algebra; a matrix ( \(A\) ) times the identity matrix ( \(I\) ) is \(A\) . This can be demonstrated in R .

Note that, if you want to square a column matrix (that is, multiply it by itself), you can simply take the transpose of the column (thereby making it a row matrix) and multiply them. The square of column matrix \(A\) is \(A'A\) .
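Both points can be checked with a short plain-Python sketch (the R listing is not reproduced here). The helper names are ours; the 3 x 2 matrix is the one from the multiplication example and the column vector is the one from the section on vectors.

```python
def identity(n):
    """n x n identity matrix."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def transpose(m):
    return [list(column) for column in zip(*m)]

def matmul(a, b):
    if len(a[0]) != len(b):
        raise ValueError("matrices are not conformable")
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

A = [[2, 5],
     [1, 0],
     [6, -2]]
A_times_I = matmul(A, identity(2))  # multiplying by I leaves A unchanged

col = [[6], [-1], [8], [11]]        # a 4 x 1 column vector
col_squared = matmul(transpose(col), col)  # A'A: a 1 x 1 matrix, the sum of squares

print(A_times_I, col_squared)
```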

11.2.8 Matrix Inversion

The matrix inversion operation is a bit like dividing any number by itself in algebra. An inverse of the \(A\) matrix is denoted \(A^{-1}\). Any invertible matrix multiplied by its inverse is equal to the identity matrix:

\(AA^{-1} = I\)

For example,

\(A = \begin{bmatrix} 1 & -1 \\ -1 & -1 \end{bmatrix} \text{ and } A^{-1} = \begin{bmatrix} 0.5 & -0.5 \\ -0.5 & -0.5 \end{bmatrix} \text{, therefore } AA^{-1} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}\)

However, matrix inversion is only applicable to a square (i.e., number of rows equals number of columns) matrix; only a square matrix can have an inverse.

Finding the Inverse of a Matrix

To find the inverse of a matrix, the values that will produce the identity matrix, create a second matrix of variables and solve for \(I\) .

\(A \times A^{-1} = \begin{bmatrix} 3 & 1 \\ 2 & 4 \end{bmatrix} \times \begin{bmatrix} a & c \\ b & d \end{bmatrix} = \begin{bmatrix} 3a+b & 3c+d \\ 2a+4b & 2c+4d \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}\)

Set \(3a+b=1\) and \(2a+4b=0\) and solve for \(a\) and \(b\) . In this case \(a = \frac{2}{5}\) and \(b = -\frac{1}{5}\) . Likewise, set \(3c+d=0\) and \(2c+4d=1\) ; solving for \(c\) and \(d\) produces \(c=-\frac{1}{10}\) and \(d=\frac{3}{10}\) . Therefore,

\(A^{-1} = \begin{bmatrix} \frac{2}{5} & -\frac{1}{10} \\ -\frac{1}{5} & \frac{3}{10} \end{bmatrix}\)

Finding the inverse matrix can also be done in R using the solve command.
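The R listing is not reproduced here. As a cross-check of the hand calculation, the plain-Python sketch below inverts the same 2 x 2 matrix with exact fractions, using the closed-form 2 x 2 inverse (swap the diagonal, negate the off-diagonal, divide by the determinant). The helper name `inverse_2x2` is ours; this is not how R's solve works internally.

```python
from fractions import Fraction

def inverse_2x2(m):
    """Exact inverse of a 2 x 2 matrix; raises if the matrix is singular."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular, no inverse exists")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]

A = [[3, 1],
     [2, 4]]
A_inv = inverse_2x2(A)

# Multiply A by its inverse to confirm that the result is the identity matrix.
product = [
    [sum(A[i][k] * A_inv[k][j] for k in range(2)) for j in range(2)]
    for i in range(2)
]
print(A_inv, product)
```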

OK – now we have all the pieces we need to apply matrix algebra to multiple regression.

11.3 OLS Regression in Matrix Form

As was the case with simple regression, we want to minimize the sum of the squared errors, \(e\). In matrix notation, the OLS model is \(y=Xb+e\), where \(e = y-Xb\). The sum of the squared \(e\) is:

\[ e'e = (y-Xb)'(y-Xb) \]

Therefore, we want to find the \(b\) that minimizes this function:

\[ e'e = y'y - 2b'X'y + b'X'Xb \]

To do this we take the derivative of \(e'e\) w.r.t. \(b\) and set it equal to \(0\):

\[ \frac{\partial e'e}{\partial b} = -2X'y + 2X'Xb = 0 \]

Then to remove the \(-2\)’s, we multiply each side by \(-1/2\). This leaves us with:

\[ X'y - X'Xb = 0, \quad \text{that is,} \quad (X'X)b = X'y \]

To solve for \(b\) we multiply both sides by the inverse of \(X'X\), \((X'X)^{-1}\). Note that for matrices this is equivalent to dividing each side by \(X'X\). Therefore:

\[ b = (X'X)^{-1}X'y \]

The \(X'X\) matrix is square, which is necessary for it to be invertible (i.e., for the inverse to exist). However, the \(X'X\) matrix can be non-invertible (i.e., singular) if \(n < k\), that is, if the number of independent variables \(k\) exceeds the sample size \(n\), or if one or more of the independent variables is perfectly correlated with another independent variable. This is termed perfect multicollinearity and will be discussed in more detail in Chapter 14. Also note that the \(X'X\) matrix contains the basis for all the necessary means, variances, and covariances among the \(X\)’s.

Regression in Matrix Form

- \(y\): \(n \times 1\) column vector of observations of the DV, \(Y\)

- \(\hat{y}\): \(n \times 1\) column vector of predicted \(Y\) values

- \(X\): \(n \times k\) matrix of observations of the IVs; the first column is all \(1\)s

- \(b\): \(k \times 1\) column vector of regression coefficients; the first row is \(A\)

- \(e\): \(n \times 1\) column vector of \(n\) residual values

Using the following steps, we will use R to calculate \(b\) , a vector of regression coefficients; \(\hat y\) , a vector of predicted \(y\) values; and \(e\) , a vector of residuals.

We want to fit the model \(y = Xb+e\) to the following matrices:

\[ y = \begin{bmatrix} 6 \\ 11 \\ 4 \\ 3 \\ 5 \\ 9 \\ 10 \end{bmatrix}\quad X = \begin{bmatrix} 1 & 4 & 5 & 4 \\ 1 & 7 & 2 & 3 \\ 1 & 2 & 6 & 4 \\ 1 & 1 & 9 & 6 \\ 1 & 3 & 4 & 5 \\ 1 & 7 & 3 & 4 \\ 1 & 8 & 2 & 5 \end{bmatrix} \]

Create two objects, the \(y\) matrix and the \(X\) matrix.

Calculate \(b\) : \(b = (X'X)^{-1}X'y\) .

We can calculate this in R in just a few steps. First, we transpose \(X\) to get \(X'\) .

Then we multiply \(X'\) by \(X\) to get \(X'X\).

Next, we find the inverse of \(X'X\): \((X'X)^{-1}\).

Then, we multiply \((X'X)^{-1}\) by \(X'\).

Finally, to obtain the \(b\) vector we multiply \((X'X)^{-1}X'\) by \(y\).

We can use the lm function in R to check and see whether our “by hand” matrix approach gets the same result as does the “canned” multiple regression routine:

Calculate \(\hat y\) : \(\hat y=Xb\) .

To calculate the \(\hat y\) vector in R , simply multiply X and b .

Calculate \(e\) .

To calculate \(e\), the vector of residuals, simply subtract the vector \(\hat y\) from the vector \(y\).
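The R listings for these steps are not reproduced here. The plain-Python sketch below walks through the same sequence on the \(y\) and \(X\) given above: transpose, \(X'X\), its inverse, \(b = (X'X)^{-1}X'y\), \(\hat y = Xb\), and \(e = y - \hat y\). It is an illustration under stated assumptions: the helper names are ours, and the inverse is computed by Gauss-Jordan elimination with exact fractions, which is a choice made for this sketch and not a description of R's solve or lm.

```python
from fractions import Fraction

y = [6, 11, 4, 3, 5, 9, 10]
X = [[1, 4, 5, 4], [1, 7, 2, 3], [1, 2, 6, 4], [1, 1, 9, 6],
     [1, 3, 4, 5], [1, 7, 3, 4], [1, 8, 2, 5]]

def transpose(m):
    return [list(column) for column in zip(*m)]

def matmul(a, b):
    if len(a[0]) != len(b):
        raise ValueError("matrices are not conformable")
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def inverse(m):
    """Gauss-Jordan inversion of a square matrix using exact fractions."""
    n = len(m)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_value = aug[col][col]
        aug[col] = [v / pivot_value for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

y_col = [[v] for v in y]            # y as an n x 1 column vector
Xt = transpose(X)                   # X'
XtX = matmul(Xt, X)                 # X'X
XtX_inv = inverse(XtX)              # (X'X)^{-1}
b = matmul(matmul(XtX_inv, Xt), y_col)   # b = (X'X)^{-1} X'y
y_hat = matmul(X, b)                # predicted values, Xb
e = [[yi[0] - fi[0]] for yi, fi in zip(y_col, y_hat)]  # residuals, y - y_hat

print([float(row[0]) for row in b])
```

A useful sanity check is that the residuals are orthogonal to every column of \(X\), i.e. \(X'e = 0\); this follows directly from the normal equations \((X'X)b = X'y\).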

11.4 Summary

Whew! Now, using matrix algebra and calculus, you have derived the squared-error minimizing formula for multiple regression. Not only that, you can use the matrix form, in R , to calculate the estimated slope and intercept coefficients, predict \(Y\) , and even calculate the regression residuals. We’re on our way to true Geekdome!

Next stop: the key assumptions necessary for OLS to provide the best, unbiased, linear estimates (BLUE) and the basis for statistical controls using multiple independent variables in regression models.

It is useful to keep in mind the difference between “multiple regression” and “multivariate regression”. The latter predicts 2 or more dependent variables using an independent variable. ↩

The use of “prime” in matrix algebra should not be confused with the use of “prime” in the expression of a derivative, as in \(X'\). ↩

The Multiple Regression Analysis

- First Online: 04 September 2024

- Franz Kronthaler 2

In the previous chapter, we saw that usually one independent variable is not sufficient to adequately describe the dependent variable. Normally, several factors have an influence on the dependent variable. Hence, typically we need multiple regression analysis, also known as multivariate regression analysis, to describe an issue.

Author information

Authors and affiliations.

Hochschule Graubünden FHGR, Chur, Switzerland

Franz Kronthaler

Corresponding author

Correspondence to Franz Kronthaler .

Copyright information

© 2024 The Author(s), under exclusive license to Springer-Verlag GmbH, DE, part of Springer Nature

About this chapter

Kronthaler, F. (2024). The Multiple Regression Analysis. In: Statistics Applied with the R Commander. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-69107-6_20

DOI : https://doi.org/10.1007/978-3-662-69107-6_20

Published : 04 September 2024

Publisher Name : Springer, Berlin, Heidelberg

Print ISBN : 978-3-662-69106-9

Online ISBN : 978-3-662-69107-6

eBook Packages : Mathematics and Statistics Mathematics and Statistics (R0)

Regression Analysis

Regression analysis is a quantitative research method which is used when the study involves modelling and analysing several variables, where the relationship includes a dependent variable and one or more independent variables. In simple terms, regression analysis is a quantitative method used to test the nature of relationships between a dependent variable and one or more independent variables.

The basic form of regression models includes unknown parameters (β), independent variables (X), and the dependent variable (Y).

A regression model specifies the relation of the dependent variable (Y) to a function of the independent variables (X) and unknown parameters (β):

Y ≈ f (X, β)

Regression equation can be used to predict the values of ‘y’, if the value of ‘x’ is given, and both ‘y’ and ‘x’ are the two sets of measures of a sample size of ‘n’. The formulae for regression equation would be

Do not be intimidated by visual complexity of correlation and regression formulae above. You don’t have to apply the formula manually, and correlation and regression analyses can be run with the application of popular analytical software such as Microsoft Excel, Microsoft Access, SPSS and others.

Linear regression analysis is based on the following set of assumptions:

1. Assumption of linearity . There is a linear relationship between dependent and independent variables.

2. Assumption of homoscedasticity . The residuals have the same variance across all values of the independent variables.

3. Assumption of absence of collinearity or multicollinearity . There is no strong correlation between two or more independent variables.

4. Assumption of normal distribution . The residuals of the model are normally distributed.

John Dudovskiy

Multiple Regression Analysis using SPSS Statistics

Introduction

Multiple regression is an extension of simple linear regression. It is used when we want to predict the value of a variable based on the value of two or more other variables. The variable we want to predict is called the dependent variable (or sometimes, the outcome, target or criterion variable). The variables we are using to predict the value of the dependent variable are called the independent variables (or sometimes, the predictor, explanatory or regressor variables).

For example, you could use multiple regression to understand whether exam performance can be predicted based on revision time, test anxiety, lecture attendance and gender. Alternately, you could use multiple regression to understand whether daily cigarette consumption can be predicted based on smoking duration, age when started smoking, smoker type, income and gender.

Multiple regression also allows you to determine the overall fit (variance explained) of the model and the relative contribution of each of the predictors to the total variance explained. For example, you might want to know how much of the variation in exam performance can be explained by revision time, test anxiety, lecture attendance and gender "as a whole", but also the "relative contribution" of each independent variable in explaining the variance.
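The idea of "overall fit" and "relative contribution" can be made concrete with a short sketch. The Python below is not part of the SPSS Statistics procedure; it is a minimal illustration, assuming NumPy is available, that computes R² for a model and then the drop in R² when each predictor is removed (one of several possible ways to express a predictor's unique contribution). The function names and the data are made up for illustration.

```python
import numpy as np

def design_matrix(X):
    """Prepend a column of ones (the intercept) to the predictor matrix."""
    X = np.asarray(X, dtype=float)
    return np.column_stack([np.ones(X.shape[0]), X])

def r_squared(X, y):
    """Proportion of the variance in y explained by a least-squares fit on X."""
    y = np.asarray(y, dtype=float)
    D = design_matrix(X)
    coef, *_ = np.linalg.lstsq(D, y, rcond=None)
    resid = y - D @ coef
    return 1.0 - float(resid @ resid) / float(np.sum((y - y.mean()) ** 2))

def unique_contributions(X, y):
    """Drop in R^2 when each predictor is left out of the model in turn."""
    X = np.asarray(X, dtype=float)
    full = r_squared(X, y)
    return [full - r_squared(np.delete(X, j, axis=1), y) for j in range(X.shape[1])]

# Synthetic data, invented purely for this illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = 1.0 + 2.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(size=50)
print("R^2 for the whole model:", round(r_squared(X, y), 3))
print("Unique contribution of each predictor:", np.round(unique_contributions(X, y), 3))
```

Because predictors usually overlap in what they explain, these unique contributions generally do not add up to the overall R².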

This "quick start" guide shows you how to carry out multiple regression using SPSS Statistics, as well as interpret and report the results from this test. However, before we introduce you to this procedure, you need to understand the different assumptions that your data must meet in order for multiple regression to give you a valid result. We discuss these assumptions next.

Assumptions.

When you choose to analyse your data using multiple regression, part of the process involves checking to make sure that the data you want to analyse can actually be analysed using multiple regression. You need to do this because it is only appropriate to use multiple regression if your data "passes" eight assumptions that are required for multiple regression to give you a valid result. In practice, checking for these eight assumptions just adds a little bit more time to your analysis, requiring you to click a few more buttons in SPSS Statistics when performing your analysis, as well as think a little bit more about your data, but it is not a difficult task.

Before we introduce you to these eight assumptions, do not be surprised if, when analysing your own data using SPSS Statistics, one or more of these assumptions is violated (i.e., not met). This is not uncommon when working with real-world data rather than textbook examples, which often only show you how to carry out multiple regression when everything goes well! However, don’t worry. Even when your data fails certain assumptions, there is often a solution to overcome this. First, let's take a look at these eight assumptions:

- Assumption #1: Your dependent variable should be measured on a continuous scale (i.e., it is either an interval or ratio variable). Examples of variables that meet this criterion include revision time (measured in hours), intelligence (measured using IQ score), exam performance (measured from 0 to 100), weight (measured in kg), and so forth. You can learn more about interval and ratio variables in our article: Types of Variable. If your dependent variable was measured on an ordinal scale, you will need to carry out ordinal regression rather than multiple regression. Examples of ordinal variables include Likert items (e.g., a 7-point scale from "strongly agree" through to "strongly disagree"), amongst other ways of ranking categories (e.g., a 3-point scale explaining how much a customer liked a product, ranging from "Not very much" to "Yes, a lot").

- Assumption #2: You have two or more independent variables, which can be either continuous (i.e., an interval or ratio variable) or categorical (i.e., an ordinal or nominal variable). For examples of continuous and ordinal variables, see the bullet above. Examples of nominal variables include gender (e.g., 2 groups: male and female), ethnicity (e.g., 3 groups: Caucasian, African American and Hispanic), physical activity level (e.g., 4 groups: sedentary, low, moderate and high), profession (e.g., 5 groups: surgeon, doctor, nurse, dentist, therapist), and so forth. Again, you can learn more about variables in our article: Types of Variable. If one of your independent variables is dichotomous and considered a moderating variable, you might need to run a Dichotomous moderator analysis.

- Assumption #3: You should have independence of observations (i.e., independence of residuals), which you can check using the Durbin-Watson statistic, a simple test to run using SPSS Statistics. We explain how to interpret the result of the Durbin-Watson statistic, as well as showing you the SPSS Statistics procedure required, in our enhanced multiple regression guide.

- Assumption #4: There needs to be a linear relationship between (a) the dependent variable and each of your independent variables, and (b) the dependent variable and the independent variables collectively. Whilst there are a number of ways to check for these linear relationships, we suggest creating scatterplots and partial regression plots using SPSS Statistics, and then visually inspecting these plots to check for linearity. If the relationships displayed in your scatterplots and partial regression plots are not linear, you will have to either run a non-linear regression analysis or "transform" your data, which you can do using SPSS Statistics. In our enhanced multiple regression guide, we show you how to: (a) create scatterplots and partial regression plots to check for linearity when carrying out multiple regression using SPSS Statistics; (b) interpret different scatterplot and partial regression plot results; and (c) transform your data using SPSS Statistics if you do not have linear relationships between your variables.

- Assumption #5: Your data needs to show homoscedasticity, which is where the variances along the line of best fit remain similar as you move along the line. We explain more about what this means and how to assess the homoscedasticity of your data in our enhanced multiple regression guide. When you analyse your own data, you will need to plot the studentized residuals against the unstandardized predicted values. In our enhanced multiple regression guide, we explain: (a) how to test for homoscedasticity using SPSS Statistics; (b) some of the things you will need to consider when interpreting your data; and (c) possible ways to continue with your analysis if your data fails to meet this assumption.

- Assumption #6: Your data must not show multicollinearity, which occurs when you have two or more independent variables that are highly correlated with each other. This leads to problems with understanding which independent variable contributes to the variance explained in the dependent variable, as well as technical issues in calculating a multiple regression model. Therefore, in our enhanced multiple regression guide, we show you: (a) how to use SPSS Statistics to detect multicollinearity through an inspection of correlation coefficients and Tolerance/VIF values; and (b) how to interpret these correlation coefficients and Tolerance/VIF values so that you can determine whether your data meets or violates this assumption.

- Assumption #7: There should be no significant outliers, high leverage points or highly influential points. Outliers, leverage and influential points are different terms used to represent observations in your data set that are in some way unusual when you wish to perform a multiple regression analysis. These different classifications of unusual points reflect the different impact they have on the regression line. An observation can be classified as more than one type of unusual point. However, all these points can have a very negative effect on the regression equation that is used to predict the value of the dependent variable based on the independent variables. This can change the output that SPSS Statistics produces and reduce the predictive accuracy of your results as well as the statistical significance. Fortunately, when using SPSS Statistics to run multiple regression on your data, you can detect possible outliers, high leverage points and highly influential points. In our enhanced multiple regression guide, we: (a) show you how to detect outliers using "casewise diagnostics" and "studentized deleted residuals", which you can do using SPSS Statistics, and discuss some of the options you have in order to deal with outliers; (b) check for leverage points using SPSS Statistics and discuss what you should do if you have any; and (c) check for influential points in SPSS Statistics using a measure of influence known as Cook's Distance, before presenting some practical approaches in SPSS Statistics to deal with any influential points you might have.

- Assumption #8: Finally, you need to check that the residuals (errors) are approximately normally distributed (we explain these terms in our enhanced multiple regression guide). Two common methods to check this assumption include using: (a) a histogram (with a superimposed normal curve) and a Normal P-P Plot; or (b) a Normal Q-Q Plot of the studentized residuals. Again, in our enhanced multiple regression guide, we: (a) show you how to check this assumption using SPSS Statistics, whether you use a histogram (with superimposed normal curve) and Normal P-P Plot, or Normal Q-Q Plot; (b) explain how to interpret these diagrams; and (c) provide a possible solution if your data fails to meet this assumption.
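Two of the statistics named in the assumptions above, the Durbin-Watson statistic (Assumption #3) and the Tolerance/VIF values (Assumption #6), are simple enough to compute by hand. The Python sketch below is an illustration only, assuming NumPy is available; it is not how SPSS Statistics is operated, and the function names are hypothetical. Durbin-Watson is the sum of squared successive residual differences divided by the sum of squared residuals, and the VIF of a predictor is 1 / (1 - R²) from regressing that predictor on all the others.

```python
import numpy as np

def durbin_watson(residuals):
    """Sum of squared successive differences over the sum of squared residuals.

    The statistic ranges from 0 to 4; values near 2 suggest little
    first-order autocorrelation in the residuals.
    """
    e = np.asarray(residuals, dtype=float)
    return float(np.sum(np.diff(e) ** 2) / np.sum(e ** 2))

def vif(X):
    """Variance inflation factor for each column of the predictor matrix X."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    factors = []
    for j in range(p):
        target = X[:, j]
        # Regress predictor j on an intercept plus all remaining predictors.
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        factors.append(1.0 / (1.0 - r2))
    return np.array(factors)

def tolerance(X):
    """Tolerance is the reciprocal of the VIF."""
    return 1.0 / vif(X)

# Made-up predictors: the second column is almost a copy of the first.
x1 = np.arange(10, dtype=float)
x2 = x1 + np.tile([0.1, -0.1], 5)
print("VIF:", vif(np.column_stack([x1, x2])))
```

Which VIF or Tolerance value counts as a violation is a judgment call that this sketch does not make.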

You can check assumptions #3, #4, #5, #6, #7 and #8 using SPSS Statistics. Assumptions #1 and #2 should be checked first, before moving onto assumptions #3, #4, #5, #6, #7 and #8. Just remember that if you do not run the statistical tests on these assumptions correctly, the results you get when running multiple regression might not be valid. This is why we dedicate a number of sections of our enhanced multiple regression guide to help you get this right. You can find out about our enhanced content as a whole on our Features: Overview page, or more specifically, learn how we help with testing assumptions on our Features: Assumptions page.
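For the unusual points described in Assumption #7, the sketch below shows how leverage and Cook's Distance can be computed from a fitted model. It is a minimal illustration in Python, assuming NumPy is available; the function name and the data are invented, and no cut-off values are implied. Leverage is the diagonal of the hat matrix, and Cook's Distance combines each residual with its leverage.

```python
import numpy as np

def influence_measures(X, y):
    """Return (leverage, residuals, Cook's distance) for a least-squares fit."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X.reshape(-1, 1)
    y = np.asarray(y, dtype=float)
    n = len(y)
    design = np.column_stack([np.ones(n), X])
    k = design.shape[1]  # number of coefficients, including the intercept

    # Diagonal of the hat matrix: row-wise sum of squares of Q from a QR factorisation.
    q, _ = np.linalg.qr(design)
    leverage = np.sum(q ** 2, axis=1)

    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    mse = float(resid @ resid) / (n - k)

    # Cook's distance: D_i = e_i^2 / (k * MSE) * h_i / (1 - h_i)^2
    cooks_d = resid ** 2 / (k * mse) * leverage / (1.0 - leverage) ** 2
    return leverage, resid, cooks_d

# Made-up data: ten points on a line plus one distant point that is off the line.
x = np.append(np.arange(10, dtype=float), 30.0)
y = np.append(2.0 * np.arange(10, dtype=float), 0.0)
lev, res, cook = influence_measures(x, y)
print("Case with the largest Cook's distance:", int(np.argmax(cook)))
```

A case identifier such as the caseno variable used in this guide's data set makes it easy to trace a flagged index back to the original record.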

In the section, Procedure, we illustrate the SPSS Statistics procedure to perform a multiple regression assuming that no assumptions have been violated. First, we introduce the example that is used in this guide.

A health researcher wants to be able to predict "VO2 max", an indicator of fitness and health. Normally, measuring VO2 max requires expensive laboratory equipment and necessitates that an individual exercise to their maximum (i.e., until they can no longer continue exercising due to physical exhaustion). This can put off those individuals who are not very active/fit and those individuals who might be at higher risk of ill health (e.g., older unfit subjects). For these reasons, it has been desirable to find a way of predicting an individual's VO2 max based on attributes that can be measured more easily and cheaply. To this end, a researcher recruited 100 participants to perform a maximal VO2 max test, but also recorded their "age", "weight", "heart rate" and "gender". Heart rate is the average of the last 5 minutes of a 20-minute, much easier, lower workload cycling test. The researcher's goal is to be able to predict VO2 max based on these four attributes: age, weight, heart rate and gender.
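To show what the researcher's model looks like outside SPSS Statistics, here is a minimal Python sketch, assuming NumPy is available. The guide's actual data set is not reproduced here, so the data are synthetic: the coefficients in `TRUE_COEF`, the value ranges, and the 0/1 coding of gender are all invented assumptions and say nothing about real VO2 max values.

```python
import numpy as np

# Hypothetical coefficients, used only to generate synthetic data.
TRUE_COEF = {"intercept": 90.0, "age": -0.2, "weight": -0.3,
             "heart_rate": -0.1, "gender": 10.0}

def simulate(n=100, noise_sd=3.0, seed=0):
    """Generate synthetic predictors (age, weight, heart rate, gender) and VO2 max."""
    rng = np.random.default_rng(seed)
    age = rng.uniform(20, 65, n)
    weight = rng.uniform(50, 110, n)
    heart_rate = rng.uniform(100, 180, n)
    gender = rng.integers(0, 2, n).astype(float)  # assumed 0/1 dummy coding
    X = np.column_stack([age, weight, heart_rate, gender])
    slopes = np.array([TRUE_COEF["age"], TRUE_COEF["weight"],
                       TRUE_COEF["heart_rate"], TRUE_COEF["gender"]])
    y = TRUE_COEF["intercept"] + X @ slopes + rng.normal(0.0, noise_sd, n)
    return X, y

def fit(X, y):
    """Least-squares coefficients: intercept first, then one slope per predictor."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef

def predict(coef, age, weight, heart_rate, gender):
    """Predicted VO2 max for one participant from the fitted coefficients."""
    return float(coef[0] + coef[1] * age + coef[2] * weight
                 + coef[3] * heart_rate + coef[4] * gender)

X, y = simulate()
coef = fit(X, y)
print("Estimated coefficients:", np.round(coef, 3))
print("Prediction for one hypothetical participant:", round(predict(coef, 30, 70, 140, 1), 2))
```

With noisy data the estimated coefficients only approximate the values used to generate it, which is the same situation the researcher faces with real measurements.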

Setup in SPSS Statistics

In SPSS Statistics, we created six variables: (1) VO2 max, which is the maximal aerobic capacity; (2) age, which is the participant's age; (3) weight, which is the participant's weight (technically, it is their 'mass'); (4) heart_rate, which is the participant's heart rate; (5) gender, which is the participant's gender; and (6) caseno, which is the case number. The caseno variable is used to make it easy for you to eliminate cases (e.g., "significant outliers", "high leverage points" and "highly influential points") that you have identified when checking for assumptions. In our enhanced multiple regression guide, we show you how to correctly enter data in SPSS Statistics to run a multiple regression when you are also checking for assumptions. You can learn about our enhanced data setup content on our Features: Data Setup page. Alternatively, see our generic, "quick start" guide: Entering Data in SPSS Statistics.

Test Procedure in SPSS Statistics

The seven steps below show you how to analyse your data using multiple regression in SPSS Statistics when none of the eight assumptions in the previous section, Assumptions, have been violated. At the end of these seven steps, we show you how to interpret the results from your multiple regression. If you are looking for help to make sure your data meets assumptions #3, #4, #5, #6, #7 and #8, which are required when using multiple regression and can be tested using SPSS Statistics, you can learn more in our enhanced guide (see our Features: Overview page to learn more).

Note: The procedure that follows is identical for SPSS Statistics versions 18 to 28, as well as the subscription version of SPSS Statistics, with version 28 and the subscription version being the latest versions of SPSS Statistics. However, in version 27 and the subscription version, SPSS Statistics introduced a new look to their interface called "SPSS Light", replacing the previous look for versions 26 and earlier, which was called "SPSS Standard". Therefore, if you have SPSS Statistics versions 27 or 28 (or the subscription version of SPSS Statistics), the images that follow will be light grey rather than blue. However, the procedure is identical.

Published with written permission from SPSS Statistics, IBM Corporation.