- Open access

- Published: 20 April 2012

The risk-benefit task of research ethics committees: An evaluation of current approaches and the need to incorporate decision studies methods

- Rosemarie D L C Bernabe 1 ,

- Ghislaine J M W van Thiel 1 ,

- Jan A M Raaijmakers 2 &

- Johannes J M van Delden 1

BMC Medical Ethics, volume 13, Article number: 6 (2012)


Research ethics committees (RECs) are tasked with assessing the risks and the benefits of a trial. Currently, two procedure-level approaches predominate: the Net Risk Test and the Component Analysis.

By looking at decision studies, we see that both procedure-level approaches conflate the various risk-benefit tasks, i.e., risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making. This conflation makes the RECs’ risk-benefit task confusing, if not impossible. We further find that RECs are not meant to do all the risk-benefit tasks; instead, RECs are meant to evaluate risks and benefits, appraise risk treatment suggestions, and make the final decision.

As such, research ethics would benefit from looking beyond the procedure-level approaches and allowing disciplines like decision studies to be involved in the discourse on RECs’ risk-benefit task.


Research ethics committees (RECs) are tasked with a risk-benefit assessment of proposed research with human subjects for at least two reasons: to verify the scientific and social validity of the research, since unscientific research is also unethical research; and to ensure that the risks to which participants are exposed are necessary, justified, and minimized [ 1 ].

Since 1979, specifically through the Belmont Report, a “systematic, nonarbitrary analysis of risks and benefits” has been called for; yet commentaries on the lack of a generally acknowledged, suitable risk-benefit assessment method continue to the present [ 1 ]. The US National Bioethics Advisory Commission (US-NBAC), for example, stated the following in its 2001 report, Ethical and Policy Issues in Research Involving Human Participants:

"An IRB’s¹ assessment of risks and potential benefits is central to determining that a research study is ethically acceptable and would protect participants, which is not an easy task, because there are no clear criteria for IRBs to use in judging whether the risks of research are reasonable in relation to what might be gained by the research participant or society [ 2 ]."

¹ An institutional review board (IRB) is synonymous with an ethics committee. For consistency’s sake, we shall use REC throughout this paper.

The lack of universally accepted risk-benefit assessment criteria does not mean that the research ethics literature says nothing about the matter. In the same 2001 report, the US-NBAC recommended Weijer and Miller’s Component Analysis to RECs for evaluating clinical research. In reaction to Weijer and P. Miller, Wendler and F. Miller proposed the Net Risk Test. For convenience’s sake, we shall use the term “procedure-level approaches” [ 3 ] to refer to the models of Weijer et al. and Wendler et al.

In spite of their ideological differences, both procedure-level approaches are procedural in the sense that both propose a step-by-step process for doing the risk-benefit assessment. In this paper, we shall not tackle their differences; rather, we are interested in their similarities. Our position is that both approaches fall short of providing an evaluation procedure that is systematic and nonarbitrary precisely because they conflate the various risk-benefit tasks, i.e., risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making [ 4 – 6 ]. As such, we recommend clarifying what these individual tasks refer to and to whom each task should be assigned. Lastly, we shall argue that RECs would benefit from looking into the current inputs of decision studies on the various risk-benefit tasks.

The procedure-level approaches

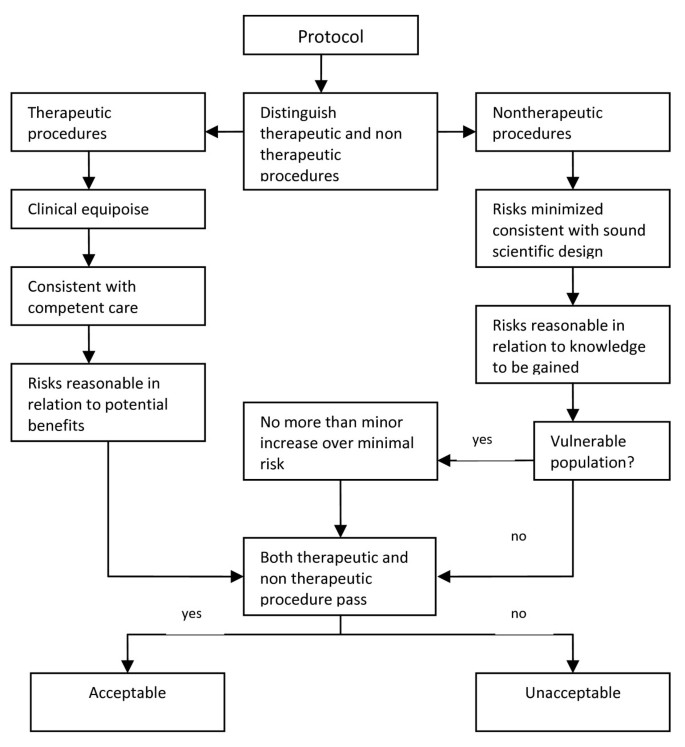

Charles Weijer and Paul Miller’s Component Analysis (Figure 1) requires research protocol procedures, or “components,” to be evaluated separately, since the probable benefits of one component must not be used to justify the risks that another component poses [ 2 ]. In this system, RECs need to distinguish procedures in the protocol that have therapeutic warrant from those that do not, since therapeutic procedures are analyzed differently from non-therapeutic ones. The approach works on the assumption that a therapeutic warrant, that is, the reasonable belief that participants may directly benefit from a procedure, justifies more risk for the participants [ 7 ]. As such, therapeutic procedures ought to be evaluated on the following conditions, in sequence: that clinical equipoise exists, that is, that there is an “honest professional disagreement in the community of expert practitioners as to the preferred treatment” [ 8 ]; that the “procedure is consistent with competent care; and risk is reasonable in relation to potential benefits to subjects” [ 7 ]. Non-therapeutic procedures, on the other hand, need to be evaluated on the following conditions: the “risks are minimized and are consistent with sound scientific design; risks are reasonable in relation to knowledge to be gained; and if vulnerable population is involved, (there must be) no more than minor increase over minimal risk” [ 7 ]. Lastly, the REC needs to determine whether both therapeutic and non-therapeutic procedures are acceptable [ 7 ]. If all components “pass,” then the “research risks are reasonable in relation to anticipated benefits” [ 7 ].

Figure 1. Component Analysis [ 7 , 9 ].
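As a reading aid, the decision logic of the Component Analysis described above can be sketched as a short Python program. This is a hypothetical sketch, not Weijer and Miller’s own formalization: the `Component` fields are assumed yes/no judgments that the REC would still have to reach through deliberation, and all names and the example components are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """One protocol procedure plus the REC's yes/no judgments about it (hypothetical fields)."""
    name: str
    therapeutic: bool  # True if the procedure has therapeutic warrant
    # Conditions for therapeutic procedures
    clinical_equipoise: bool = False
    consistent_with_competent_care: bool = False
    risk_reasonable_to_subject_benefit: bool = False
    # Conditions for non-therapeutic procedures
    risks_minimized_and_sound_design: bool = False
    risk_reasonable_to_knowledge: bool = False
    vulnerable_population: bool = False
    at_most_minor_increase_over_minimal_risk: bool = False


def component_passes(c: Component) -> bool:
    """Judge one component on its own terms; other components' benefits play no role."""
    if c.therapeutic:
        return (c.clinical_equipoise
                and c.consistent_with_competent_care
                and c.risk_reasonable_to_subject_benefit)
    passes = c.risks_minimized_and_sound_design and c.risk_reasonable_to_knowledge
    if c.vulnerable_population:
        # Additional threshold when a vulnerable population is involved
        passes = passes and c.at_most_minor_increase_over_minimal_risk
    return passes


def component_analysis(components: list) -> bool:
    """Research risks count as reasonable only if every component passes."""
    return all(component_passes(c) for c in components)


drug_arm = Component("study drug arm", therapeutic=True, clinical_equipoise=True,
                     consistent_with_competent_care=True,
                     risk_reasonable_to_subject_benefit=True)
research_biopsy = Component("research biopsy", therapeutic=False,
                            risks_minimized_and_sound_design=True,
                            risk_reasonable_to_knowledge=True,
                            vulnerable_population=True,
                            at_most_minor_increase_over_minimal_risk=False)
print(component_analysis([drug_arm, research_biopsy]))  # False: the biopsy fails
```

Each boolean stands for a judgment the REC must still make; the sketch only fixes the order in which those judgments are combined.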

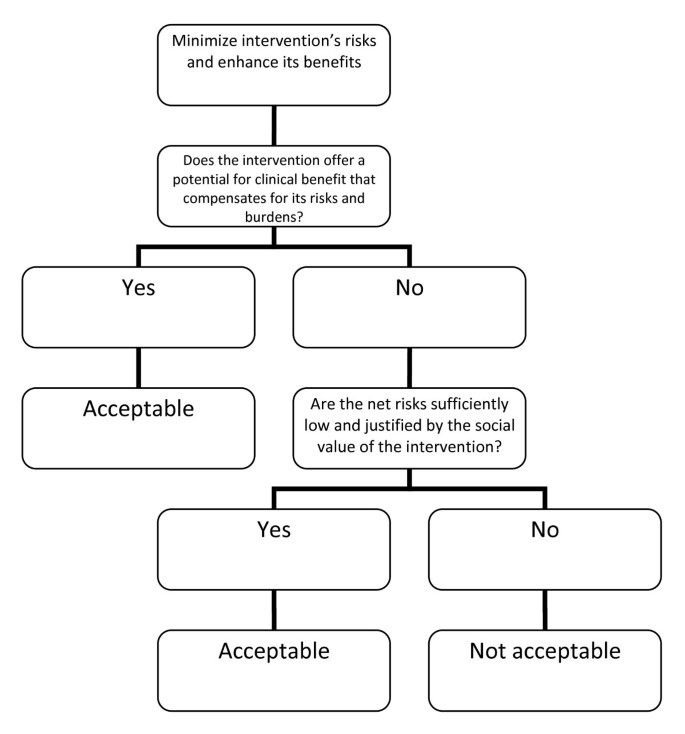

David Wendler and Franklin Miller, on the other hand, developed the Net Risk Test (Figure 2) as a reaction to the Component Analysis. This system requires RECs to first “minimize the risks of all interventions included in the study” [ 10 ]. Next, the REC reviews the remaining risks by looking at each intervention in the study and evaluating whether the intervention “offers a potential for clinical benefit that compensates for its risks and burdens” [ 10 ]. If it does, the intervention is acceptable; otherwise, the REC needs to determine whether the net risk is “sufficiently low and justified by the social value of the intervention” [ 10 ]. By net risk, they refer to the “risks of harm that are not, or not entirely, offset or outweighed by the potential clinical benefits for participants” [ 11 ]. If the net risks are sufficiently low and are justified by the social value of the intervention, then the intervention is acceptable; otherwise, it is not. Lastly, the REC needs to “calculate the cumulative net risks of all the interventions…and ensure that, taken together, the cumulative net risks are not excessive” [ 10 ].

Figure 2. The Net Risk Test [ 10 ].
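The Net Risk Test can likewise be sketched as a short procedure. Wendler and Miller do not quantify risks or benefits, so the numeric 0-10 scale, the `low_threshold` standing in for “sufficiently low,” and the `cumulative_limit` standing in for “not excessive” below are hypothetical placeholders; in practice each is an REC judgment. Risk minimization (the first step) is assumed to have happened already.

```python
from dataclasses import dataclass


@dataclass
class Intervention:
    """One study intervention after risk minimization (hypothetical numeric scale)."""
    name: str
    risk: float              # residual risk after minimization, 0-10 scale
    clinical_benefit: float  # potential clinical benefit to participants, same scale
    justified_by_social_value: bool  # REC judgment for any net risk that remains


def net_risk(iv: Intervention) -> float:
    """Risk not offset by potential clinical benefit; zero when the benefit compensates."""
    return max(0.0, iv.risk - iv.clinical_benefit)


def net_risk_test(interventions, low_threshold=2.0, cumulative_limit=4.0) -> bool:
    """Apply the per-intervention and cumulative checks; both thresholds are placeholders."""
    for iv in interventions:
        nr = net_risk(iv)
        if nr == 0.0:
            continue  # clinical benefit compensates for risks: acceptable
        if nr > low_threshold or not iv.justified_by_social_value:
            return False  # net risk not sufficiently low, or not justified
    # Final step: cumulative net risks of all interventions must not be excessive
    cumulative = sum(net_risk(iv) for iv in interventions)
    return cumulative <= cumulative_limit


study = [
    Intervention("study drug", risk=3.0, clinical_benefit=4.0, justified_by_social_value=True),
    Intervention("extra blood draws", risk=1.0, clinical_benefit=0.0, justified_by_social_value=True),
    Intervention("research MRI", risk=1.5, clinical_benefit=0.0, justified_by_social_value=True),
]
print(net_risk_test(study))  # True: net risks 0, 1.0, 1.5; cumulative 2.5
```

Adding two more interventions with a net risk of 1.5 each would leave every single intervention acceptable but push the cumulative net risk to 5.5, so the final step would fail.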

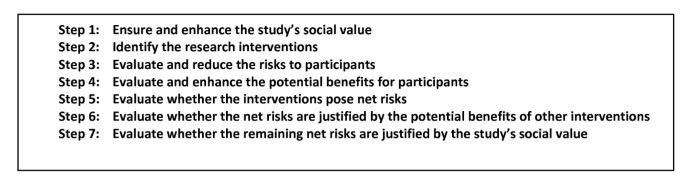

Recently, Rid and Wendler elaborated the Net Risk Test into a seven-step framework (see Figure 3) that is meant to offer sequential, “systematic and comprehensive guidance” for the risk-benefit evaluations of RECs [ 11 ]. As Figure 3 shows, most of the steps are the same as in the Net Risk Test explained above; the main addition of the framework is the first step, which is to ensure and enhance the study’s social value. In this first step, Rid and Wendler meant that RECs, at the start of their risk-benefit evaluation, ought to “ensure the study methods are sound”; “ensure that the study passes a minimum threshold of social value”; and “enhance the knowledge to be gained from the study” [ 11 ]. Only after the social value of the study has been identified, evaluated, and enhanced can RECs identify the individual interventions and go through the remaining steps, i.e., the steps of the Net Risk Test discussed earlier.

Figure 3. Seven-step framework for risk-benefit evaluations in biomedical research [ 11 ].

The procedure-level approaches and the conflation of risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making

These procedure-level approaches may be credited with providing some form of framework for the risk-benefit assessment tasks of RECs. They have also given RECs a framework that includes, and puts into perspective, certain ethical concepts that may or may not previously have been considered in REC evaluations but are now procedurally necessary. Weijer and Miller, for example, made it necessary for RECs to always consider therapeutic warrant, equipoise, and minimal risk when evaluating the risk-benefit balance of a study. Wendler and Miller, on the other hand, provided RECs with the concept of net risk. In spite of these contributions, these approaches presuppose (perhaps unwittingly) that risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making can all be conflated. In our view, this is a major error that ought to be corrected, since other problems flow from it, problems that unavoidably make the procedures unsystematic and arbitrary. To substantiate this view, we first make a necessary detour to discuss the distinction between risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making [ 4 , 5 ]. We then show how the conflation is present in the procedure-level approaches and how it leads to difficult problems.

Distinction between risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making

Decisions on benefits and risks in fact involve four activities: risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making [ 4 – 6 ]. In the current debate, these terms are used as if they were interchangeable. Because these four activities make four different demands, it must be made clear that the problem is not merely one of terminological preference; that is, it cannot be solved by simply “agreeing” to use one term over another. In risk studies, the risk-benefit task concretely demands four separate activities [ 4 , 6 ]. Hence, these terms are not interchangeable, and the activities must follow a chronological order. The distinctions among these tasks and the necessity of their chronological ordering are as follows.

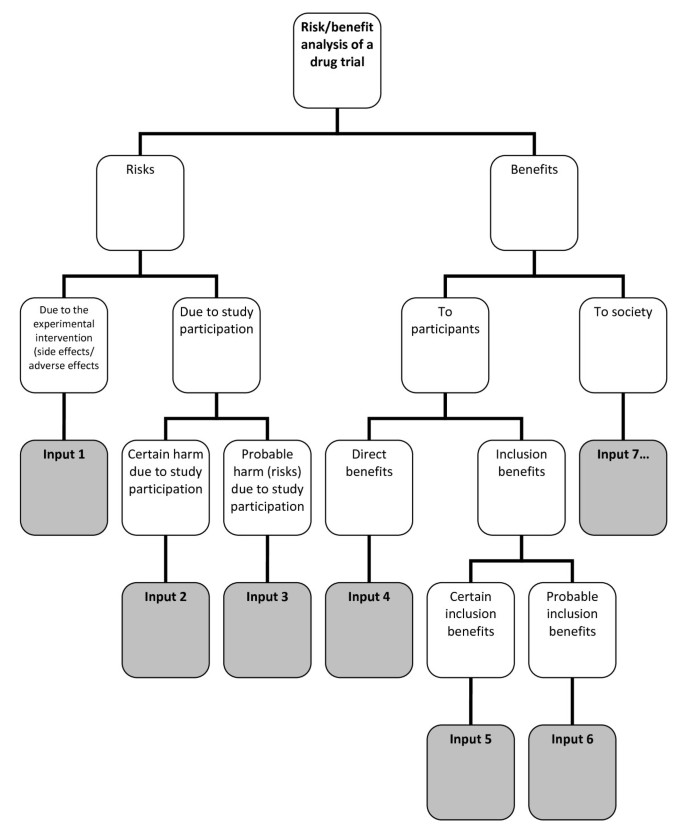

Risk-benefit analysis refers to the “systematic use of information to identify initiating events, causes, and consequences of these initiating events, and express risk (and benefit)” [ 4 ]. Thus, risk-benefit analysis refers to (1) gathering risk and benefit events, causes, and consequences; and (2) presenting this wealth of information in a systematic and comprehensive way, in accordance with the purpose for which the information is systematized in the first place. There are a number of risk analysis methods, such as fault tree analysis, event tree analysis, Bayesian networks, Monte Carlo simulation, and others [ 4 ]. The multi-criteria decision analysis (MCDA) method, mentioned by the EU Committee for Medicinal Products for Human Use (CHMP) in the Reflection Paper on Benefit-Risk Assessment Methods in the Context of the Evaluation of Marketing Authorization Applications of Medicinal Products for Human Use [ 12 ], proposes, for example, the use of a value tree in analyzing the risk-benefit balance of a drug. Adapted to drug trials, a risk-benefit analysis value tree could look like the one in Figure 4.

Figure 4. Risk-benefit analysis value tree.

In this value tree (Figure 4 ), we used King and Churchill’s typology of harms and benefits [ 1 ]. From each of the branches, the risk analyst would fill in information about a specific study. Of course, there could be more than one input under each category, depending on the nature of the drug trial being analyzed. Also, this value tree serves as an example; this is not the only way that benefits and risks may be analyzed within the context of drug trials. The best way to analyze risks and benefits within this context is something that ought to be further discussed and developed. Our aim is simply to show that a method such as a value tree is capable of encapsulating and framing the multidimensional nature of the causes and consequences of the benefits and risks of a study within one “tree.” This provides a functional risk-benefit picture from which the risks and the benefits may be evaluated, i.e., risk-benefit evaluation.
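A value tree of this kind can be represented as a nested data structure. In the minimal Python sketch below, the branch names and leaf entries are hypothetical placeholders, not the contents of Figure 4 or a reproduction of King and Churchill’s typology; each leaf records an event together with an assumed cause and consequence, in line with the definition of risk-benefit analysis given above.

```python
from dataclasses import dataclass, field


@dataclass
class Leaf:
    """A single risk or benefit entry (hypothetical content)."""
    event: str
    cause: str
    consequence: str


@dataclass
class Node:
    """A branch of the value tree; children are Nodes or Leaves."""
    label: str
    children: list = field(default_factory=list)


def paths(node, prefix=()):
    """Yield (branch path, leaf) pairs so every entry is listed with its context."""
    here = prefix + (node.label,)
    for child in node.children:
        if isinstance(child, Leaf):
            yield here, child
        else:
            yield from paths(child, here)


tree = Node("Study drug trial (hypothetical)", [
    Node("Benefits", [
        Node("Direct", [Leaf("symptom reduction", "study drug", "improved daily functioning")]),
    ]),
    Node("Risks", [
        Node("Physical", [Leaf("liver toxicity", "study drug", "hospitalization")]),
        Node("Psychological", [Leaf("anxiety", "repeated interviews", "temporary distress")]),
    ]),
])

for branch, leaf in paths(tree):
    print(" > ".join(branch), "|", leaf.event, "->", leaf.consequence)
```

Walking the tree this way yields the flat list of inputs that a subsequent evaluation would weight and score.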

Risk-benefit evaluation refers to the “process of comparing risk (and benefit) against given risk (and benefit) criteria to determine the significance of the risk (and the benefit)” [ 4 ]. There are a number of methods to evaluate benefits and risks. Within the MCDA model, for example, the “identification of the risk-benefit criteria; assessment of the performance of each option against the criteria; the assignment of weight to each criterion; and the calculation of the weighted scores at each level and the calculation of the overall weighted scores” [ 13 ] would constitute risk evaluation. Multiattribute utility theory (MAUT) is another example of an evaluation method. MAUT is basically “concerned with making tradeoffs among different goals” [ 14 ]. The theory factors in human values, defined as “the functions to use to assign utilities to outcomes” [ 14 ]. Starting from the value tree “inputs,” the evaluators assign a weight to each input. The weights establish the importance of each input according to the evaluators; this is tantamount to establishing criteria, that is, identifying and making explicit the evaluators’ definition of acceptable risk. Next, the evaluators assign numerical utility values to the options being evaluated on each input. Each utility value is multiplied by the corresponding weight, and the weighted values are summed to give the total utility value. To illustrate, if an REC wishes to evaluate a psychotropic study drug against the standard drug, it may come up with a MAUT chart like the one in Table 1.
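The weighted-sum arithmetic described above, total utility = Σ (weight × utility score) over all criteria, can be sketched in a few lines of Python. The criteria, weights, and scores below are hypothetical and do not reproduce Table 1; the weights are assumed to sum to 1 and the scores to lie on a 0-100 utility scale, where a higher score on a harm criterion means less harm.

```python
# Hypothetical weights (importance assigned by the evaluators), summing to 1
weights = {"efficacy": 0.5, "weight gain": 0.2, "sedation": 0.2, "dosing burden": 0.1}

# Hypothetical utility scores (0 = worst, 100 = best) for each option on each criterion;
# for harm criteria, a higher utility means less of the harm
scores = {
    "study drug":    {"efficacy": 70, "weight gain": 80, "sedation": 60, "dosing burden": 90},
    "standard drug": {"efficacy": 75, "weight gain": 40, "sedation": 50, "dosing burden": 70},
}


def total_utility(option_scores, weights):
    """Multiply each utility score by its criterion weight and sum the products."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights are assumed to sum to 1")
    return sum(weights[c] * option_scores[c] for c in weights)


for option, s in scores.items():
    print(f"{option}: {total_utility(s, weights):.1f}")
```

With these made-up numbers the study drug totals 72.0 and the standard drug 62.5, even though the standard drug scores higher on efficacy; the chart makes such tradeoffs, and the weights behind them, explicit.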

As with the value tree, our purpose is not to endorse only one way of doing the evaluation. Our purpose is merely to illustrate that such a decision study tool can explicitly show the following: (a) the inputs that the evaluators think must play a role in the evaluation; (b) the values of the evaluators, through the scores they have provided; (c) the importance they give to each of the factors/inputs, through the weights they have provided; (d) how the things compared (in this case, the study drug and the standard drug) fare given (a), (b), and (c); and (e) a global perspective of what (a) through (d) amount to, i.e., through the total utility value.

In the risk-benefit literature of research ethics, we find statements that such an algorithm is undesirable because it “yields one and only one verdict about the risk-benefit profile of each possible protocol” [ 11 ]. On this issue, the CHMP Reflection paper is instructive. The scores in quantitative evaluations are valuable not because of some absolute value, but because these scores can

"…focus the discussion by highlighting the divergences between the assessors and stakeholders concerning choice for weights. The benefit of such analysis methods is that the degree and nature of these divergences can be assessed, even in advance of any compound’s review. The same method might be used with the weights (e.g., of different stakeholders) and make both the differences and the consequences of those differences more explicit. If the analyses agree, decision-makers can be more comfortable with a decision. If the analyses disagree, exact sources of the differences in view will be identified, and this will help focus the discussion on those topics [ 12 ]."

Thus, the scores are meant to let the evaluators know each other’s values, similarities, differences, and divergences. The divergences and differences could help focus the REC discussion and identify problem areas in a deliberate, transparent, coherent, and less intuitive manner [ 15 ].
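To illustrate the point made in the CHMP passage, the Python sketch below scores the same two options under two sets of weights, here attributed to a sponsor and to an REC, and lists the criteria on which the weights diverge. All numbers, the attribution of weight sets, and the 0.1 divergence threshold are hypothetical assumptions chosen for illustration.

```python
# Hypothetical utility scores (0-100, higher is better) shared by both evaluators
scores = {
    "study drug":    {"efficacy": 70, "weight gain": 80, "sedation": 60},
    "standard drug": {"efficacy": 75, "weight gain": 40, "sedation": 50},
}

# Hypothetical weight sets from two evaluators (each sums to 1)
weight_sets = {
    "sponsor": {"efficacy": 0.5, "weight gain": 0.4, "sedation": 0.1},
    "REC":     {"efficacy": 0.85, "weight gain": 0.05, "sedation": 0.1},
}


def total_utility(option_scores, weights):
    """Weighted sum of utility scores."""
    return sum(weights[c] * option_scores[c] for c in weights)


def preferred(weights):
    """Option with the highest total utility under one evaluator's weights."""
    return max(scores, key=lambda opt: total_utility(scores[opt], weights))


def divergent_criteria(w1, w2, threshold=0.1):
    """Criteria whose weights differ by at least `threshold`: candidate discussion topics."""
    return sorted(c for c in w1 if abs(w1[c] - w2[c]) >= threshold)


for who, w in weight_sets.items():
    print(who, "prefers", preferred(w))
print("discuss:", divergent_criteria(weight_sets["sponsor"], weight_sets["REC"]))
```

Here the sponsor’s weights favor the study drug (73.0 vs. 58.5) while the REC’s favor the standard drug (69.5 vs. 70.75), and the divergence check points the discussion at efficacy and weight gain rather than sedation, on which both already agree.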

Risk-benefit analysis and evaluation together constitute risk-benefit assessment [ 4 ] .

Once risks and benefits have been evaluated against the evaluators’ criteria, risk evaluation allows evaluators to decide “which risks need treatment and which do not” [ 6 ]. In decision studies, amplifying benefits and modifying risks are possible only after a global understanding of them has been achieved through risk assessment. Thus, risk treatment comes after risk-benefit assessment. By risk treatment, we refer to the “process of selection and implementation of measures to modify risk…measures may include avoiding, optimizing, transferring, or retaining risk” [ 4 ]. In terms of trials, risk treatment would refer to enhancing the trial’s social value, reducing the risks to the participants, and enhancing the participants’ benefits [ 11 ]. REC members who are used to minimizing a risk as soon as it is identified may object that this process requires them to hold back until risk evaluation is done, which may seem counter-intuitive. However, “immediately cutting the risks” has also passed through a process of evaluation, albeit an intuitive and implicit one. An REC member who says that the risks of a certain procedure may be minimized, or that the risks are unnecessary given the research question, has already implicitly gone through a personal evaluation of what is and is not necessary in such a clinical trial.

After investigating the possibilities to modify risks and amplify benefits, the decision makers finally have to decide whether the risks of the trial are justified given the benefits. By decision making, we refer to the final discussion of the REC on whether benefits truly outweigh risks, i.e., given all the information provided, are the risks of the trial ethically acceptable in view of the merits of the probable benefits?

It is important to note that in the risk literature [ 4 , 13 ], the CHMP Reflection paper [ 12 ], and the CIOMS report [ 16 ], the risk-benefit tasks are assumed to be done interdependently and to reflect various values, interests, and ethical perspectives. At least for marketing authorization and marketed drug evaluation purposes, the sponsor and/or the investigator are assumed to be responsible for risk-benefit assessment and, to a certain extent, for proposing risk treatment measures. It makes sense that the sponsor should be responsible for risk analysis precisely because in this task, “experts on the systems and activities being studied are usually necessary to carry out the analysis” [ 4 ]. The regulatory authorities, on the other hand, are expected to provide guidelines for the risk-benefit analysis criteria. They also ought to provide their own version of the risk-benefit evaluation to determine areas of divergence and difference, to discuss risk treatment measures and options extensively, and finally to deliberate and decide based on all these inputs.

Conflation of the various risk-benefit tasks by the procedure-level approaches

At the most superficial level, we notice that Wendler and Rid used the terms “risk-benefit assessment” and “risk-benefit evaluation” interchangeably to refer to one and the same Net Risk Test [ 11 , 17 ]. It could be argued, however, that this is merely a misuse of terms that does not substantially affect the proposed approach. We therefore need to look deeper into the Net Risk Test to justify our claim that it conflates the various risk-benefit tasks.

In the latest, seven-step version of the Net Risk Test, what ought to be a framework for the RECs’ risk-benefit evaluation ends up incorporating aspects of risk-benefit assessment, risk treatment, and decision making. The first step, ensuring and enhancing the study’s social value, is risk treatment. The second step, identifying the research interventions, is risk analysis. The third and fourth steps, evaluating and reducing risks to participants and evaluating and enhancing potential benefits to participants, both fall under risk-benefit evaluation and risk treatment. Notably, in the Net Risk Test the evaluation and treatment of risks and benefits are not preceded by the identification of these risks and benefits; instead, the third and fourth steps are preceded only by the identification of research interventions, a necessary but incomplete step in risk-benefit analysis. The fifth step, evaluating whether the interventions pose net risks, is risk-benefit evaluation. The sixth step, evaluating whether the net risks are justified by the potential benefits of other interventions, is decision making. The last step, evaluating whether the remaining net risks are justified by the study’s social value, is also decision making. Thus, the Net Risk Test in principle encompasses all the risk-benefit tasks without taking into account the distinctions among them, their chronological order, or the division of labor among them.
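The step-to-task mapping in the preceding paragraph can be made explicit in a short Python sketch. The step labels paraphrase Figure 3 as described in the text, and the tasks assigned to each step follow the paragraph above. The `order_violations` check is a hypothetical illustration, not part of Rid and Wendler’s framework: it flags any step that performs a task earlier in the analysis, evaluation, treatment, decision sequence than a task already reached.

```python
from enum import IntEnum


class Task(IntEnum):
    """The four risk-benefit tasks in their chronological order."""
    ANALYSIS = 1
    EVALUATION = 2
    TREATMENT = 3
    DECISION = 4


# Seven-step framework (paraphrased) mapped to the task(s) each step performs
seven_steps = [
    ("ensure and enhance social value", {Task.TREATMENT}),
    ("identify research interventions", {Task.ANALYSIS}),
    ("evaluate and reduce risks", {Task.EVALUATION, Task.TREATMENT}),
    ("evaluate and enhance benefits", {Task.EVALUATION, Task.TREATMENT}),
    ("evaluate net risks", {Task.EVALUATION}),
    ("net risks vs. benefits of other interventions", {Task.DECISION}),
    ("remaining net risks vs. social value", {Task.DECISION}),
]


def order_violations(steps):
    """Return steps that perform a task earlier in the sequence than the latest task reached."""
    violations = []
    latest = Task.ANALYSIS
    for name, tasks in steps:
        if min(tasks) < latest:
            violations.append(name)
        latest = max(latest, max(tasks))
    return violations


print(order_violations(seven_steps))
```

Because the first step is already risk treatment, every later step that performs analysis or evaluation is flagged: the check reports the second through fifth steps, while a sequence that runs analysis, evaluation, treatment, decision in that order reports none.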

The Component Analysis, just like the Net Risk Test, makes the same conflation. In sorting procedures into therapeutic and non-therapeutic ones, the REC members first need to identify the procedures to assess, i.e., risk analysis. They then need to evaluate therapeutic procedures differently from non-therapeutic procedures. Therapeutic procedures have to be evaluated on whether clinical equipoise exists and whether the procedure is consistent with competent care. These two criteria may be considered ethical principles that ought to be present in the deliberation towards a decision; thus, these are decision-making tasks. Next, the REC members need to determine whether the risk of the therapeutic procedure is reasonable in relation to the potential benefits to subjects. Since REC members must answer questions of “reasonableness,” this is a decision-making task that presupposes risk-benefit evaluation. Non-therapeutic procedures, on the other hand, require the assessor to evaluate whether risks are minimized and whether they are consistent with sound scientific design. This is risk treatment. Next, the assessor needs to verify whether the risk of the non-therapeutic procedure is reasonable in relation to the knowledge to be gained. Again, this is a decision-making task that presupposes risk-benefit evaluation. Where vulnerable patients are involved, the REC members need to verify that the risk is no more than a minor increase over minimal risk; this discussion is likely to be part of the deliberation towards a decision, which also presupposes risk-benefit evaluation. Lastly, the assessor needs to decide whether both therapeutic and non-therapeutic procedures pass. This is decision making. Hence, again, we have a system that touches on each of the risk-benefit tasks without distinguishing among them.

Because the risk-benefit tasks are conflated, each of them is necessarily simplified and confused. We have seen that the various risk-benefit tasks are resource intensive (since various experts must be involved), necessarily complex (since a drug trial is rarely simple), and time consuming. This is why they are done separately. Conflating them into one system that must be completed within the few hours an REC convenes is impossible. Precisely because of this conflation, and because all the risk-benefit tasks must fit within an REC’s time restrictions, both procedure-level approaches “accomplish” the various tasks only cursorily and confusedly. As such, we cannot expect the procedure-level approaches to have the same robustness, transparency, explicitness, and coherence as the approaches of decision studies. Neither procedure-level approach matches the robustness of the value tree, for example, in expressing and illustrating the relations between the nature, causes, and consequences, as well as the uncertainties, of both risk and benefit components. Nor is either approach transparent, explicit, and rigorous enough to capture the definitions of acceptable risk and the weights and scores, reflective of the evaluators’ values and ethical dispositions, that the MAUT method makes explicit. The two procedure-level approaches simply do not require evaluators to be explicit about their evaluative values. Though risk treatment is largely present in both procedure-level approaches, in the Net Risk Test at least it is sometimes confounded with risk evaluation. In the procedure-level approaches, RECs also lack the benefit of systematically focusing the discussion on the divergences and differences that a good risk evaluation makes possible.

Lastly, because of the conflation and confusion of the various risk-benefit tasks, REC members are left to their own devices and intuition to decide what is important to discuss and what is not, and eventually, to decide whether the risks are justifiable relative to the benefits. Such a “procedure” could be categorized as a “taking into account and bearing in mind” process, a process that Dowie rightly criticized as vague, general, and plainly intuitive [ 15 ].

Recommendations

We have seen that the methods from decision studies are more robust, transparent, and coherent than either of the procedure-level approaches. This is not surprising, considering that decision studies have been used in many fields for quite some time. The robustness of decision studies methods stems from the clear distinction between risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making. In decision studies, each of the risk-benefit tasks is a system in itself that ought not to be conflated with the others. In addition, in contrast to “taking into account and bearing in mind” processes, decision studies encourage the exposure of beliefs and values [ 15 ], precisely because it is this explicitness that allows discussions to be defined and ordered. As such, we recommend the following:

RECs should make clear what their task is. RECs do not have the time and are not in the best position to do risk analyses; risk analysis must therefore be a task for the sponsor. As regards risk evaluation, RECs ought to provide their own risk-benefit evaluation to pair with the sponsor’s/investigator’s evaluation, since this is the best way to systematically point out areas of divergence and convergence. These areas would help bring order to REC discussions. Evaluating the proposed risk treatment measures, and possibly arriving at a revised or different risk treatment appraisal, should also form part of REC discussions. Lastly, it is obviously the REC’s task to make the final decision on whether the risks of the trial are justified given the benefits.

Precisely because such a clarification of tasks is essential if the REC is to function efficiently, RECs must look into how decision studies may be incorporated into their risk-benefit tasks. We will do this in our next article. For now, it is imperative to lay the theoretical groundwork for the urgency of such incorporation.

The procedure-level approaches emphasize the role of various ethical concepts, such as net risk, minimal risk, and clinical equipoise, in the risk-benefit task of RECs. These are legitimate concerns; nevertheless, RECs must know when these concepts play a role in the various risk-benefit tasks. Minimal risk, for example, is a concept that ought to be present in risk treatment and/or in the deliberation towards the final decision.

Both the Net Risk Test and the Component Analysis conflate risk-benefit analysis, risk-benefit evaluation, risk treatment, and decision making. This makes the risk-benefit task of RECs confusing, if not impossible. It is necessary to distinguish these four tasks if RECs are to be clear about what their task truly is. By looking at decision studies, we realize that RECs ought to evaluate risks and benefits, appraise risk treatment suggestions, and make the final decision. Further clarification and elaboration of these tasks would require research ethicists to look beyond the procedure-level approaches and to admit decision studies discourses into the current discussion on the risk-benefit tasks of RECs. Admittedly, this would take considerable time and research effort. Nevertheless, the discussion on the REC’s risk-benefit task would be more fruitful and democratic if research ethics opened its doors to other disciplines that could truly help clarify risk-benefit task distinctions.

1. King NM, Churchill LR: Assessing and comparing potential benefits and risks of harm. The Oxford Textbook of Clinical Research Ethics. Edited by: Emanuel E, Grady C, Crouch RA, Lie RA, Miller FG, Wendler D. 2008, Oxford University Press, New York, 514-26.

2. US National Bioethics Advisory Commission: Ethical and Policy Issues in Research Involving Human Participants. 2001.

3. Westra AE, de Beaufort ID: The merits of procedure-level risk-benefit assessment. IRB: Ethics & Human Research. 2011.

4. Aven T: Risk analysis: assessing uncertainties beyond expected values and probabilities. 2008, Wiley, Chichester.

5. Vose D: Risk analysis: a quantitative guide. 3rd edition. 2008, John Wiley & Sons, Chichester.

6. European Network and Information Security Agency: Risk assessment. 2012, Available from: http://www.enisa.europa.eu/act/rm/cr/risk-management-inventory/rm-process/risk-assessment

7. Miller P, Weijer C: Evaluating benefits and harms in clinical research. Principles of Health Care Ethics, Second Edition. Edited by: Ashcroft RE, Dawson A, Draper H, McMillan JR. 2007, Wiley & Sons.

8. Weijer C, Miller PB: When are research risks reasonable in relation to anticipated benefits? Nat Med. 2004, 10 (6): 570-573. 10.1038/nm0604-570.

9. Weijer C: When are research risks reasonable in relation to anticipated benefits? J Law Med Ethics. 2000, 28: 344-361. 10.1111/j.1748-720X.2000.tb00686.x.

10. Wendler D, Miller FG: Assessing research risks systematically: the net risks test. J Med Ethics. 2007, 33 (8): 481-486. 10.1136/jme.2005.014043.

11. Rid A, Wendler D: A framework for risk-benefit evaluations in biomedical research. Kennedy Inst Ethics J. 2011, 21 (2): 141-179. 10.1353/ken.2011.0007.

12. European Medicines Agency, Committee for Medicinal Products for Human Use: Reflection paper on benefit-risk assessment methods in the context of the evaluation of marketing authorization applications of medicinal products for human use. 2008.

13. Mussen F, Salek S, Walker S: Benefit-risk appraisal of medicines. 2009, John Wiley & Sons, Chichester.

14. Baron J: Thinking and deciding. 4th edition. 2008, Cambridge University Press, New York.

15. Dowie J: Decision analysis: the ethical approach to most health decision making. Principles of Health Care Ethics. Edited by: Ashcroft RE, Dawson A, Draper H, McMillan JR. 2007, John Wiley & Sons, 577-83.

16. Council for International Organizations of Medical Sciences (CIOMS) Working Group IV: Benefit-risk balance for marketed drugs: evaluating safety signals. 1998.

17. Rid A, Wendler D: Risk-benefit assessment in medical research: critical review and open questions. Law, Probability and Risk. 2010, 9: 151-177. 10.1093/lpr/mgq006.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6939/13/6/prepub


Acknowledgements

This study was performed in the context of the Escher project (T6-202), a project of the Dutch Top Institute Pharma, Leiden, The Netherlands.

Author information

Authors and affiliations.

Julius Center for Health Sciences and Primary Care, Utrecht University Medical Center, Heidelberglaan 100, Utrecht, 3584CX, The Netherlands

Rosemarie D L C Bernabe, Ghislaine J M W van Thiel & Johannes J M van Delden

GlaxoSmithKline, Huis ter Heideweg 62, Zeist, 3705, LZ, The Netherlands

Jan A M Raaijmakers


Corresponding author

Correspondence to Rosemarie D L C Bernabe.

Additional information

Competing interests.

RB’s PhD project is funded by the Dutch Top Institute Pharma. JR works for and holds stocks in GlaxoSmithKline. JvD and GvT have no competing interests to declare.

Authors’ contributions

All authors were involved in the design of the manuscript. RB did the research and wrote the draft and final manuscript; GvT commented on the drafts, wrote parts of the manuscript, and approved the final version; JR commented on the drafts and approved the final version of the manuscript; JvD commented on the drafts and approved the final version of the manuscript. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( http://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


About this article

Cite this article.

Bernabe, R.D.L.C., van Thiel, G.J.M.W., Raaijmakers, J.A.M. et al. The risk-benefit task of research ethics committees: An evaluation of current approaches and the need to incorporate decision studies methods. BMC Med Ethics 13, 6 (2012). https://doi.org/10.1186/1472-6939-13-6


Received: 05 April 2012

Accepted: 11 April 2012

Published: 20 April 2012

DOI: https://doi.org/10.1186/1472-6939-13-6


- Risk benefit assessment

- Ethics committee

- Decision theory

- Net risk test

- Component analysis

BMC Medical Ethics

ISSN: 1472-6939



Toward the Development of a Functional Analysis Risk Assessment Decision Tool

Neil Deochand, Rebecca R. Eldridge, Stephanie M. Peterson


Collection date 2020 Dec.

Risk-benefit analyses are essential in the decision-making process when selecting the most effective and least restrictive assessment and treatment options for our clients. Clinical expertise, informed by the client’s preferences and the research literature, is needed to weigh the potential detrimental effects of a procedure against its expected benefits. Unfortunately, safety recommendations pertaining to functional analyses (FAs) are scattered or inconsistently reported in the literature, which could lead some practitioners to misjudge the risks of an FA. We surveyed behavior analysts to determine their perceived need for a tool to evaluate risks before conducting an FA. In a sample of 664 Board Certified Behavior Analysts (BCBAs) and doctoral-level Board Certified Behavior Analysts (BCBA-Ds), 96.2% reported that a tool that evaluated the risks of proceeding with an FA would be useful for the professional practice of applied behavior analysis. We then developed an interactive risk assessment tool that suggests ways to mitigate the risks of an FA and offers recommendations for improving its validity. Subsequently, an expert panel of 10 BCBA-Ds reviewed the tool. The experts suggested that it was best suited as an instructional resource for those learning about the FA process and as a resource supporting the clinical decision making of early-career practitioners.

Electronic supplementary material

The online version of this article (10.1007/s40617-020-00433-y) contains supplementary material, which is available to authorized users.

Keywords: Clinical decision making, Ethical practice, Functional analysis, Risk assessment, Safety precautions

Demand continues to grow for Board Certified Behavior Analysts (BCBAs) and their expertise (Behavior Analyst Certification Board [BACB], 2018; Deochand & Fuqua, 2016). This demand has created a “supply” issue, in that there is a shortage of BCBAs. The BACB reports that the vast majority of certified behavior analysts have been certified for 5 years or less (BACB, n.d.). This heightened demand, coupled with the junior status of many practicing behavior analysts, creates a need for tools that will continue to support the professional practice of behavior analysis. Determining the needs of behavior analysts requires periodically conducting job analyses and expert panel reviews (Shook, Johnston, & Mellichamp, 2004), in addition to examining ongoing challenges encountered by those in the field.

Two surveys published in the Journal of Applied Behavior Analysis presented cause for concern: a majority of certified behavior analysts (BCBAs, as well as Board Certified Assistant Behavior Analysts [BCaBAs] and doctoral-level BCBAs [BCBA-Ds]) reported facing barriers to conducting functional analyses (FAs) in practice, despite endorsing FAs as the most informative tool in the functional behavior assessment arsenal (Oliver, Pratt, & Normand, 2015; Roscoe, Phillips, Kelly, Farber, & Dube, 2015). Oliver et al. (2015) reported that 62.6% of certified behavior analysts surveyed (N = 682) never or almost never used an FA. Similarly, Roscoe et al. (2015) reported that 61.9% of certified behavior analysts surveyed (N = 205) had entire client caseloads in which none or almost none of the clients had received an FA to inform treatment. The participants in the Oliver et al. (2015) survey identified insufficient time (57.4%), lack of space/materials (51.8%), lack of trained support staff (26.7%), and lack of administrative policies (24.9%) as the primary barriers to implementing FAs in practice. Roscoe et al. (2015) reported four primary barriers: lack of space (57.6%), lack of trained support staff (55.6%), lack of support for or acceptance of the procedure (46.3%), and insufficient time or client availability (42.4%). A majority of participants reported having prerequisite assessment training experience. For example, only 12% of the Oliver et al. (2015) sample reported lacking “how-to” functional behavior assessment knowledge, and 82.4% of the Roscoe et al. (2015) sample had served as the primary therapist or data collector in an FA. These data seem to suggest that practitioners have been trained in FA technologies but are not implementing these technologies because of either resource issues or a lack of administrative support.

FAs allow practitioners to better identify functionally matched treatments for problem behavior that do not rely as heavily on aversive stimuli to achieve beneficial treatment outcomes (Neef & Iwata, 1994; Pelios, Morren, Tesch, & Axelrod, 1999). Functional analysis technology continues to grow (Schlichenmeyer, Roscoe, Rooker, Wheeler, & Dube, 2013), and it is essential that practitioners keep abreast of these developments to ensure that FAs are used appropriately in practice. FAs can be conducted in a variety of environments in abridged or adjusted forms, such as single-function, latency-based (Call, Pabico, & Lomas, 2009; Thomason-Sassi, Iwata, Neidert, & Roscoe, 2011), brief (Northup et al., 1991; Wallace & Iwata, 1999), and trial-based (Bloom, Iwata, Fritz, Roscoe, & Carreau, 2011) FAs, as well as FAs using synthesized conditions (Hanley, Jin, Vanselow, & Hanratty, 2014). Some of these developments may help address the concerns practitioners raise about using FAs in practice. For example, some practitioners reported inadequate time and space or a lack of trained staff as barriers to conducting FAs. However, these reports are not consistent with the literature. Training staff to implement an FA should not be an issue if teachers with no behavior-analytic experience can be trained with minimal performance feedback (Rispoli et al., 2015). If direct-care staff (Lambert, Bloom, Kunnavatana, Collins, & Clay, 2013), educators (Rispoli et al., 2015; Wallace, Doney, Mintz-Resudek, & Tarbox, 2004), and residential caregivers (Phillips & Mudford, 2008), all with various backgrounds and education levels, can be trained to conduct FAs, then training support staff who are familiar with applied behavior analysis should not be a barrier to using FAs in our growing field.
Iwata and Dozier (2008) noted that the use of FAs can actually reduce the time it takes to receive effective treatment, because selecting a brief, latency-based, or trial-based FA can limit the amount of time individuals with problem behavior spend in assessment. It appears that practitioners are either not knowledgeable about these developments or not “connecting the dots” to see that there are strategies they can use to train staff, minimize the time required to conduct their assessment, or embed their assessment into the client’s ongoing routine (and, hence, natural environment), reducing the need for a separate space for the FA.

For the FA to gain social acceptance, it must be used in practice when it is the best-suited assessment procedure. Administrative support for conducting FAs might increase if administrators knew more about the risks and benefits of the procedure. Recently, Wiskirchen, Deochand, and Peterson (2017) concluded that safety recommendations for FAs were not easily accessible to behavior analysts, which may make it difficult for behavior analysts to judge whether it is safe to conduct an FA, or even which factors they should consider in making this judgment. Researchers have found that safety recommendations are often inconsistently reported (Weeden, Mahoney, & Poling, 2010) or scattered across numerous journals and books in the behavioral literature (Wiskirchen et al., 2017). Wiskirchen et al. (2017) advocated for a formalized risk assessment prior to conducting an FA and suggested four domains (clinical experience, behavior intensity, support staff, and environmental setting) that could be included in such a risk assessment. This “call to action” is timely, given that clinical decision making surrounding FA safety precautions does not appear to be transparent to those inside or outside the field.

In recent years, Behavior Analysis in Practice has published decision trees to help guide behavior analysts on topics ranging from selecting measurement systems (LeBlanc, Raetz, Sellers, & Carr, 2016) and treatments for escape-maintained behavior (Geiger, Carr, & LeBlanc, 2010) to considering the ethical implications of interdisciplinary partnerships (Newhouse-Oisten, Peck, Conway, & Frieder, 2017). Such tools offer guidance and resources to support the growing number of behavior analysts in the field. A similar tool for evaluating the risk of an FA, and for pointing practitioners to literature on decreasing that risk, could be helpful to the field. However, before developing the FA risk assessment tool, we first surveyed BCBAs to ascertain whether they perceived a need for such a tool. Based on those results, we developed a beta version of a comprehensive tool for evaluating risk before conducting an FA. The tool suggested strategies for reducing risks, provided references to peer-reviewed literature on how to implement such risk-reduction strategies, and provided considerations for increasing the validity of FAs in practice. Ten BCBA-Ds who had several years of experience conducting FAs and had contributed to the knowledge base on this topic reviewed the tool, and their feedback was used to develop a refined version. In this article, we describe the outcomes of the survey and the process for evaluating the tool, and we provide the most recent version of the risk assessment tool for practitioner use.

Phase 1: Needs Assessment

We surveyed behavior analysts to assess their professional opinion of the need for a formalized FA risk assessment tool. We also collected participants’ demographic data, as well as data on their experience conducting FAs.

Participants

A survey (described below) was distributed through the BACB’s mass e-mail service to BCBAs and BCBA-Ds. At the time the survey was disseminated, there were 2,088 BCBA-D and 23,582 BCBA certificants worldwide, and the survey was sent to all of them. Of those who received the invitation, 708 started the survey, 664 completed the first half (the yes/no and demographic questions), and 596 (84% of those who started) completed the entire survey, including the Likert scale items. Our sample comprised 534 BCBAs (2.26% response rate) and 130 BCBA-Ds (6.2% response rate).
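The completion and response rates in this paragraph are simple ratios of the reported counts. A minimal Python sketch that reproduces them from those counts (the helper name `rate` is ours, for illustration only):

```python
def rate(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole

# Counts reported in the Participants paragraph
certificants = {"BCBA": 23_582, "BCBA-D": 2_088}
respondents = {"BCBA": 534, "BCBA-D": 130}
started, completed_all = 708, 596

for level, n in respondents.items():
    print(f"{level} response rate: {rate(n, certificants[level]):.2f}%")

print(f"Completed the entire survey: {rate(completed_all, started):.1f}% of those who started")
```

Printed to two decimals, the BCBA-D rate is 6.23%, which the text rounds to 6.2%; likewise, 84.2% of those who started appears as 84%.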

A 33-item survey with an estimated 15- to 20-min time commitment was constructed by the authors using Qualtrics™ to assess the need for an FA risk assessment tool (see the survey in Supplemental Materials). Demographic data were collected on certification level, age, gender, and years of experience in the field. Thematically, the questions related to experience with FAs, the perceived validity and utility of the FA, and barriers to and risks of the procedure. There were 11 yes/no questions followed by 15 Likert questions. A drop-down menu with a 5-point Likert scale (strongly agree, agree, neutral, disagree, strongly disagree) was used to measure participants’ agreement or disagreement with statements regarding FA use in practice. The final two yes/no questions allowed participants to type more detailed responses about how they currently evaluate risk before conducting an FA and whether they believe colleagues do not conduct FAs even when an FA would be the best assessment option.

After accessing the survey through the e-mail link, participants could save their progress and complete the survey online at any time; after 3 months of inactivity, the responses entered up to that point were recorded (allowing data collection on partially completed surveys). Two months after the initial e-mail invitation, a second e-mail was sent so that anyone who had not yet participated could do so. This also served as a prompt for those who had started but not completed the survey in the first round. The survey was not searchable through search engine queries, so only those invited through the BACB® e-mail could participate. Additionally, to prevent multiple responses from the same IP address, the “prevent ballot box stuffing” option was enabled in Qualtrics™. Survey data were separated into participants who completed the first half of the survey and participants who completed the entire survey (defined as having no more than two unanswered responses).
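The completion criterion stated above (no more than two unanswered responses) can be written as a simple filter. This sketch assumes each respondent's answers are held in a list with unanswered items stored as `None`; that layout, the respondent IDs, and the helper `is_complete` are hypothetical and do not describe a Qualtrics™ export format.

```python
from typing import Optional, Sequence

MAX_UNANSWERED = 2  # completion criterion stated in the text

def is_complete(responses: Sequence[Optional[str]], max_unanswered: int = MAX_UNANSWERED) -> bool:
    """True if the respondent left at most `max_unanswered` items blank (None)."""
    unanswered = sum(1 for r in responses if r is None)
    return unanswered <= max_unanswered

# Hypothetical respondents with four items each; None marks an unanswered item
respondents = {
    "r1": ["agree", "neutral", "disagree", "agree"],
    "r2": ["agree", None, None, "agree"],
    "r3": [None, None, None, "agree"],
}
complete = [rid for rid, resp in respondents.items() if is_complete(resp)]
print(complete)
```

Keeping the threshold as a parameter makes it easy to check how sensitive the completer count is to the two-item allowance.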

Survey Results and Discussion

Practitioner Demographics

Participants’ mean age was 39.2 years (range 24–78 years). Table 1 shows participant demographic characteristics, including gender, certification type, age range, and years of experience in the field. Female participants made up 81.2% (n = 539) of the sample; of these, 450 were BCBAs and 89 were BCBA-Ds. Male participants represented 18.7% (n = 124) of the sample, with 84 BCBAs and 40 BCBA-Ds. One participant did not identify with the binary gender options. The largest age group (31%) was 30 to 35 years old. Approximately 75% of participants had 15 or fewer years of experience in the field, which reflects the recent exponential growth in the number of newly certified behavior analysts.

Participant demographic data (N = 664)

| Characteristic | n | % |
|---|---|---|
| Gender | | |
| Female | 539 | 81.17 |
| Male | 124 | 18.67 |
| Other | 1 | 0.15 |
| Certification | | |
| BCBA | 534 | 80.42 |
| BCBA-D | 130 | 19.58 |
| Age range (years) | | |
| 24–29 | 100 | 15.13 |
| 30–35 | 207 | 31.32 |
| 36–40 | 131 | 19.82 |
| 41–45 | 70 | 10.59 |
| 46–50 | 53 | 8.02 |
| >50 | 100 | 15.13 |
| Years of experience in the field | | |
| 0–5 | 175 | 26.36 |
| 6–10 | 195 | 29.37 |
| 11–15 | 126 | 18.98 |
| 16–20 | 76 | 11.45 |
| 21–25 | 40 | 6.02 |
| >25 | 52 | 7.83 |
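The percentages in Table 1 can be recomputed from the counts. The age-range counts sum to 661 rather than 664, and the reported age percentages match only when 661 is used as the denominator; the years-of-experience counts sum to the full 664. A short sketch (ASCII hyphens are used in the dictionary keys for convenience):

```python
# Age-range counts from Table 1
age_counts = {"24-29": 100, "30-35": 207, "36-40": 131, "41-45": 70, "46-50": 53, ">50": 100}

# These counts sum to 661, three fewer than N = 664
answered = sum(age_counts.values())
age_pct = {band: round(100 * n / answered, 2) for band, n in age_counts.items()}

# Years-of-experience counts sum to the full N = 664
experience_counts = {"0-5": 175, "6-10": 195, "11-15": 126, "16-20": 76, "21-25": 40, ">25": 52}
experience_pct = {band: round(100 * n / 664, 2) for band, n in experience_counts.items()}

print(answered, age_pct)
print(sum(experience_counts.values()), experience_pct)
```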

Practitioners’ FA Use and Perceptions of Risk

As shown in Table 2, 95% of participants had analyzed FA data, 91.9% had implemented treatment informed by FA results, 90.7% had assisted with or observed an FA, 81.8% had designed an FA, and 69.4% had supervised an FA. Despite this exposure, 78.2% of participants reported instances when they were unsure whether it was appropriate to conduct an FA. Similarly, 80.4% reported that, if they were to conduct an FA, they would be concerned about the validity of the results. A clear majority (96.2%) of participants indicated that a tool for evaluating when it would be safe to conduct an FA would be useful for the field, and 94.7% expressed a need for a tool that determined risk and offered steps to mitigate it before FA implementation. Interestingly, 82.2% reported that they would be more willing to conduct an FA if a tool existed that offered safety precautions, validity recommendations, and other considerations. Because 66 participants did not answer the final two yes/no questions, responses to those questions (n = 598) are presented separately in Table 2.

Participants’ responses to questions regarding FA experience

| Question | Yes, n (%) | No, n (%) |
|---|---|---|
| N = 664 | | |
| Have you analyzed data from an FA? | 631 (95.0) | 33 (5.0) |
| Have you implemented treatment based on the results of an FA? | 610 (91.9) | 54 (8.1) |
| Have you assisted with or observed an FA? | 602 (90.7) | 62 (9.3) |
| Have you designed an FA? | 543 (81.8) | 121 (18.2) |
| Have you supervised an FA? | 461 (69.4) | 203 (30.6) |
| Were there times you were unsure whether it was appropriate to conduct an FA? | 519 (78.2) | 145 (21.8) |
| If you were to conduct an FA, would you be concerned about the validity of the results? | 534 (80.4) | 130 (19.6) |
| Have you heard of the term “risk assessment” in relation to conducting an FA? | 550 (82.8) | 114 (17.2) |
| Do you believe there is a need for a risk assessment tool that determines the risk of conducting an FA and offers ways to reduce risk posed in an FA? | 629 (94.7) | 35 (5.3) |
| Would a tool that helps evaluate when it would be safe to conduct an FA be useful for the field of applied behavior analysis? | 639 (96.2) | 25 (3.8) |
| Would you be more likely to conduct an FA if a tool existed that helped offer safety precautions, validity recommendations, and other considerations? | 546 (82.2) | 118 (17.8) |
| n = 598 | | |
| Have you used a formalized (standard decision-making tool) risk assessment before conducting an FA? | 35 (5.8) | 563 (94.2) |
| Do you believe some behavior analysts do not conduct an FA when it is actually the best option to guide treatment? | 559 (93.5) | 39 (6.5) |

Likert scale responses are presented in a diverging stacked bar chart (see Figure 1), a format well suited to quick visual analysis of Likert data. The balance of agreement and disagreement with each statement determines the horizontal position of its bar (i.e., proportionally more disagreement than agreement shifts the bar to the right, away from the statement on the left, and vice versa). In total, 596 of the original 664 participants completed the entire survey, including the Likert questions; participants could leave up to two responses unanswered and still meet the inclusion criteria. Statements in Figure 1 are ordered by the total number of responses to each statement, because some participants left individual statements unanswered.

Percentage agreement/disagreement regarding ABA-related statements
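The bar positioning described for Figure 1 corresponds to a standard diverging stacked bar layout. The sketch below computes each segment's horizontal extent in percentage points, assuming the neutral segment is centered on zero and that agreement categories are drawn on the left (next to the statement labels), so that more disagreement pushes a bar to the right, as the text describes. It illustrates the layout rule only, not the procedure actually used to draw Figure 1, and the counts are hypothetical.

```python
from typing import Dict, List, Tuple

# Left-to-right category order; agreement sits next to the statement labels.
ORDER = ["strongly agree", "agree", "neutral", "disagree", "strongly disagree"]

def diverging_segments(counts: Dict[str, int]) -> List[Tuple[str, float, float]]:
    """Return (category, start, end) in percent, with the neutral segment centered on 0."""
    total = sum(counts[c] for c in ORDER)
    pct = [100 * counts[c] / total for c in ORDER]
    # Everything left of neutral, plus half of neutral, lies left of zero.
    start = -(pct[0] + pct[1] + pct[2] / 2)
    segments = []
    for cat, p in zip(ORDER, pct):
        segments.append((cat, start, start + p))
        start += p
    return segments

# Hypothetical counts for one statement (not survey data)
example = {"strongly agree": 10, "agree": 30, "neutral": 20, "disagree": 30, "strongly disagree": 10}
segs = diverging_segments(example)
for cat, lo, hi in segs:
    print(f"{cat:>17}: {lo:6.1f} to {hi:6.1f}")
```

A statement with more disagreement gets a left edge closer to zero, so its whole bar moves right.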

Most participants (99.2%) agreed or strongly agreed that the FA helps guide future treatment when other methods have failed. Also, a majority of the sample (78.4%) agreed or strongly agreed that FAs are the gold standard functional assessment. Interestingly, 88.4% of participants disagreed or strongly disagreed with the statement “I do not generally conduct FAs because when I do the results are usually wrong.” Most (90.8%) disagreed or strongly disagreed that an FA could only be used with individuals with developmental disabilities, and 88.3% disagreed that FAs could not be used with behaviors that were multiply controlled. Just under 73% disagreed or strongly disagreed that FAs were more of a research tool than something to be used in practice. In summary, there seemed to be strong agreement that FAs are robust and should be relied upon when needed to inform treatment.
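Percentages such as “agreed or strongly agreed” collapse the 5-point scale into broader categories. A minimal sketch of that collapse, assuming unanswered items (stored as `None`) are excluded from the denominator; the text does not state how blanks were handled, and the responses below are hypothetical.

```python
from collections import Counter
from typing import Iterable, Optional

AGREE = {"strongly agree", "agree"}
DISAGREE = {"disagree", "strongly disagree"}

def collapsed_percentages(responses: Iterable[Optional[str]]) -> dict:
    """Percent agreeing, neutral, and disagreeing, ignoring unanswered (None) items."""
    answered = [r for r in responses if r is not None]
    counts = Counter(answered)
    n = len(answered)
    agree = sum(counts[c] for c in AGREE)
    disagree = sum(counts[c] for c in DISAGREE)
    return {
        "agree": round(100 * agree / n, 1),
        "neutral": round(100 * counts["neutral"] / n, 1),
        "disagree": round(100 * disagree / n, 1),
        "n": n,
    }

# Hypothetical responses to one statement (not survey data)
sample = ["strongly agree"] * 3 + ["agree"] * 5 + ["neutral"] + ["disagree"] + [None, None]
print(collapsed_percentages(sample))
```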

A smaller majority (61.3% of participants) either agreed or strongly agreed with the statement that there is no standard way to assess the risk to the client when conducting an FA. There was strong agreement (88% of participants agreed or strongly agreed) that there is a need for more tools that guide clinical decision making as the field grows, and 84% of participants supported the need for more decision-making tools for effective clinical practice. Although a majority of the sample (87.3%) agreed or strongly agreed that they engage in a risk-benefit analysis prior to an FA, most (approximately 70%) agreed or strongly agreed that there is no tool to help decide which type of FA should be conducted. Opinions were divided regarding FAs being inherently risky, as 41.5% of participants agreed or strongly agreed with this statement, and 33.5% strongly disagreed or disagreed. In summary, there seemed to be general agreement that a decision-making tool that helped evaluate the risk of FA procedures would be useful.

Interestingly, 76.7% of participants agreed or strongly agreed that many of their peers have not conducted FAs. Just over 77% agreed or strongly agreed that some behavior analysts avoid conducting FAs because they are labor intensive, and an even larger proportion (87.2%) attributed such avoidance to a lack of prerequisite skills for implementing FAs. Only 598 participants answered both yes/no questions at the conclusion of the survey, which allowed participants to provide text offering additional context for their responses. In response to the first question, 94.2% of participants reported never using a formalized process to determine risk before conducting an FA. In response to the second, 93.5% of the sample believed that some behavior analysts do not conduct FAs even when an FA may be the best option to guide treatment.

These data suggest a need for a structured support tool to help evaluate the risks of conducting an FA. They also support including in the tool recommendations for improving the validity of the FA by designing FAs that meet the diverse needs of the clients for whom an FA is warranted and that suit the settings and contexts in which FAs are conducted. In order of priority, based on the perceived needs of the sample, our tool should (a) provide the various safety precautions for implementing an FA found in the literature, (b) offer a way to assess the risk of implementing an FA, (c) provide options to reduce risk, and (d) offer considerations regarding how to conduct a valid FA.

Phase 2: Tool Development

To develop the tool, we examined a large number of seminal articles and books describing applications of, and considerations for, the FA. We relied on our earlier review of the literature (Wiskirchen et al., 2017) and reviewed new articles published since then. The resources we used are listed in Table 3. We manually searched the journals and examined cross-citations within each reference. We selected references that pertained to safety recommendations for FAs, alternative experimental procedures that could be used instead of an FA to minimize risk, and validity issues that can arise when implementing FAs, as well as how these issues could be resolved. Earlier references were selected in preference to direct or systematic replications that did not provide further insight into variables that could influence safety. A complete list of the 83 references used for this project can be found in the references tab of the tool in the Supplemental Materials.

List of sources for the references used in the tool in alphabetical order

| Source | Number of references |
|---|---|
| Journals | |
| Advances in Learning and Behavioral Disabilities | 1 |
| Analysis and Intervention in Developmental Disabilities | 1 |
| Behavior Analysis in Practice | 4 |
| Behavior Analysis: Research and Practice | 1 |
| Behavior Modification | 3 |
| Behavioral Intervention: Principles, Models, and Practices | 1 |
| Behavioral Interventions | 3 |
| Cognitive and Behavioral Practice | 1 |
| Education and Training in Developmental Disabilities | 2 |
| Education and Treatment of Children | 4 |
| European Journal of Behavior Analysis | 1 |
| Journal of Applied Behavior Analysis | 42 |
| Journal of Autism and Developmental Disorders | 1 |
| Journal of Behavioral Education | 1 |
| Journal of Developmental and Physical Disabilities | 2 |
| Journal of Early and Intensive Behavioral Intervention | 1 |
| Journal of Positive Behavior Interventions | 1 |
| Journal of the Association for Persons With Severe Handicaps | 1 |
| Pediatric Clinics of North America | 1 |
| Research in Developmental Disabilities | 3 |
| The Behavior Analyst | 1 |
| Book/BACB | |
| BACB / Fourth and Fifth Edition Task Lists (BACB, , ) | 3 |
| Matson, J. L. (Ed.). ( ). New York, NY: Springer. | 4 chapters |

Bailey and Burch (2016) discuss components of a risk-benefit analysis and identify the following considerations for risk as it relates to problem behavior: behavior that places the client or others at risk, appropriateness of the setting, clinician expertise, sufficiently trained personnel, buy-in from stakeholders, and other liabilities to the analyst. These considerations correspond to Wiskirchen et al.’s (2017) four domains (clinical experience, behavioral intensity, FA environment, and support staff; see Figure 2), which served as our primary categories of risk for the tool. We attempted to quantify these four domains as objectively as possible. Within each domain, we created six levels from which the practitioner could select. Six levels were chosen because they conveniently captured meaningful contextual differences that could affect risk. We used a graduated blue-to-red color scale to denote low risk (blue) to high risk (red). For the clinical experience domain, we initially considered using the number of FAs completed, but behavior analysts may more easily recall the number of years they have been conducting FAs. Thus, we used time-based criteria for experience. The levels for experience ranged from 5 or more years of experience conducting FAs with different intensities and topographies to no experience conducting FAs. For the FA environment domain, the safest environment in which to conduct an FA would conceivably contain padded walls, affixed furniture, and protective equipment. The least safe environment for an FA is one that has sharp, breakable glass objects; moveable and destructible furniture and materials; and small, ingestible choking hazards.
For the support staff domain, the ideal situation would be to have a medical doctor provide written documentation stating that momentary increases in problem behavior due to FA procedures pose little to no risk to the participant, as well as to have medical staff available to monitor the client and two to three well-trained support staff available during the FA. The least ideal situation might be to have no additional staff to assist with the FA and no medical oversight. For the behavioral intensity domain, the target behaviors that pose the least risk of physical harm to the client or others are those that are low in intensity and rate, have not caused tissue or environmental damage in the past, and are unlikely to do so during an FA (e.g., off-task behavior, mild disruption, screaming). In each domain, instructions assist the user in selecting the appropriate level of risk for his or her context. For example, when evaluating behavioral intensity, the user is asked to consider how easily the target behavior can be blocked. Target behaviors that pose the most risk of physical harm to the client or others are high in intensity and rate, have previously caused injury to self or others, and are severe; they are therefore challenging to block, regardless of whether the topography is self-injurious behavior, aggression, pica, elopement, or inappropriate sexual behavior.

Microsoft screen depicting a low-risk scenario
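The six-level, blue-to-red scale used within each domain can be sketched as a linear color interpolation. This is a minimal illustration, assuming pure blue and pure red as the endpoints and evenly spaced steps between them; the tool's actual palette is not specified in the text, and `level_color` is a hypothetical helper.

```python
from typing import Tuple

N_LEVELS = 6  # six selectable levels per domain; 1 = lowest risk, 6 = highest

BLUE = (0, 0, 255)  # assumed endpoint colors (RGB); the tool's palette is not given
RED = (255, 0, 0)

def level_color(level: int) -> Tuple[int, int, int]:
    """Linearly interpolate an RGB color from blue (level 1) to red (level 6)."""
    if not 1 <= level <= N_LEVELS:
        raise ValueError(f"level must be between 1 and {N_LEVELS}")
    t = (level - 1) / (N_LEVELS - 1)
    return tuple(round(b + t * (r - b)) for b, r in zip(BLUE, RED))

for level in range(1, N_LEVELS + 1):
    print(level, level_color(level))
```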

Next, we attempted to develop a way for the four domains to be evaluated interactively. In our view, risk could not be determined by simply considering the four domains in a linear fashion; rather, risk was heightened or lessened depending on how the domains interacted with each other. For example, the risk of conducting an FA with a very intense self-injurious behavior (e.g., severe head-banging) might be lowered by conducting the evaluation in the safest environment, with highly experienced behavior analysts, medical oversight, and trained staff to assist. Conversely, risk increases when an analysis of severe self-injury is attempted with poorly trained staff and a BCBA with limited experience. We needed a mechanism that allowed for these kinds of interactions, and we determined that it could be built in Microsoft Excel®, which supports Visual Basic coding and macros for creating dynamic, interactive programs. We programmed the domains to interact dynamically, such that a higher risk in the experience domain or the behavioral intensity domain inflates the overall risk to a greater extent than the other domains do. This effect is magnified further if both of those domains are rated as higher risk, or if three or all four domains are rated as higher risk. This was accomplished by using a different formula for each risk rating. To avoid compatibility issues, we created three versions of the tool, for Microsoft, Macintosh, and Android operating systems. Using Excel® makes the tool accessible to most practitioners, because many applied behavior analysts already use Excel to create single-subject graphs (Deochand, Costello, & Fuqua, 2015). Thus, most users should not require any additional software to operate the risk analysis tool.
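The interaction rules described above (experience and intensity weigh more heavily, and the effect grows when both, or three or more domains, are rated higher risk) can be sketched in Python. The article does not publish the tool's Excel formulas, so the weights, the 1.25 multipliers, the 0 to 1 score, and the function name `overall_risk` are all hypothetical choices made only to reproduce that qualitative behavior. "Higher risk" is taken to mean levels 4–6, following the description of the Risk Assessment tab.

```python
from typing import Dict

# HYPOTHETICAL weights and multipliers: the tool's actual Excel formulas are not
# given in the text; these values only mimic the qualitative behavior described.
WEIGHTS = {"experience": 1.5, "intensity": 1.5, "environment": 1.0, "staff": 1.0}
HIGH = 4             # levels 4-6 are treated as "higher risk"
PAIR_BOOST = 1.25    # applied when experience and intensity are both higher risk
SPREAD_BOOST = 1.25  # applied when three or four domains are higher risk

def overall_risk(levels: Dict[str, int]) -> float:
    """Combine one level (1-6) per domain into an overall score between 0 and 1."""
    if set(levels) != set(WEIGHTS):
        raise ValueError("select exactly one level for each of the four domains")
    if any(not 1 <= lvl <= 6 for lvl in levels.values()):
        raise ValueError("levels must be between 1 and 6")
    # Weighted mean of the levels rescaled to [0, 1]: all 1s -> 0, all 6s -> 1
    base = sum(WEIGHTS[d] * (levels[d] - 1) / 5 for d in WEIGHTS) / sum(WEIGHTS.values())
    high = {d for d, lvl in levels.items() if lvl >= HIGH}
    multiplier = 1.0
    if {"experience", "intensity"} <= high:
        multiplier *= PAIR_BOOST
    if len(high) >= 3:
        multiplier *= SPREAD_BOOST
    return min(1.0, base * multiplier)  # cap at 1 so the score stays in [0, 1]

low = {"experience": 1, "intensity": 2, "environment": 1, "staff": 2}
severe = {"experience": 5, "intensity": 6, "environment": 3, "staff": 4}
print(round(overall_risk(low), 3), round(overall_risk(severe), 3))
```

With these placeholder values, raising only the experience level to 6 gives a score of 0.3, whereas raising only the environment level to 6 gives 0.2, mirroring the asymmetry the text describes.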

When the user opens the tool, he or she will see multiple tabs at the bottom of the Excel® sheet. The first tab, “About the Tool,” contains basic instructions for how to operate the tool. There is also an interactive help menu, opened with a macro shortcut, that answers additional questions a user may have about the tool. The second tab, “Risk Evaluation,” contains the interactive tool itself: the four domains, each with six buttons representing the levels of risk within that domain. The user clicks one button within each domain to represent the current situation with his or her client. The buttons range in color from blue (lower risk) to red (greater risk). At the same time, a “slider” below the domains incorporates the clicks from all these buttons to suggest the overall risk of the situation. Overall risk falls into one of four levels: slight, moderate, substantial, or high (see Figure 3 ).
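As a simplified illustration of how four button clicks could be collapsed onto a single slider with four labeled bands, the sketch below sums the clicks and splits the resulting range into equal-width bands. Both the unweighted sum and the equal-width cut points are assumptions for illustration; the published tool weights its domains and uses its own formulas.

```python
# Hypothetical sketch: an unweighted sum and equal-width bands are assumptions;
# the published tool weights its domains and uses its own formulas.
BANDS = ("slight", "moderate", "substantial", "high")


def slider_position(ratings: list) -> float:
    """Map four button clicks (each 1-6) onto a slider running from 0.0 to 1.0."""
    if len(ratings) != 4 or any(not 1 <= r <= 6 for r in ratings):
        raise ValueError("expected four ratings between 1 and 6")
    return (sum(ratings) - 4) / 20  # lowest total (4) -> 0.0, highest (24) -> 1.0


def risk_label(position: float) -> str:
    """Split the slider into four equal-width bands."""
    index = min(int(position * len(BANDS)), len(BANDS) - 1)
    return BANDS[index]


print(risk_label(slider_position([2, 3, 4, 3])))  # moderate
```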

Macintosh screen depicting a substantial-risk scenario

The third tab, “Risk Assessment,” contains suggestions for lowering risk specific to the concerns selected by the user, as well as a published reference the user can consult for more information. If any of the user’s clicks on the risk buttons in the second tab (Risk Evaluation) indicate higher risk (i.e., within the 4–6 range for a given domain), the matching suggestions for lowering risk and references in the third tab turn red to highlight them for the user. The fourth and fifth tabs, “Validity” and “Considerations,” offer tips for maintaining high levels of validity in the assessment. We used “if-then” scenarios to help guide the user in selecting appropriate considerations for the client. For example, after a high-risk scenario is detected, the tool might recommend reinforcing precursor behavior in an FA (Lalli, Mace, Wohn, & Livezey, 1995 ); using a structural analysis (Stichter & Conroy, 2005 ), modified choice assessment (Berg et al., 2007 ), or reinforcer or punisher assessments; or complementing a descriptive assessment with a contingency space analysis (Martens, DiGennaro, Reed, Szczech, & Rosenthal, 2008 ). Additionally, the Validity and Considerations tabs contain tables and figures from articles that offer guidance regarding contextual variables for structural analyses (Stichter & Conroy, 2005 ), idiosyncratic variables that impact FA outcomes (Schlichenmeyer et al., 2013 ), or side effects of medications (Valdovinos & Kennedy, 2004 ). The measurement decision tree by LeBlanc et al. ( 2016 ) is also offered in case the user is unsure how best to track the target behavior. Our objective was to include in the risk assessment tool the supporting materials that survey participants had requested. It is not possible to extract all suggestions from the tool and present them within this article, but the reader is encouraged to download and interact with the tool as an educational resource. It is available on the journal’s Supplemental Materials site.
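The highlighting rule above (any domain rated in the 4–6 range turns its matching suggestions red) amounts to a simple filter over the user's clicks. The sketch below assumes hypothetical domain keys and uses bracketed placeholder strings in place of the tool's actual suggestions and references, which are not reproduced in the article.

```python
# Hypothetical sketch: the placeholder text stands in for the tool's actual
# suggestions and references, which are not reproduced in the article.
HIGH_RANGE = range(4, 7)  # ratings 4-6 count as higher risk

SUGGESTIONS = {
    "experience": "[suggestions and references for clinical experience]",
    "intensity": "[suggestions and references for behavior intensity]",
    "support_staff": "[suggestions and references for support staff]",
    "environment": "[suggestions and references for the environment]",
}


def highlighted_suggestions(ratings: dict) -> dict:
    """Return the suggestion rows that would turn red for the user's clicks."""
    return {
        domain: SUGGESTIONS[domain]
        for domain, rating in ratings.items()
        if rating in HIGH_RANGE
    }


clicks = {"experience": 5, "intensity": 2, "support_staff": 4, "environment": 3}
print(sorted(highlighted_suggestions(clicks)))  # ['experience', 'support_staff']
```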

Phase 3: Expert Review and Revision

After we created the tool, we requested feedback from experts in conducting FAs to determine whether they considered (a) the selected domains appropriate for assessing risk, (b) each domain to have produced an appropriate overall risk level, (c) the suggestions for lowering risk and literature base appropriate, and (d) the suggestions for maintaining the validity of the FA appropriate.

Expert Reviewers

Experts were selected based on their prominence in the field, as indicated by publishing peer-reviewed research on FAs, operating a behavior-analytic research or training laboratory, or conducting FAs clinically at state-of-the-art intensive treatment centers. We sent an initial request to review the tool to 58 experts and received 10 full responses and 2 partial responses. We collected data on each expert’s experience. Four of the participants had more than 15 years of experience conducting FAs, three had 11 to 15 years, two had 6 to 10 years, and one had 1 to 5 years. Similarly, four had more than 15 years of experience training others to conduct FAs, three had 6 to 10 years of experience training others, and three had 1 to 5 years. After we received feedback from these experts and revised the tool, we sent the tool to the same 10 experts for a second round of review.

Guided Review Process and Results

We used a survey to obtain guided input from the experts, because it helped ensure that our experts responded to the same stimuli from the tool and therefore guided the content of their responses. The survey was created in Qualtrics. A link to the Qualtrics survey and a copy of the three versions of the tool in Excel were provided to the experts. The survey began by orienting reviewers to the tool to ensure they could use it effectively and could locate all of the features we had built. Then, we provided them with 12 specific scenario combinations of risk within the four domains and asked them to rate the appropriateness of the overall risk rating the tool produced for each combination. Most of these combinations represented more “middle-of-the-road” risk, as we assumed there would be higher agreement with recommendations at the extreme ends of the continuum; the “grayer” areas seemed to be where feedback was most warranted. We used a 3-point Likert scale with the options It’s just right, Needs to be lower risk, and Needs to be higher risk. If the expert selected anything other than It’s just right, we asked the expert to comment on why and how the risk should be different. Finally, for each of the 12 combinations, we also asked the experts to rate the recommendations for reducing risk on a 5-point Likert scale: not helpful, a little helpful, somewhat helpful, helpful, and very helpful. Following that question, the experts could type any additional comments for that scenario combination.
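Responses on the two scales described above could be tallied per scenario along the following lines. This is a hypothetical sketch: the article does not describe its analysis code, and coding the helpfulness labels ordinally from 1 to 5 and summarizing them with a median are assumptions. The response labels are taken from the survey.

```python
import statistics
from collections import Counter

# Response options as worded in the survey.
APPROPRIATENESS = ("It's just right", "Needs to be lower risk", "Needs to be higher risk")
HELPFULNESS = ("not helpful", "a little helpful", "somewhat helpful", "helpful", "very helpful")


def summarize_scenario(risk_ratings: list, helpfulness: list) -> dict:
    """Tally one scenario's risk ratings and score its helpfulness ratings 1-5."""
    unknown = set(risk_ratings) - set(APPROPRIATENESS)
    if unknown:
        raise ValueError(f"unexpected risk ratings: {unknown}")
    counts = Counter(risk_ratings)
    # Assumed ordinal coding: "not helpful" = 1 ... "very helpful" = 5.
    scores = [HELPFULNESS.index(label) + 1 for label in helpfulness]
    return {
        "counts": {option: counts[option] for option in APPROPRIATENESS},
        "share_just_right": counts[APPROPRIATENESS[0]] / len(risk_ratings),
        "median_helpfulness": statistics.median(scores),
    }
```

A median is used rather than a mean because the helpfulness labels are ordered categories rather than equally spaced quantities.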

After the initial assigned combinations, experts were asked to interact with the tool, choosing any combination of ratings for two domains, clinical experience and behavior intensity, while support staff and environment were fixed at the highest risk setting. We chose these two domains because they were the most commonly listed barriers to implementing FAs in previously published survey research (Oliver et al., 2015 ). For these combinations, we asked the experts to rate the appropriateness of the level of risk identified and, if they would change it, to provide a rationale. Experts then selected additional combinations they wanted to try out, and again we asked them only to rate the appropriateness of the risk and, if they would change it, to provide a rationale.
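With support staff and environment held at the highest risk level, the space the experts could explore is small enough to list in full. The sketch below enumerates it, assuming six levels per domain to match the six buttons in each domain; it describes the available space, not which combinations the experts actually chose, and the domain keys are hypothetical names.

```python
from itertools import product

HIGHEST = 6  # support staff and environment fixed at the highest-risk setting


def fixed_context_scenarios() -> list:
    """Every experience x intensity pairing with the other two domains held at 6."""
    return [
        {"experience": e, "intensity": i, "support_staff": HIGHEST, "environment": HIGHEST}
        for e, i in product(range(1, 7), repeat=2)
    ]


print(len(fixed_context_scenarios()))  # 36
```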

Finally, we asked open-ended questions about the four domains: whether there were any risk factors they felt we had missed, whether the Risk Reduction tab gave enough detail to carry out the suggested changes, whether the level of detail in the Risk Reduction and Considerations tabs was appropriate, how accessible the tool was, whether the weighting we used for our risk scales was appropriate, whether the tool was useful, and for whom it would be useful.

We then analyzed the experts’ ratings and commentary to determine what changes, if any, should be made to the tool. For most of the scenarios, at least 8 of 10 experts rated the risk level as It’s just right. If more than one expert indicated that something needed to be changed, the authors discussed the experts’ rationales, compared them to the feedback given by the other experts, examined the literature, and decided whether to change the tool and, if so, how. Examples of changes made included the following. Originally, we had four overall risk ratings: “minimal,” “some,” “moderate,” and “high.” Many of the reviewers felt that “minimal” risk was too narrow and that there is always risk with an FA. This produced interesting and spirited discussion among the authors, and we eventually decided to change the lowest risk category to “slight” risk. Another common piece of feedback was that some combinations produced ratings that were too low or too high. In these cases, we adjusted the formulas in the tool to produce higher or lower ratings, as the experts’ feedback indicated. Further, a few reviewers requested that we add considerations for reducing risk to the Risk Reduction tab, and we added the requested considerations. After we discussed and resolved all issues, we sent the modified tool and a second survey to the experts who had completed the first survey, asking them to review our changes and provide further commentary.
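The screening step described above, where a scenario is discussed only when more than one expert asks for a change, can be sketched as a simple count. This is a hypothetical illustration: it treats any two change requests as sufficient, regardless of direction, whereas a stricter reading of "agree on a change" would require both requests to point the same way. The flag only triggers discussion; the decision to revise rested on the authors' review of rationales and the literature.

```python
def flag_for_discussion(responses: list, threshold: int = 2) -> dict:
    """Count change requests for one scenario and flag it when enough experts ask."""
    lower = responses.count("Needs to be lower risk")
    higher = responses.count("Needs to be higher risk")
    return {
        "lower": lower,
        "higher": higher,
        # Flag only: whether to revise still depends on discussion and the literature.
        "discuss": lower + higher >= threshold,
    }


print(flag_for_discussion(["It's just right"] * 9 + ["Needs to be higher risk"]))
```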

The second survey focused only on the changes we made to the tool to address the experts’ concerns. We made two substantial changes: we changed the formulas to produce higher levels of risk, and we changed the wording of the lowest level of risk on the slider representing overall risk. We specifically asked reviewers to attend to these changes and provide feedback on them. We provided them with risk factor combinations that had prompted suggestions in our first round of reviews and asked them to evaluate whether the overall level of risk was now appropriate or inappropriate, or whether they were neutral about the change. An open-ended commentary box was also provided. We also requested feedback on the updated wording of the risk ratings, “some,” “moderate,” “high,” and “very high” risk (previously “minimal,” “some,” “moderate,” and “high” risk), using the same 3-point Likert scale and commentary box. After weighing both the Likert ratings and the qualitative comments on this topic, we revised the wording again to “slight,” “moderate,” “substantial,” and “high” risk.

After the second round of expert reviews, we made a few additional minor changes using the same rule as before: two or more reviewers had to agree on a change in order to prompt a revision. The tool presented here is the culmination of our review of the literature on risk assessments in FAs, methods of reducing risk in FAs, tool creation, two rounds of expert review, and final modifications. This tool (located in the Supplemental Materials ) is provided to assist practitioners in assessing risk and determining how risk can be reduced, and to offer references to published research that provide practitioners with more information.

General Discussion

There is an ethical need for tools that offer guidance and resources to support the growing number of behavior analysts in the field (Geiger et al., 2010 ; LeBlanc et al., 2016 ; Newhouse-Oisten et al., 2017 ). However, some decisions are complex and require multiple considerations, especially when one decision shapes the context of all the others in an interactive decision-making process. In such cases, domains of consideration may interact with one another in unique ways. For instance, our four domains (clinical experience, behavior intensity, support staff, and environmental setting) all potentially affect risk in an FA, but likely do so interactively: high risk in one domain might interact with low or moderate risk in another domain to create unique risk situations. Four domains with six levels each yield 6 × 6 × 6 × 6 = 1,296 possible combinations of risk factors. A decision tree would not be a feasible format for displaying these options, nor could one easily be presented on a journal page. Thus, we created this interactive tool so that all 1,296 possible combinations could be taken into account in an efficient and useful manner.
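The 1,296 figure follows directly from choosing one of six levels in each of four domains, and a short enumeration confirms it. As an added illustration, the sketch also counts how many combinations include at least one domain in the 4–6 range the tool treats as higher risk; borrowing that cutoff here is an assumption made for the example.

```python
from itertools import product

DOMAINS = ("clinical experience", "behavior intensity", "support staff", "environmental setting")
LEVELS = range(1, 7)  # six risk levels per domain


def all_combinations() -> list:
    """Every way of choosing one level in each of the four domains."""
    return list(product(LEVELS, repeat=len(DOMAINS)))


def count_with_any_high(combos: list, cutoff: int = 4) -> int:
    """Count combinations where at least one domain is rated in the 4-6 range."""
    return sum(1 for combo in combos if max(combo) >= cutoff)


combos = all_combinations()
print(len(combos))                  # 1296 = 6 ** 4
print(count_with_any_high(combos))  # 1215 = 1296 - 3 ** 4
```

Only 81 of the 1,296 combinations (3⁴, every domain rated 1–3) avoid the higher-risk range entirely. This illustrates why a lookup driven by formulas scales better here than a printed decision tree.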

Code 4.05 of the BACB® Professional and Ethical Compliance Code for Behavior Analysts specifies, “to the extent possible, a risk-benefit analysis should be conducted on the procedures to be implemented to reach the objective” (BACB, 2014 ). Evaluating whether assessments or treatments could adversely affect a client can be done at any point in the therapeutic relationship and should conclude with a course of action in which the benefits outweigh the risks (BACB, 2014 , p. 24). Unfortunately, our literature is rather vague about how to conduct a risk-benefit analysis and what form it should take. Each assessment or treatment procedure can produce different probabilities of success, different amounts of time to take effect, different levels of restrictiveness, and different impacts on quality of life, which make selecting assessments and treatments a delicate balancing act (Axelrod, Spreat, Berry, & Moyer, 1993 ). Code 3.01(a) specifies that functional assessments are prerequisites to behavior change programs and that the type of assessment depends on the needs of our clients, as well as their “consent, environmental parameters, and other contextual variables” (BACB, 2014 ). Because the functional assessment process is one of the main factors differentiating behavior-analytic practice from other psychological professions, it is essential that the safest and most efficacious assessment be selected for our clients’ needs.