
The rough guide to systematic reviews and meta-analyses

G Biondi-Zoccai, M Lotrionte


Corresponding author: Giuseppe Biondi-Zoccai, MD, Division of Cardiology, University of Modena and Reggio Emilia, Via Del Pozzo, 71 - 41124 Modena, Italy.

This is an open-access article distributed under the terms of the Creative Commons Attribution Non-commercial License 3.0, which permits use, distribution, and reproduction in any medium, provided the original work is properly cited, the use is non commercial and is otherwise in compliance with the license. See: http://creativecommons.org/licenses/by-nc/3.0/ and http://creativecommons.org/licenses/by-nc/3.0/legalcode .

The hierarchy of evidence-based medicine postulates that systematic reviews of homogeneous randomized trials represent one of the uppermost levels of clinical evidence. Indeed, the current overwhelming role of systematic reviews, meta-analyses and meta-regression analyses in evidence-based health care calls for a thorough knowledge of the pros and cons of these study designs, even for the busy clinician. Despite this pressing need, few succinct but thorough resources are available to guide users or would-be authors of systematic reviews. This article provides a rough guide to reading and, summarily, designing and conducting systematic reviews and meta-analyses.

Keywords: meta-analysis, meta-regression, systematic review

Introduction

“I like to think of the meta-analytic process as similar to being in a helicopter. On the ground individual trees are visible with high resolution. This resolution diminishes as the helicopter rises, and in its place we begin to see patterns not visible from the ground.”

Ingram Olkin

Systematic reviews and meta-analyses are increasingly used by researchers and practitioners, thanks to the appeal of a single piece of literature that immediately summarizes diverse data on a specific topic [1, 2]. They have become the most cited and most read article types, even surpassing randomized clinical trials, and are thus likely to play an ever greater role in the future of medicine [3, 4]. In addition, they are often published in the most prestigious international peer-reviewed journals, reaching thousands of physicians and researchers worldwide.

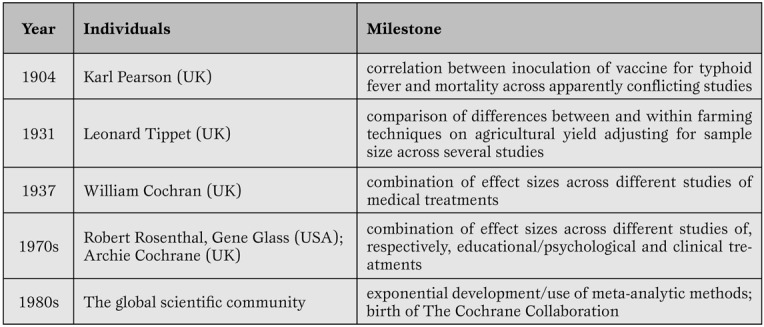

As with any other analytical and research tool with a long-standing history (Table 1), systematic reviews and meta-analyses, despite their major strengths, have several well-known potential weaknesses.

Table 1. Key milestones in systematic review and meta-analysis development.

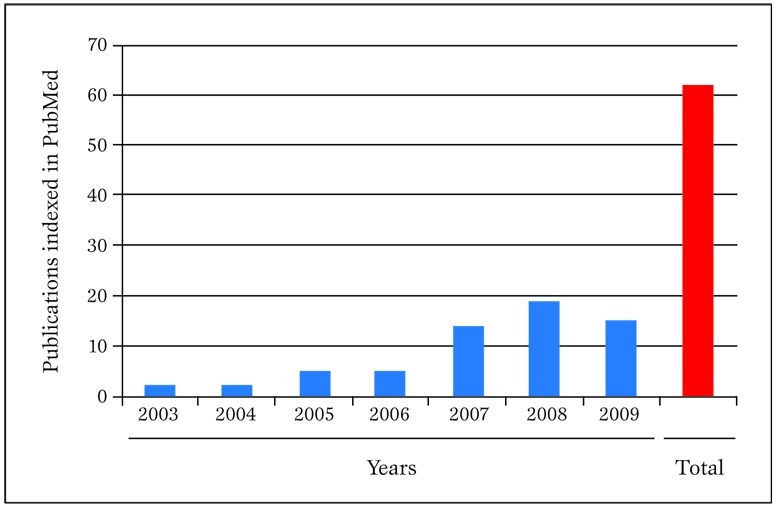

The aim of this review is to provide a concise but sound framework for the critical reading of systematic reviews and meta-analyses and, summarily, their design and conduct, stemming from our extensive experience with this type of research method ( Figure 1 ).

Figure 1. Publications in PubMed authored in the last few years by our research group concerning meta-analytic topics. PubMed was searched on 30 March 2010 with the following strategy: "(biondi-zoccai OR Zoccai) AND (meta-analys* OR metaanalys* OR metaregress* OR "meta-regression")".

Definitions

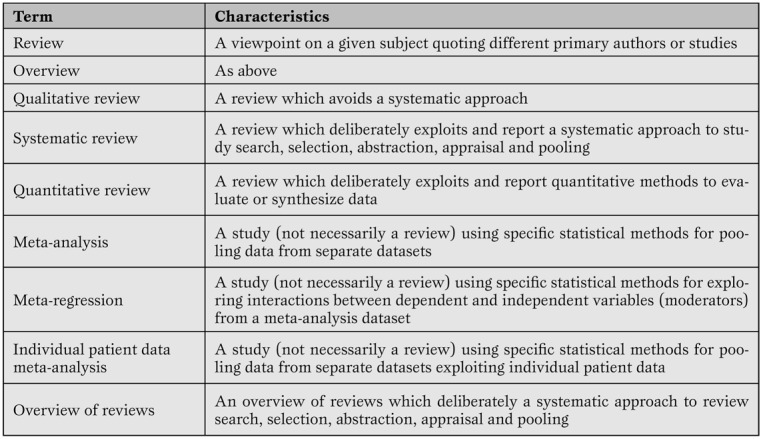

A systematic review is a review focusing on a specific clinical problem, whether therapeutic, diagnostic or prognostic (Table 2) [1, 5].

Table 2. Minimal glossary pertinent to systematic reviews and meta-analyses.

The term systematic means that all the steps underlying the reviewing process are explicitly and clearly defined, and may be reproduced independently by other researchers. Thus, a formal set of methods is applied to study search (i.e. an extensive search for primary/original studies), study selection, study appraisal, data abstraction and, when appropriate, data pooling according to statistical methods. Indeed, the term meta-analysis refers to a statistical method used to combine results from several different primary studies in order to provide more precise and valid results. Thus, not all systematic reviews include a meta-analysis, as not all topics are suitable for sound and robust data pooling. At the same time, meta-analysis can be conducted outside the realm of a systematic review (e.g. in the absence of extensive and thorough literature searches), but in such cases the results are best viewed as hypothesis-generating only. This is mainly because a meta-analysis outside the framework of a systematic review carries a major risk of publication bias.

Systematic reviews (especially when including meta-analytic pooling of quantitative data) have several unique strengths [ 1 , 5 ]. Specifically, they exploit systematic literature searches enabling the retrieval of the whole body of evidence pertaining to a specific clinical question.

Their standardized methods for search, evaluation and selection of primary studies ensure reproducibility and objectivity. Individual primary studies undergo a thorough evaluation of internal validity, together with identification of their risk of bias. All too often, the greatest strength of systematic reviews lies precisely in their ability to pinpoint weaknesses and fallacies in apparently sound primary studies [6].

Quantitative synthesis by means of meta-analysis also substantially increases statistical power, and yields narrower confidence intervals for statistical inference. The assessment of the effect of an intervention (exposure or diagnostic test) across different settings and times provides estimates and inferences with much greater external validity. The larger sample sizes often achieved by systematic reviews may even offer ample room for testing post-hoc hypotheses or exploring the effects in selected subgroups [ 7 ]. Clinical and statistical variability (i.e. heterogeneity and inconsistency) may be exploited by advanced statistical methods such as meta-regression, possibly offering the opportunity to test novel and hitherto unprecedented hypotheses [ 8 ]. Finally, meta-regression methods can be used to perform adjusted indirect comparisons or network meta-analyses [ 9 ].
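The adjusted indirect comparison mentioned above can be illustrated with a minimal sketch of the Bucher method [9]. It assumes two independent meta-analyses that share a common comparator B and report pooled log odds ratios with standard errors; the function name and input values are hypothetical and chosen only for illustration.

```python
import math
from statistics import NormalDist
from typing import Tuple


def bucher_indirect(log_or_ab: float, se_ab: float,
                    log_or_cb: float, se_cb: float,
                    level: float = 0.95) -> Tuple[float, float, float, float]:
    """Adjusted indirect comparison of A vs C through a common comparator B.

    Inputs are pooled log odds ratios (A vs B, C vs B) with their standard
    errors. Returns (log OR A vs C, SE, lower CI, upper CI) on the log scale.
    """
    # Subtracting the two relative effects cancels out the shared comparator B
    log_or_ac = log_or_ab - log_or_cb
    # The two meta-analyses are independent, so their variances add
    se_ac = math.sqrt(se_ab ** 2 + se_cb ** 2)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return log_or_ac, se_ac, log_or_ac - z * se_ac, log_or_ac + z * se_ac


# Hypothetical inputs: OR 0.80 for A vs B and OR 0.90 for C vs B
est, se, lo, hi = bucher_indirect(math.log(0.80), 0.10, math.log(0.90), 0.12)
print(f"Indirect OR A vs C: {math.exp(est):.2f} "
      f"(95% CI {math.exp(lo):.2f} to {math.exp(hi):.2f})")
```

Because the variances of the two direct comparisons add, the indirect estimate is always less precise than either of them, which is one reason indirect evidence is usually interpreted more cautiously.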

Limitations

Drawbacks of systematic reviews and meta-analyses are also substantial, and should never be dismissed [1]. Since the early critiques of meta-analysis as “an exercise in mega-silliness” that inappropriately “mixes apples and oranges” [10], there has been ongoing debate on when meta-analytic pooling should be pursued (e.g. in case of statistical homogeneity and consistency) and when, conversely, the reviewer should refrain from meta-analysis (e.g. in case of severe statistical heterogeneity [as shown by p values <0.10 at the χ² test] or significant statistical inconsistency [as shown by I² values >50%]) [11].
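The heterogeneity and inconsistency thresholds quoted above can be computed with a short sketch of Cochran's Q test and the I² statistic of Higgins et al. [11]. It assumes each study contributes an effect estimate on an additive scale (e.g. a log odds ratio) and its standard error. To avoid external dependencies, the chi-squared tail probability is implemented here for integer degrees of freedom only; in practice a statistics library would normally be used. All names and inputs are hypothetical.

```python
import math
from typing import List, Tuple


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-squared distribution with integer df."""
    if x <= 0:
        return 1.0
    # Start from the closed forms for df = 2 (even) or df = 1 (odd)
    if df % 2 == 0:
        sf, k = math.exp(-x / 2), 2
    else:
        sf, k = math.erfc(math.sqrt(x / 2)), 1
    # Recurrence: Q(k + 2) = Q(k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1)
    while k < df:
        sf += math.exp((k / 2) * math.log(x / 2) - x / 2 - math.lgamma(k / 2 + 1))
        k += 2
    return sf


def heterogeneity(effects: List[float], ses: List[float]) -> Tuple[float, int, float, float]:
    """Cochran's Q, its degrees of freedom, the p value and I^2 (in %)."""
    if len(effects) < 2:
        raise ValueError("at least two studies are needed")
    weights = [1 / se ** 2 for se in ses]                # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variability beyond what chance (df) would explain
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, chi2_sf(q, df), i2


# Hypothetical log odds ratios and standard errors from four trials
q, df, p, i2 = heterogeneity([-0.5, -0.1, 0.3, -0.8], [0.2, 0.25, 0.3, 0.2])
print(f"Q = {q:.2f} on {df} df, p = {p:.3f}, I2 = {i2:.0f}%")
```

Under the thresholds cited in the text, p < 0.10 or I² > 50% would argue against naive pooling.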

Whereas Canadian authors suggest that systematic reviews and meta-analyses of homogeneous randomized controlled trials represent the apex of the evidence-based medicine pyramid (discounting the role of n-of-1 randomized trials) [12], others maintain that very large and simple randomized clinical trials offer several premium features, and should always be preferred, when available, to systematic reviews [13].

It is also all too common to retrieve only a few studies focusing on a given clinical topic, or to find studies of such low quality that including or even discussing them in a systematic review may appear misleading. In such cases, the meta-analysis itself can be misleading. Nonetheless, key insights may still be gained by exploring sources of heterogeneity, stratified analyses, and meta-regression. This drawback is closely associated with the major threat to meta-analysis validity called the small study effect (also, albeit inappropriately, called small study bias or publication bias) [1]. Indeed, it is commonly recognized, especially in large datasets, that small primary studies are more likely to be reported, published and cited if their results are significant. Conversely, small non-significant studies often fail to reach publication or dissemination, and may thus be easily missed, even after thorough literature searches. Combining results from these “biased” small studies with those of larger studies (which are usually published even if negative or non-significant) may shift summary effect estimates away from the true value. Unfortunately, despite the availability of several graphical and analytical tests [14], small study effects (which encompass publication bias) are potentially always present in a systematic review and should never be disregarded.
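One widely used analytical test for small study effects is Egger's regression test, sketched minimally below as an illustration (the text does not prescribe a specific test). It regresses the standard normal deviate (effect / SE) on precision (1 / SE) by ordinary least squares; an intercept far from zero suggests funnel-plot asymmetry. The function name and data are hypothetical.

```python
import math
from typing import Dict, List


def egger_test(effects: List[float], ses: List[float]) -> Dict[str, float]:
    """Egger's regression test for funnel-plot asymmetry (OLS on SND vs precision)."""
    n = len(effects)
    if n < 3:
        raise ValueError("at least three studies are needed")
    x = [1 / se for se in ses]                        # precision
    z = [y / se for y, se in zip(effects, ses)]       # standard normal deviate
    x_bar, z_bar = sum(x) / n, sum(z) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxz = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z))
    slope = sxz / sxx
    intercept = z_bar - slope * x_bar                 # asymmetry estimate
    residual_ss = sum((zi - intercept - slope * xi) ** 2 for xi, zi in zip(x, z))
    sigma2 = residual_ss / (n - 2)                    # residual variance
    se_intercept = math.sqrt(sigma2 * (1 / n + x_bar ** 2 / sxx))
    return {"intercept": intercept, "slope": slope,
            "se_intercept": se_intercept, "t": intercept / se_intercept,
            "df": n - 2}


# Hypothetical log odds ratios: the smallest trials show the largest effects
print(egger_test([3.0, 1.5, 1.0, 0.9], [1.0, 0.5, 0.25, 0.2]))
```

The t statistic would then be compared with a t distribution on n - 2 degrees of freedom (for example with SciPy, if available). With few studies the test has low power, so a non-significant result does not rule out small study effects.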

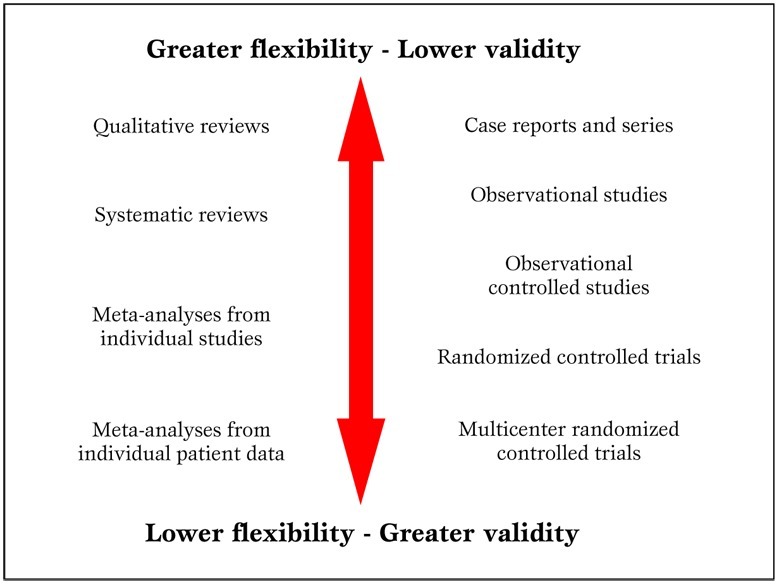

In addition, despite the worldwide scope of current research, reviewers all too often focus only on English-language studies, thus unduly restricting their search and excluding potentially important works (e.g. from China or Japan). Another common critique is that systematic reviews and meta-analyses are not original research (Figure 2).

Figure 2. Parallel hierarchy of scientific studies in clinical research. Modified from Biondi-Zoccai et al. (2).

Readers are free to form their own informed opinion on this specific issue. Nonetheless, the main yardstick for judging a systematic review should be its novelty and usefulness for the reader, not whether it appears as original or secondary research [2].

Finally, a burning issue is whether results from large systematic reviews and meta-analyses can ever be applied to the single individual under our care. This question cannot be answered once and for all, and judgment should always be employed when considering the application of meta-analytic results to a specific patient. Unless proven otherwise by a significant test of interaction, all patients should be considered likely to similarly benefit from a specific treatment or diagnostic strategy [ 12 ].
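A test of interaction between two subgroups can be sketched as a z test on the difference between their estimates, assuming the subgroups are independent and the estimates are on an additive scale such as log odds ratios. The function name and subgroup values are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def interaction_test(est1: float, se1: float, est2: float, se2: float) -> Tuple[float, float]:
    """z test for the difference between two independent subgroup estimates.

    Returns (z statistic, two-sided p value).
    """
    diff = est1 - est2
    se_diff = math.sqrt(se1 ** 2 + se2 ** 2)   # independent subgroups: variances add
    z = diff / se_diff
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# Hypothetical subgroups: OR 0.70 in diabetics vs OR 0.95 in non-diabetics
z, p = interaction_test(math.log(0.70), 0.12, math.log(0.95), 0.10)
print(f"z = {z:.2f}, p for interaction = {p:.3f}")
```

With these hypothetical inputs the subgroup odds ratios look quite different, yet the test of interaction does not reach p < 0.05, so by the reasoning above both subgroups would still be considered likely to benefit similarly.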

Appraising primary studies, systematic reviews and meta-analyses

Unfortunately, publication of a systematic review in a peer-reviewed journal is not definitive evidence of its internal validity and usefulness for the clinical practitioner or researcher [15]. Peer review is far from accomplished at judging or improving the quality of scientific reviews, and many examples of poor or unsuccessful peer review can easily be found. However, just as “democracy is the worst form of government except all those other forms that have been tried” (Sir Winston Churchill), peer review is the “worst” method used to evaluate scientific research except all other methods that have been tried so far. This applies to clinical research products in general, and hence also to systematic reviews and meta-analyses. Thus, provided that meta-analyses are accurately and thoroughly reported, the burden of quality appraisal lies largely, as usual, in the eye of the beholder (i.e. the reader).

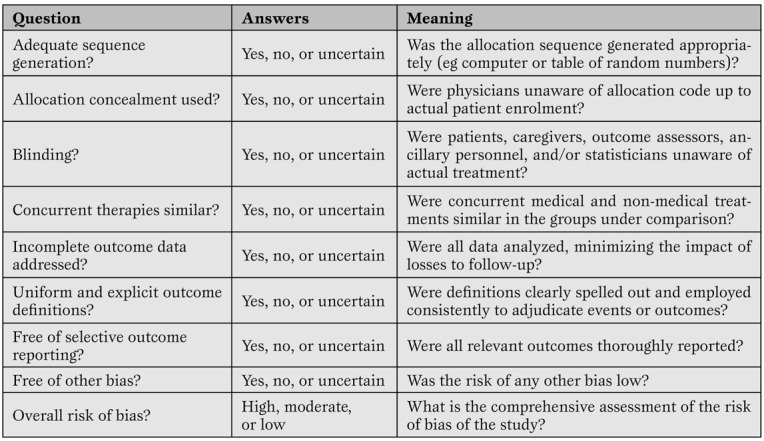

Assessment of primary research studies as well as systematic reviews and meta-analyses should be based on their internal validity and then, provided it is reasonably adequate, on their results and external validity [12]. Whereas interpretation of the results and external validity of any research endeavor depends on the specific context of application, and is thus best left to the individual judgment of the reader or decision-maker, internal validity can be evaluated in a rather structured and validated way. Recent guidance on the appraisal of the risk of bias in primary research studies within the context of a systematic review has been provided by The Cochrane Collaboration, and includes a separate assessment of the risk of selection, performance, attrition and adjudication bias (Table 3) [16].

Table 3. A modified version of The Cochrane Collaboration risk of bias assessment tool for the appraisal of primary studies (16).*

Other valid and complementary approaches, targeted for specific study designs, have been proposed by advocates of evidence-based medicine methods, and include the Jadad score, the Delphi list, and the Megens-Harris list [ 12 ]. Nonetheless, even external validity can be formally evaluated by focusing on the population included, the control group, and result interpretation. Finally, established statistical criteria are available to determine whether a given intervention is effective and similar explicit criteria can inform on the presence of clinical significance.

The quality of a systematic review and meta-analysis depends on several factors, in particular the quality of the primary pooled studies.

Nonetheless, reporting quality (e.g. compliance with current guidelines on drafting and reporting a meta-analysis, such as the Preferred Reporting Items for Systematic reviews and Meta-Analyses [PRISMA] or Meta-analysis Of Observational Studies in Epidemiology [MOOSE] statements) should be clearly distinguished from internal validity [17, 18]. Internal validity can be low even in well-reported reviews, whereas a poorly reported systematic review and meta-analysis can rarely be judged highly valid. The assessment of the internal validity of a review is quite complex and based on several assumptions, including study search and appraisal, methods for data pooling, and approaches to the interpretation of study findings.

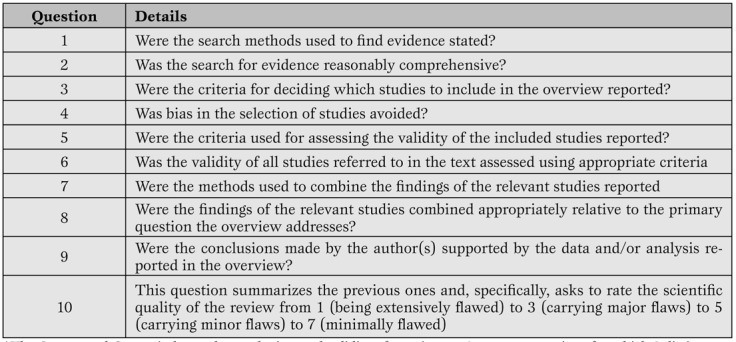

However, useful guidance was provided by Oxman and Guyatt with their well validated instrument ( Table 4 ) [ 19 ].

Table 4. Oxman and Guyatt index for the appraisal of reviews (19).*

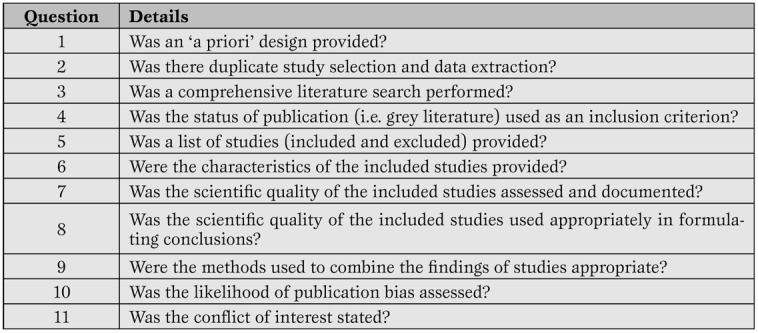

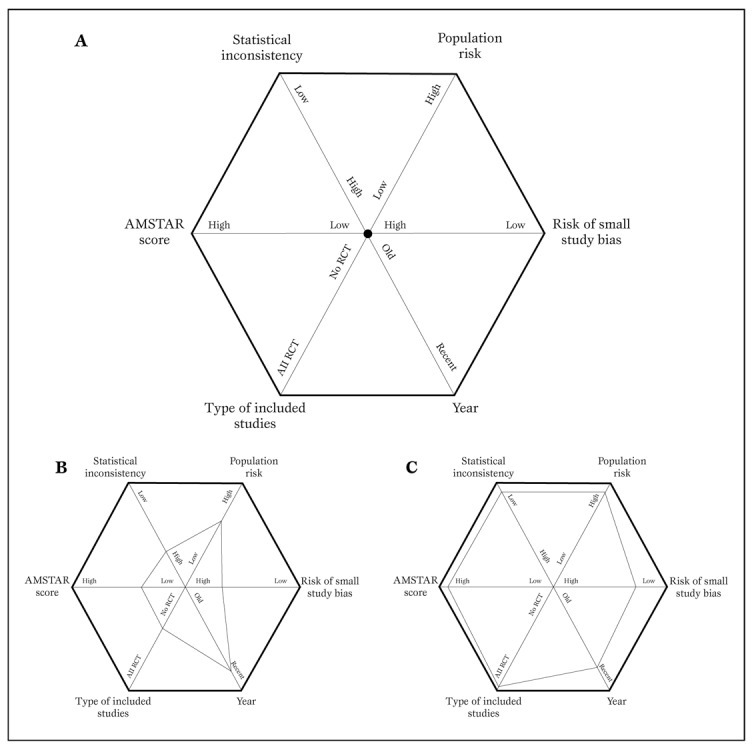

More recently, other investigators have proposed further tools for the evaluation of systematic reviews, such as A Measurement Tool to Assess Systematic Reviews (AMSTAR) and the Veritas plot, which await further validation (Table 5, Figure 3) [20, 21, 22].

Table 5. The AMSTAR tool for the appraisal of systematic reviews (20-21).*

Figure 3. Typical diagram used to generate a Veritas plot (panel A) (22). Using this tool, a low-quality meta-analysis is represented by a hexagon with a smaller area (panel B), whereas a high-quality meta-analysis is shown as a hexagon with a larger area (panel C).
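Because the Veritas plot ranks meta-analyses by the area of a hexagon, the underlying arithmetic can be sketched as the area of a radar polygon. This sketch assumes six domain scores plotted on equally spaced axes from a common centre; the scores and the 0 to 1 scale below are hypothetical placeholders, not the actual Veritas criteria or scoring [22].

```python
import math
from typing import Sequence


def radar_area(scores: Sequence[float]) -> float:
    """Area of the polygon formed by plotting scores on equally spaced radial axes.

    Adjacent vertices and the centre form triangles with angle 2*pi/n, so
    each triangle has area 0.5 * r_i * r_(i+1) * sin(2*pi/n).
    """
    n = len(scores)
    if n < 3:
        raise ValueError("need at least three axes")
    angle = 2 * math.pi / n
    return 0.5 * math.sin(angle) * sum(
        scores[i] * scores[(i + 1) % n] for i in range(n))


# Hypothetical six-domain scores on a 0-1 scale (higher = better)
low_quality = [0.3, 0.5, 0.2, 0.4, 0.3, 0.5]
high_quality = [0.9, 1.0, 0.8, 0.9, 1.0, 0.9]
print(radar_area(low_quality), radar_area(high_quality))
```

Note that the area depends on the order of the axes, because only adjacent scores are multiplied; a fixed axis order is therefore needed for areas to be comparable across meta-analyses.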

For busy critical care physicians wishing for a quicker approach to appraising systematic reviews, a simple two-step approach can be proposed. This is a simplification of the evidence-based medicine approach to the evaluation of sources of clinical evidence, but is nonetheless quite helpful [12]. Evidence-based medicine is “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients” [12]. It must also be stressed that “the practice of evidence-based medicine requires integration of individual clinical expertise and patient preferences with the best available external clinical evidence from systematic research” [12]. Systematic reviews and meta-analyses, if well conducted and reported, reduce our effort in searching for, evaluating, and summarizing the evidence.

But the burden of deciding what to do with the evidence obtained for the care of our individual patient remains ours.

Thus, the first step in appraising a systematic review and meta-analysis is to try to answer the question: can I trust it? In other words, is this review internally valid, and does it provide a precise and largely unbiased answer to its scientific question? Providing a definitive assessment of the internal validity of a systematic review is not a simple task, and largely depends on the methods employed and reported for study search, selection, abstraction, appraisal and, if appropriate, pooling. Even if we conclude that a given meta-analysis is internally valid, we still face the second step in its evaluation, which focuses on the external validity of the study. In other words, can I apply the review results to the case I am facing or will shortly face? More basically, it answers the question: so what? Decisions on external validity are highly subjective and may change depending on the clinical, historical, logistical, cultural or ethical context of the evaluator. Nonetheless, systematic reviews and meta-analyses can improve our appraisal of the external validity of any given clinical intervention by suggesting an overall clinical efficacy (or lack of it).

It is clear that the assessment of the internal validity, and even more importantly the external validity, of any research endeavor, is highly subjective, and thus we leave ample room for the reader to enjoy and appraise them on his or her own.

One issue deserves further emphasis: only collective, constructive but critical post-publication evaluation of scientific studies can place them, and keep them, in the appropriate context for their correct and practical use by clinical researchers and practitioners.

Systematic reviews and meta-analyses: do it yourself

Even those not planning to conduct a systematic review may gain further insight into this clinical research method by understanding the key steps involved in its design, conduct and interpretation [5].

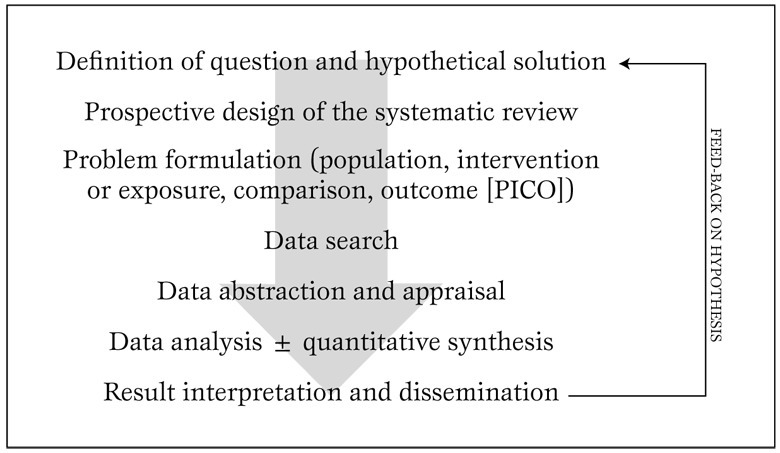

Briefly, a systematic review should always stem from a specific clinical question. Even if the experienced reviewer can probably guess the answer to this question informally, the goal of the systematic review is to confirm or refute that hypothesis in a formal and structured way. With this goal in mind, the review should be designed prospectively and in as much detail as possible, to avoid conscious or unconscious manipulation of methods or data (Figure 4).

Figure 4. Typical algorithm for the design and conduct of a systematic review. Modified from Biondi-Zoccai et al. (5).

The next steps are very important, as they define the boundaries of the reviewing effort. Specifically, the reviewer should spell out the population of interest, the intervention or exposure to be appraised, the comparison(s) or comparator(s), and the outcome(s). The acronym PICO is often used to remember this approach. As an example, we could be interested in conducting a systematic review focusing on a population (P) of diabetics with coronary artery disease undergoing coronary artery bypass grafting, with the intervention (I) of interest being the administration of bivalirudin as an anticoagulant, the comparator (C) being unfractionated heparin, and the outcomes (O) defined as in-hospital rates of death, myocardial infarction, stroke, or major bleeding (including bleeding requiring repeat surgery).
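The PICO breakdown can be captured in a small data structure that also drafts a Boolean search string, combining synonyms with OR within each element and AND across population, intervention and comparator. This is a minimal sketch: the class, its method and the search terms are hypothetical, and real strategies add controlled vocabulary (e.g. MeSH) and database-specific syntax, ideally with help from experienced library staff.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PicoQuestion:
    """A clinical question broken down into PICO elements (synonyms per element)."""
    population: List[str]
    intervention: List[str]
    comparator: List[str]
    outcomes: List[str] = field(default_factory=list)

    def boolean_query(self) -> str:
        """OR synonyms within each element, AND across P, I and C.

        Outcomes are left out here, as they are often omitted from search
        strings to keep the search sensitive.
        """
        def block(terms: List[str]) -> str:
            # Quote multi-word phrases so they are searched as phrases
            quoted = [f'"{t}"' if " " in t else t for t in terms]
            return "(" + " OR ".join(quoted) + ")"
        return " AND ".join(block(terms) for terms in
                            (self.population, self.intervention, self.comparator))


question = PicoQuestion(
    population=["coronary artery bypass", "CABG"],
    intervention=["bivalirudin"],
    comparator=["heparin"],
    outcomes=["death", "myocardial infarction", "stroke", "major bleeding"],
)
print(question.boolean_query())
```

In this hypothetical example the restriction to diabetic patients is not part of the search string and would instead be applied when screening retrieved records against the eligibility criteria.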

After these preliminary steps, the actual review begins with a thorough and extensive search, encompassing several databases (not only MEDLINE/PubMed) with the help of library personnel experienced in literature searches, preferably also including conference abstracts and bibliographies of pertinent articles and reviews. When a list of potentially pertinent citations has been retrieved, these should be assessed and included or excluded based on criteria stemming directly from the PICO approach used to define the clinical question. Study appraisal also includes a formal evaluation of the validity and risk of bias of primary studies, whereas data abstraction, generally performed by at least two independent reviewers with divergences resolved by consensus, provides the quantitative data that will eventually be pooled with meta-analysis [16].
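Dual independent screening can be supported by a small helper that lists the records on which two reviewers disagree (to be resolved by consensus) and summarizes their agreement with Cohen's kappa. Cohen's kappa is an addition for illustration, not something the text prescribes, and the function name and decision labels are hypothetical.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def screening_agreement(reviewer_a: Sequence[str],
                        reviewer_b: Sequence[str]) -> Tuple[float, List[int]]:
    """Cohen's kappa for two reviewers' decisions plus the indices they disagree on."""
    if len(reviewer_a) != len(reviewer_b) or not reviewer_a:
        raise ValueError("both reviewers must rate the same, non-empty list of records")
    n = len(reviewer_a)
    observed = sum(a == b for a, b in zip(reviewer_a, reviewer_b)) / n
    count_a, count_b = Counter(reviewer_a), Counter(reviewer_b)
    # Agreement expected by chance from each reviewer's marginal frequencies
    categories = set(count_a) | set(count_b)
    expected = sum(count_a[c] * count_b[c] for c in categories) / n ** 2
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)
    disagreements = [i for i, (a, b) in enumerate(zip(reviewer_a, reviewer_b)) if a != b]
    return kappa, disagreements


# Hypothetical title/abstract screening decisions for six records
a = ["include", "include", "exclude", "exclude", "include", "exclude"]
b = ["include", "exclude", "exclude", "exclude", "include", "include"]
kappa, to_discuss = screening_agreement(a, b)
print(f"kappa = {kappa:.2f}; records to resolve by consensus: {to_discuss}")
```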

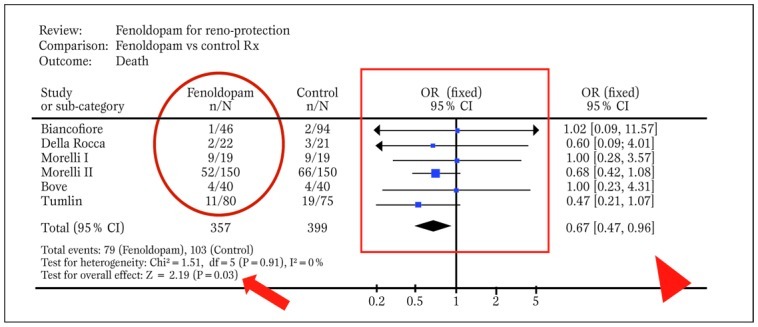

Indeed, provided that studies are relatively homogeneous and consistent, meta-analytic methods are employed to combine effect estimates from single studies into a single summary effect estimate, with corresponding p values and confidence intervals for the effect (Figure 5).
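The pooling step can be made concrete with a minimal sketch of inverse-variance fixed-effect meta-analysis of odds ratios. This is one illustrative method among several (RevMan, for instance, also offers Mantel-Haenszel, Peto and random-effects pooling), it does not reproduce Figure 5, and the trial counts are hypothetical. It also shows why pooling increases statistical power: the pooled standard error is one over the square root of the summed weights, so it shrinks as studies are added.

```python
import math
from statistics import NormalDist
from typing import Dict, List, Tuple

# Each study: (events_treated, total_treated, events_control, total_control)
Study = Tuple[int, int, int, int]


def log_odds_ratio(study: Study) -> Tuple[float, float]:
    """Log odds ratio and its standard error (Woolf's method) from a 2x2 table."""
    e_t, n_t, e_c, n_c = study
    a, b, c, d = e_t, n_t - e_t, e_c, n_c - e_c
    if min(a, b, c, d) == 0:
        raise ValueError("zero cell: a continuity correction or another method is needed")
    return math.log((a * d) / (b * c)), math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)


def fixed_effect_pool(studies: List[Study], level: float = 0.95) -> Dict[str, float]:
    """Inverse-variance fixed-effect pooling of log odds ratios."""
    estimates = [log_odds_ratio(s) for s in studies]
    weights = [1 / se ** 2 for _, se in estimates]
    pooled = sum(w * y for w, (y, _) in zip(weights, estimates)) / sum(weights)
    se = 1 / math.sqrt(sum(weights))       # shrinks as studies are added
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    z = pooled / se
    return {
        "or": math.exp(pooled),
        "ci_low": math.exp(pooled - z_crit * se),
        "ci_high": math.exp(pooled + z_crit * se),
        "p": 2 * (1 - NormalDist().cdf(abs(z))),
        "log_or": pooled,
        "se": se,
    }


# Hypothetical trials
trials = [(10, 100, 20, 100), (15, 150, 24, 150), (8, 80, 12, 80)]
print(fixed_effect_pool(trials))
```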

Figure 5. Typical forest plot generated by RevMan from a systematic review with meta-analytic pooling of dichotomous outcomes (df=degrees of freedom; E=expected cases; O=observed cases; OR=odds ratio). The solid oval highlights event counts in one of the groups under comparison, the solid box graphically shows individual and pooled point effect estimates with 95% confidence intervals, the arrowhead indicates the exact pooled point effect estimate with 95% confidence intervals (CI), the arrow shows the p value for effect, and the dashed oval highlights the p value for statistical heterogeneity and the measure of statistical inconsistency (I²). Modified from Landoni et al. (30).

In many cases results may lead reviewers to go back to the original research question and revise their working hypothesis. The last step relies on the interpretation and dissemination (possibly through publication in a peer-reviewed journal) of the results.

More advanced analytical issues

Unless studies are extensively powered (e.g. with >1000 patients enrolled or with selective recruitment of very high-risk subjects), low event rates are often found in primary research studies. This may lead to null counts in one or more of the groups undergoing comparison in a controlled trial, generating severe computational hurdles. Indeed, most statistical methods used for meta-analytic pooling require that at least one event has occurred in each study group.

When this is not the case in one or more of the groups under comparison, bias may be introduced by the common practice of adding 0.25 or 0.50 to each group without events [23]. Moreover, when no event has occurred in any group, comparisons are even more challenging and data from such underpowered studies cannot be pooled with standard meta-analytic methods, as the variance of the effect estimate approaches infinity. Nonetheless, other approaches (e.g. risk difference, continuity correction or Peto method) can still be used in case of total zero event trials.
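The sensitivity of zero-event studies to the continuity correction can be sketched as follows, assuming the correction is added to all four cells of a 2x2 table only when a cell is zero (one common convention among several). The risk difference is shown alongside because it remains defined when one or both arms have no events. Function names and counts are hypothetical.

```python
import math
from typing import Tuple


def corrected_log_or(e_t: int, n_t: int, e_c: int, n_c: int,
                     cc: float = 0.5) -> Tuple[float, float]:
    """Log odds ratio and SE, adding `cc` to every cell only if a cell is zero."""
    a, b, c, d = e_t, n_t - e_t, e_c, n_c - e_c
    if min(a, b, c, d) == 0:
        a, b, c, d = a + cc, b + cc, c + cc, d + cc
    return math.log((a * d) / (b * c)), math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)


def risk_difference(e_t: int, n_t: int, e_c: int, n_c: int) -> Tuple[float, float]:
    """Risk difference and its SE; defined even when one or both arms have no events."""
    p_t, p_c = e_t / n_t, e_c / n_c
    return p_t - p_c, math.sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)


# Hypothetical trial with no events in the treated arm
for cc in (0.25, 0.5):
    log_or, _ = corrected_log_or(0, 50, 3, 50, cc)
    print(f"cc = {cc}: OR = {math.exp(log_or):.3f}")
print("RD =", risk_difference(0, 50, 3, 50)[0])
```

The corrected odds ratio changes substantially with the arbitrary choice of correction, which is the bias the text warns about. For a trial with no events in either arm, the risk difference is 0 with a standard error of 0, so inverse-variance weighting breaks down and weights such as Mantel-Haenszel weights are needed instead.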

Even when all groups undergoing comparison in a specific study have one or more events, there may be a risk of biased estimates and of alpha error (i.e. the risk of erroneously rejecting a null hypothesis that is true) [1].

Indeed, minor differences in populations with few and rare events may provide nominally significant results (e.g. p=0.048) which however appear quite unstable. In such cases, we recommend reliance on the combined use of p values and 95% confidence intervals, or even making use of 99% confidence intervals. In other cases, a useful rule of thumb is to trust only meta-analyses reporting on at least 100 pooled events per group under comparison.
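The recommendation above can be illustrated with a nominally significant pooled estimate whose 95% confidence interval excludes no effect while its 99% interval does not, together with the 100-events-per-group rule of thumb. This is a sketch using normal approximations on the log odds ratio scale; function names and values are hypothetical.

```python
from statistics import NormalDist
from typing import Tuple


def confidence_interval(estimate: float, se: float, level: float) -> Tuple[float, float]:
    """Normal-approximation confidence interval on an additive (e.g. log OR) scale."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z * se, estimate + z * se


def two_sided_p(estimate: float, se: float) -> float:
    """Two-sided p value of a z test against no effect (0 on the log scale)."""
    return 2 * (1 - NormalDist().cdf(abs(estimate / se)))


def enough_events(events_per_group: Tuple[int, ...], threshold: int = 100) -> bool:
    """Rule of thumb from the text: at least 100 pooled events in every group."""
    return all(e >= threshold for e in events_per_group)


# Hypothetical pooled log odds ratio that is only nominally significant
log_or, se = -0.40, 0.20
ci95 = confidence_interval(log_or, se, 0.95)
ci99 = confidence_interval(log_or, se, 0.99)
print(two_sided_p(log_or, se), ci95, ci99, enough_events((42, 61)))
```

Exponentiating the interval limits gives the corresponding confidence interval for the odds ratio.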

The risk of erroneously accepting a null hypothesis despite it being false (i.e. the beta error) is also common in systematic reviews and meta-analyses, especially when they include few studies with low event counts. This lack of statistical power (defined as 1-beta) is even more common with meta-regression analyses, which are usually underpowered because of few included studies and regression to the mean [ 7 ].
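Statistical power (1 - beta) for a pooled estimate can be approximated from the assumed true effect and the pooled standard error. This minimal sketch assumes a two-sided z test on a log odds ratio with a known standard error; it ignores between-study heterogeneity, which would reduce power further in random-effects analyses. The function name and values are hypothetical.

```python
import math
from statistics import NormalDist


def meta_analysis_power(true_log_or: float, pooled_se: float, alpha: float = 0.05) -> float:
    """Approximate power (1 - beta) of a two-sided z test on a pooled log odds ratio."""
    nd = NormalDist()
    z_crit = nd.inv_cdf(1 - alpha / 2)
    shift = abs(true_log_or) / pooled_se
    # Probability that the test statistic falls beyond either critical value
    return nd.cdf(shift - z_crit) + nd.cdf(-shift - z_crit)


# Hypothetical true OR of 0.80, with a few small trials (large SE) vs many trials
for pooled_se in (0.25, 0.08):
    print(f"SE {pooled_se}: power = {meta_analysis_power(math.log(0.80), pooled_se):.2f}")
```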

Surrogates may provide an important contribution to clinical research design, by increasing statistical power and offering insights in more than one clinical dimension. However, surrogate end-points (e.g. >25% increase in serum creatinine from baseline values to identify subclinical renal injury) may be less clinically relevant than hard clinical end-points (death or permanent need for hemodialysis) [ 12 ].

Usually, only surrogates that have a direct impact on patient well-being and are independently associated with hard clinical end-points should be accepted for the design of clinical research studies. In any case, a study reaching significance on surrogate end-points alone, but missing significance on analysis of hard end-points, should be considered hypothesis-generating or, at best, underpowered.

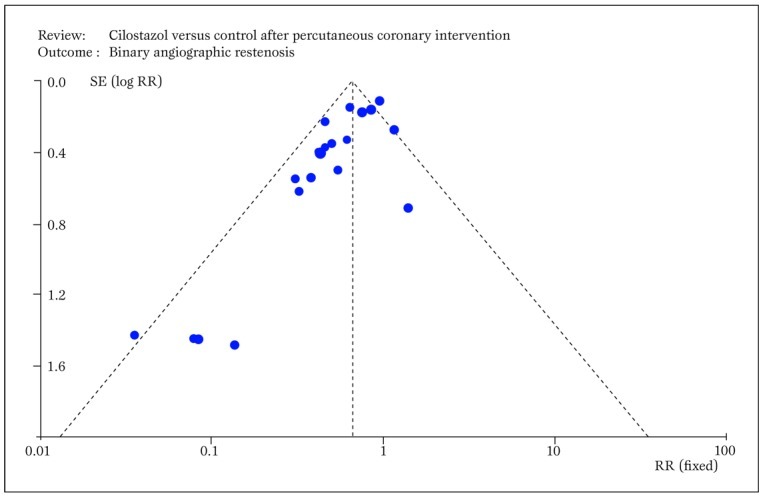

Small study bias always potentially threatens the results of a systematic review, as this type of bias applies to all clinical topics and research study designs (Figure 6) [24].

Figure 6. Typical funnel plot generated by RevMan showing small study bias, i.e. the asymmetric distribution of effect sizes as a function of study precision, with selective publication of only positive small studies (RR=relative risk; SE=standard error). Modified from Biondi-Zoccai et al. (24).

Although this bias may be less significant in more recent and well financed drug or device studies (e.g. fenoldopam), in older or less well funded studies publication bias may profoundly undermine the results of a systematic review.

This has been all too evident in studies examining the role of acetylcysteine for the prevention of contrast-associated nephropathy [ 25 ], but is also obvious in other commonly prescribed agents. Another major threat to the validity of a systematic review, as to any other research endeavor, lies in conflicts of interest and study funding. It is well known that reviewers with underlying financial conflicts of interest are more likely to conclude in favor of the intervention benefiting the source of financial gains [ 26 ].

Whether these facts should lead to a more critical reading of their work or a comprehensive re-evaluation of their whole research project is best left at the readers’ discretion, but this should also take into account the overall internal validity (e.g. blinding of patients, physicians, adjudicators, and analysts) of the work.

Conclusions

Systematic reviews and meta-analyses offer powerful methods to evaluate the clinical effects of health interventions, especially when directly applied to real world clinical practice (such as in the Best Evidence Topic [BET] approach) [ 27 ].

More collaborative efforts are however required to design, conduct and disseminate individual patient data meta-analyses in an unbiased and rigorous manner [ 28 , 29 ].

Source of support: Nil.

Conflict of interest: None declared.

Cite as: Biondi-Zoccai G, Lotrionte M, Landoni G, Modena MG. The rough guide to systematic reviews and meta-analyses. HSR Proceedings in Intensive Care and Cardiovascular Anesthesia 2011; 3(3): 161-173

- Egger M, Smith GD, Altman DG. Systematic reviews in health care: meta-analysis in context. 2nd ed. BMJ Publishing Group, London. 2001.

- Biondi-Zoccai GG, Agostoni P, Abbate A. Parallel hierarchy of scientific studies in cardiovascular medicine. Ital Heart J. 2003;4:819–820.

- Patsopoulos NA, Analatos AA, Ioannidis JP. Relative citation impact of various study designs in the health sciences. JAMA. 2005;293:2362–2366. doi: 10.1001/jama.293.19.2362.

- Glasziou P, Djulbegovic B, Burls A. Are systematic reviews more cost-effective than randomised trials? Lancet. 2006;367:2057–2058. doi: 10.1016/S0140-6736(06)68919-8.

- Biondi-Zoccai GG, Testa L, Agostoni P. A practical algorithm for systematic reviews in cardiovascular medicine. Ital Heart J. 2004;5:486–487.

- Lau J, Ioannidis JP, Schmid CH. Summing up evidence: one answer is not always enough. Lancet. 1998;351:123–127. doi: 10.1016/S0140-6736(97)08468-7.

- Thompson SG, Higgins JP. How should meta-regression analyses be undertaken and interpreted? Stat Med. 2002;21:1559–1573. doi: 10.1002/sim.1187.

- Biondi-Zoccai GG, Abbate A, Agostoni P, et al. Long-term benefits of an early invasive management in acute coronary syndromes depend on intracoronary stenting and aggressive antiplatelet treatment: a metaregression. Am Heart J. 2005;149:504–511. doi: 10.1016/j.ahj.2004.10.026.

- Bucher HC, Guyatt GH, Griffith LE, Walter SD. The results of direct and indirect treatment comparisons in meta-analysis of randomized controlled trials. J Clin Epidemiol. 1997;50:683–691. doi: 10.1016/s0895-4356(97)00049-8.

- Glass G. Primary, secondary and meta-analysis of research. Educ Res. 1976;5:3–8.

- Higgins JP, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327:557–560. doi: 10.1136/bmj.327.7414.557.

- Guyatt G, Rennie D, Meade M, Cook D. Users' guides to the medical literature. A manual for evidence-based clinical practice. AMA Press, Chicago. 2002.

- Cappelleri JC, Ioannidis JP, Schmid CH, et al. Large trials vs meta-analysis of smaller trials: how do their results compare? JAMA. 1996;276:1332–1338.

- Peters JL, Sutton AJ, Jones DR, et al. Comparison of two methods to detect publication bias in meta-analysis. JAMA. 2006;295:676–680. doi: 10.1001/jama.295.6.676.

- Opthof T, Coronel R, Janse MJ. The significance of the peer review process against the background of bias: priority ratings of reviewers and editors and the prediction of citation, the role of geographical bias. Cardiovasc Res. 2002;56:339–346. doi: 10.1016/s0008-6363(02)00712-5.

- Higgins JPT, Green S. Cochrane Handbook for Systematic Reviews of Interventions. The Cochrane Collaboration, Oxford. 2008.

- Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700. doi: 10.1136/bmj.b2700.

- Stroup DF, Berlin JA, Morton SC, et al. Meta-analysis of observational studies in epidemiology: a proposal for reporting. Meta-analysis Of Observational Studies in Epidemiology (MOOSE) group. JAMA. 2000;283:2008–2012. doi: 10.1001/jama.283.15.2008.

- Oxman AD, Guyatt GH. Validation of an index of the quality of review articles. J Clin Epidemiol. 1991;44:1271–1278. doi: 10.1016/0895-4356(91)90160-b.

- Shea BJ, Grimshaw JM, Wells GA, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10. doi: 10.1186/1471-2288-7-10.

- Shea BJ, Bouter LM, Peterson J, et al. External validation of a measurement tool to assess systematic reviews (AMSTAR). PLoS One. 2007;2:e1350. doi: 10.1371/journal.pone.0001350.

- Panesar SS, Rao C, Vecht JA, et al. Development of the Veritas plot and its application in cardiac surgery: an evidence-synthesis graphic tool for the clinician to assess multiple meta-analyses reporting on a common outcome. Can J Surg. 2009;52:137–145.

- Golder S, Loke Y, McIntosh H. Room for improvement? A survey of the methods used in systematic reviews of adverse effects. BMC Med Res Methodol. 2006;6:3. doi: 10.1186/1471-2288-6-3.

- Biondi-Zoccai GG, Lotrionte M, Anselmino M, et al. Systematic review and meta-analysis of randomized clinical trials appraising the impact of cilostazol after percutaneous coronary intervention. Am Heart J. 2008;155:1081–1089. doi: 10.1016/j.ahj.2007.12.024.

- Biondi-Zoccai GG, Lotrionte M, Abbate A, et al. Compliance with QUOROM and quality of reporting of overlapping meta-analyses on the role of acetylcysteine in the prevention of contrast associated nephropathy: case study. BMJ. 2006;332:202–209. doi: 10.1136/bmj.38693.516782.7C.

- Barnes DE, Bero LA. Why review articles on the health effects of passive smoking reach different conclusions. JAMA. 1998;279:1566–1570. doi: 10.1001/jama.279.19.1566.

- Dunning J, Prendergast B, Mackway-Jones K. Towards evidence-based medicine in cardiothoracic surgery: best BETS. Interact Cardiovasc Thorac Surg. 2003;2:405–409. doi: 10.1016/S1569-9293(03)00191-9.

- De Luca G, Gibson CM, Bellandi F, et al. Early glycoprotein IIb-IIIa inhibitors in primary angioplasty (EGYPT) cooperation: an individual patient data meta-analysis. Heart. 2008;94:1548–1558. doi: 10.1136/hrt.2008.141648.

- Burzotta F, De Vita M, Gu YL, et al. Clinical impact of thrombectomy in acute ST-elevation myocardial infarction: an individual patient-data pooled analysis of 11 trials. Eur Heart J. 2009;30:2193–2203. doi: 10.1093/eurheartj/ehp348.

- Landoni G, Biondi-Zoccai GG, Tumlin JA, et al. Beneficial impact of fenoldopam in critically ill patients with or at risk for acute renal failure: a meta-analysis of randomized clinical trials. Am J Kidney Dis. 2007;49:56–68. doi: 10.1053/j.ajkd.2006.10.013.


Systematic Reviews and Evidence Syntheses: Planning a Review


Planning your review

The planning stage of a systematic review is essential for avoiding the 5 most common mistakes in conducting systematic reviews and for increasing the likelihood of future publication. The steps outlined on this page are often conducted concurrently.

- 5 most common mistakes in conducting systematic reviews

Formulating a question

- A well-built clinical question

A well-built clinical question is the cornerstone of evidence-based health care and has been incorporated into the production of systematic reviews. Formulating a question is the first and most essential step in preparing a protocol and will inform the whole review process, including:

- Choosing the most appropriate review method

- Providing the focus for the scoping searches

- Identifying the key concepts that will structure the search strategy

- Establishing the inclusion / exclusion criteria for study selection

- Structuring the data extraction and analysis methods

A question formulation tool or framework can be useful for breaking down a topic area into its key components. Different tools suit different types of review:

- PICO = Population, Intervention, Comparison, Outcome

- PECO = Population, Exposure, Comparison, Outcome

- PIRT = Population, Index Test, Reference Test, Target Condition

- PCC = Population, Concept, Context

- SPiDER = Sample, Phenomenon of Interest, Design, Evaluation, Research Type

You should choose the most appropriate tool for your type of question. If your topic isn't easily structured using one of these tools, you can remove elements or adapt the headings to make it work for your question. The key aspect of formulating a question is that you can take a topic and separate it into its component parts.

Example Questions

Here are 2 examples of how we might break down 2 different questions on the same topic of delayed antibiotic prescription:

1. How do delayed antibiotic prescriptions for respiratory infections affect patient & service outcomes compared to immediate/no prescription?

- Population = Patients with respiratory infections

- Intervention = Delayed antibiotic prescription

- Comparison = Immediate or no prescription

- Outcomes = Time to recovery, repeat GP appointment, emergency hospitalisation, patient satisfaction...

2. What are the barriers and facilitators to implementing delayed antibiotic prescription to patients with respiratory infections attending primary care?

- Population = Patients with respiratory infections

- Concept = Barriers / Facilitators to implementing delayed antibiotic prescription

- Context = Primary Care

Scoping searches

Once you have defined your question you can start the process by conducting scoping searches. These are often simple searches for the key elements of your question. Using the example question above, we might search for:

Delayed prescribing AND antibiotics AND respiratory tract infections
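As the search develops, each key concept usually becomes a block of alternative terms joined with OR, and the blocks are joined with AND. The sketch below shows that structure in Python. The helper names (`quote`, `build_query`) are hypothetical, the phrase quoting is an added assumption, and the synonym lists are purely illustrative; it is not a validated search strategy.

```python
def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concepts: dict[str, list[str]]) -> str:
    """OR the synonyms within each concept, then AND the concept blocks together."""
    blocks = []
    for name, terms in concepts.items():
        if not terms:
            raise ValueError(f"concept {name!r} has no search terms")
        joined = " OR ".join(quote(t) for t in terms)
        # Parenthesise multi-term blocks so AND/OR precedence is explicit.
        blocks.append(f"({joined})" if len(terms) > 1 else joined)
    return " AND ".join(blocks)


# Concepts from the delayed-prescribing example (one term each for now).
concepts = {
    "intervention": ["delayed prescribing"],
    "drug": ["antibiotics"],
    "population": ["respiratory tract infections"],
}
print(build_query(concepts))
# "delayed prescribing" AND antibiotics AND "respiratory tract infections"
```

Adding synonyms later (for example a second term in the "drug" list) turns that concept into a parenthesised OR block without changing the rest of the query.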

Step 1: Locating existing systematic reviews

Identifying existing reviews or review protocols has three main purposes:

To verify that your question hasn't already been answered – published review

To ascertain whether researchers are in the process of conducting a review on the same question – review protocol

To identify related systematic reviews that must be accessed so you can review the reference lists for identifying relevant primary studies.

Useful databases for identifying systematic reviews in health care

- Cochrane Database of Systematic Reviews (CDSR)

- Epistemonikos

- PubMed: search PubMed and filter the results using the Article Type filter "Systematic Review"

- PROSPERO: International prospective register of systematic reviews - the protocols of systematic reviews in health care are often registered on PROSPERO. For further suggestions on where to search, look at the next section ‘Developing and registering your protocol’

Step 2: Locating key studies

If you have existing knowledge of the topic area, you will already be aware of key studies that would provide background information for the review or be included in your final analysis. If not, you might want to conduct initial searches of a key database for your subject area (e.g. PubMed/Medline or Embase for healthcare) to inform the development of your protocol and search strategies. This will help with:

Refining the scope of your review – if you have too many or too few results on your initial searches, you might want to look again at your question

Informing the development of a search strategy – by reading relevant articles you’ll develop an understanding of the variability in terminology which will need to be incorporated into your final search

Writing the contextual / background information for your protocol

Developing and registering your protocol

Once you've formulated your question and established the need for a new review on your topic, you will need to start developing a protocol to guide the conduct of your review. This will cover inclusion/exclusion criteria, screening methods, risk of bias assessment and data analysis.

PRISMA-P provides guidance on the information that needs to be reported in a protocol for a systematic review. Systematic review protocol templates are available from PROSPERO. Scoping review templates are available from the Joanna Briggs Institute and OSF websites.

It is good practice to register your protocol prospectively, and in many cases this is a requirement for future publication of the review. You may want to explore further why prospective registration of systematic reviews makes sense.

Traditionally, PROSPERO has been the main repository for prospectively registering systematic review protocols in health care. More recently, the development of alternative evidence reviews (e.g. scoping reviews) and the growth of preprint archives and collaborative open research platforms have increased the options available to researchers for prospective registration. As a result, you may need to explore further where to prospectively register a systematic review.

Putting your team together

As the lead on a systematic review project, you will need to assemble a team of people around you that can provide methodological and topic support for your review. The size of the team around you will depend on the complexity of the question and potential volume of research that will need to be screened, quality assessed and synthesised. You will need people who can:

Develop, peer review and conduct searches

Double up on screening studies, extracting data and quality assessment

Provide methodological expertise in statistical, thematic or realist analysis

Supply topic / clinical expertise

Further Reading

- Muka T, Glisic M, Milic J, Verhoog S, Bohlius J, Bramer W, et al. A 24-step guide on how to design, conduct, and successfully publish a systematic review and meta-analysis in medical research. Eur J Epidemiol. 2020;35(1):49-60.

- Gough D, Oliver S, Thomas J, editors. An Introduction to Systematic Reviews. 2nd ed. Los Angeles: SAGE; 2017.

- Higgins JP, Thomas J, Chandler J, Cumpston M, Li T, Page M, et al. Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane; 2023. Available from: www.training.cochrane.org/handbook.


- Last Updated: Oct 24, 2024 2:58 PM

- URL: https://libguides.bodleian.ox.ac.uk/systematic-reviews


© Bodleian Libraries 2021. Licensed under a Creative Commons Attribution 4.0 International Licence

Systematic Reviews and Meta Analysis


Systematic review Q & A

What is a systematic review?

A systematic review is a guided filtering and synthesis of all available evidence addressing a specific, focused research question, generally about a specific intervention or exposure. The use of standardized, systematic methods and pre-selected eligibility criteria reduces the risk of bias in identifying, selecting and analyzing relevant studies. A well-designed systematic review includes clear objectives, pre-selected criteria for identifying eligible studies, an explicit methodology, a thorough and reproducible search of the literature, an assessment of the validity or risk of bias of each included study, and a systematic synthesis, analysis and presentation of the findings of the included studies. A systematic review may include a meta-analysis.

For details about carrying out systematic reviews, see the Guides and Standards section of this guide.

Is my research topic appropriate for systematic review methods?

A systematic review is best deployed to test a specific hypothesis about a healthcare or public health intervention or exposure. By focusing on a single intervention or a few specific interventions for a particular condition, the investigator can ensure a manageable results set. Moreover, examining a single or small set of related interventions, exposures, or outcomes will simplify the assessment of studies and the synthesis of the findings.

Systematic reviews are poor tools for hypothesis generation: for instance, to determine what interventions have been used to increase the awareness and acceptability of a vaccine or to investigate the ways that predictive analytics have been used in health care management. In the first case, we don't know what interventions to search for and so have to screen all the articles about awareness and acceptability. In the second, there is no agreed-upon set of methods that make up predictive analytics, and health care management is far too broad. The search will necessarily be incomplete, vague and very large all at the same time. In most cases, reviews without clearly and exactly specified populations, interventions, exposures, and outcomes will produce results sets that quickly outstrip the resources of a small team and offer no consistent way to assess and synthesize findings from the studies that are identified.

If not a systematic review, then what?

You might consider performing a scoping review. This framework allows iterative searching over a reduced number of data sources and does not require individual studies to be assessed for risk of bias. The framework includes built-in mechanisms to adjust the analysis as the work progresses and more is learned about the topic. A scoping review won't help you limit the number of records you'll need to screen (broad questions lead to large results sets), but it may give you a means of dealing with a large set of results.

This tool can help you decide what kind of review is right for your question.

Can my student complete a systematic review during her summer project?

Probably not. Systematic reviews are a lot of work. Once you count creating the protocol, building and running a quality search, collecting all the papers, evaluating the studies that meet the inclusion criteria, and extracting and analyzing the summary data, a well-done review can require dozens to hundreds of hours of work spread over several months. A systematic review also requires subject expertise, statistical support and a librarian to help design and run the search. Be aware that librarians sometimes have queues for their search time, so it may take several weeks to complete and run a search. Moreover, all guidelines for carrying out systematic reviews recommend that at least two subject experts screen the studies identified in the search. The first round of screening can consume 1 hour per screener for every 100-200 records. A systematic review is a labor-intensive team effort.
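The screening rate quoted above turns into a quick workload estimate. Because every record is screened independently by each screener, the per-screener time is multiplied by the number of screeners. A minimal Python sketch; the function name and the 3,000-record yield are hypothetical, and the 100-200 records per hour range is taken from the text.

```python
def screening_hours(n_records: int, n_screeners: int = 2,
                    records_per_hour: tuple[int, int] = (100, 200)) -> tuple[float, float]:
    """Estimate total person-hours for the first (title/abstract) screening round.

    Assumes every screener reads every record (independent dual screening) at a
    rate between records_per_hour[0] and records_per_hour[1].
    Returns (optimistic, pessimistic) totals.
    """
    slowest, fastest = records_per_hour
    optimistic = n_records / fastest * n_screeners
    pessimistic = n_records / slowest * n_screeners
    return optimistic, pessimistic


# Hypothetical search yield of 3,000 records after de-duplication.
low, high = screening_hours(3000)
print(f"{low:.0f}-{high:.0f} person-hours")  # 30-60 person-hours
```

This covers only the first screening round; full-text review, data extraction and risk-of-bias assessment come on top of it.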

How can I know if my topic has already been reviewed?

Before starting out on a systematic review, check to see if someone has done it already. In PubMed you can use the systematic review subset to limit to a broad group of papers that is enriched for systematic reviews. You can invoke the subset by selecting it from the Article Types filters to the left of your PubMed results, or you can append AND systematic[sb] to your search. For example:

"neoadjuvant chemotherapy" AND systematic[sb]

The systematic review subset is very noisy, however. To quickly focus on systematic reviews (knowing that you may be missing some), simply search for the word systematic in the title:

"neoadjuvant chemotherapy" AND systematic[ti]

Any PRISMA-compliant systematic review should be captured by this method, since the PRISMA checklist requires the title to identify the report as a systematic review. Cochrane systematic reviews, however, do not include 'systematic' in the title, so it's worth checking the Cochrane Database of Systematic Reviews independently.
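The same title-field search can also be sent to PubMed programmatically through NCBI's E-utilities `esearch` endpoint. The sketch below only builds the request URL with the Python standard library and makes no network call; the helper names are hypothetical, and NCBI's usage policies (API keys, rate limits) should be checked before querying the live service.

```python
from urllib.parse import urlencode

ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def systematic_review_query(topic: str, title_only: bool = True) -> str:
    """Restrict a topic search to likely systematic reviews.

    title_only=True  -> systematic[ti]  (precise, may miss some reviews)
    title_only=False -> systematic[sb]  (broader, noisier subset)
    """
    tag = "systematic[ti]" if title_only else "systematic[sb]"
    return f"{topic} AND {tag}"


def esearch_url(term: str, retmax: int = 20) -> str:
    """Build an esearch request URL for PubMed that asks for JSON output."""
    params = {"db": "pubmed", "term": term, "retmode": "json", "retmax": retmax}
    return f"{ESEARCH}?{urlencode(params)}"


term = systematic_review_query('"neoadjuvant chemotherapy"')
print(term)  # "neoadjuvant chemotherapy" AND systematic[ti]
print(esearch_url(term))
```

Remember that a title search for "systematic" will still miss Cochrane reviews.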

You can also search for protocols that will indicate that another group has set out on a similar project. Many investigators will register their protocols in PROSPERO, a registry of review protocols. Other published protocols as well as Cochrane Review protocols appear in the Cochrane Methodology Register, a part of the Cochrane Library.


- Last Updated: Sep 25, 2024 2:45 PM

- URL: https://guides.library.harvard.edu/meta-analysis


Study designs: Part 7 – Systematic reviews

Priya Ranganathan, Rakesh Aggarwal.


Address for correspondence: Dr. Priya Ranganathan, Department of Anaesthesiology, Tata Memorial Centre, Homi Bhabha National Institute, Mumbai, Maharashtra, India. E-mail: [email protected]

Received 2020 Apr 4; Accepted 2020 Apr 8; Issue date 2020 Apr-Jun.

This is an open access journal, and articles are distributed under the terms of the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 License, which allows others to remix, tweak, and build upon the work non-commercially, as long as appropriate credit is given and the new creations are licensed under the identical terms.

In this series on research study designs, we have so far looked at different types of primary research designs which attempt to answer a specific question. In this segment, we discuss systematic review, which is a study design used to summarize the results of several primary research studies. Systematic reviews often also use meta-analysis, which is a statistical tool to mathematically collate the results of various research studies to obtain a pooled estimate of treatment effect; this will be discussed in the next article.

Keywords: Research design, review [publication type], systematic review [publication type]

In the previous six articles in this series on study designs, we have looked at different types of primary research study designs which are used to answer research questions. In this article, we describe the systematic review, a type of secondary research design that is used to summarize the results of prior primary research studies. Systematic reviews are considered the highest level of evidence for a particular research question.[ 1 ]

SYSTEMATIC REVIEWS

As defined in the Cochrane Handbook for Systematic Reviews of Interventions , “Systematic reviews seek to collate evidence that fits pre-specified eligibility criteria in order to answer a specific research question. They aim to minimize bias by using explicit, systematic methods documented in advance with a protocol.”[ 2 ]

NARRATIVE VERSUS SYSTEMATIC REVIEWS

Review of available data has been done since time immemorial. However, traditional narrative reviews (“expert reviews”) do not involve a systematic search of the literature. Instead, the author of the review, usually an expert on the subject, uses informal methods to identify (what he or she thinks are) the key studies on the topic. The final review is thus a summary of these “selected” studies. Since studies are chosen at will (haphazardly!) and without clearly defined criteria, such reviews preferentially include those studies that favor the author's views, leading to a potential for subjectivity or selection bias.

In contrast, systematic reviews involve a formal prespecified protocol with explicit, transparent criteria for the inclusion and exclusion of studies, thereby ensuring completeness of coverage of the available evidence and providing a more objective, replicable, and comprehensive overview of it.

META-ANALYSIS

Many systematic reviews use an additional tool, known as meta-analysis, which is a statistical technique for combining the results of multiple studies in a systematic review in a mathematically appropriate way, to create a single (pooled) and more precise estimate of treatment effect. The feasibility of performing a meta-analysis in a systematic review depends on the number of studies included in the final review and the degree of heterogeneity in the inclusion criteria as well as the results between the included studies. Meta-analysis will be discussed in detail in the next article in this series.
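To make the idea of a "single (pooled) and more precise estimate" concrete, here is one common approach, the fixed-effect inverse-variance method, applied to log risk ratios. It is only one of several pooling methods (random-effects models are often preferred when studies are heterogeneous), and the trial counts are invented for illustration.

```python
import math


def log_risk_ratio(events_t, n_t, events_c, n_c):
    """Log risk ratio and its variance for one two-arm study (assumes no zero cells)."""
    y = math.log((events_t / n_t) / (events_c / n_c))
    var = 1 / events_t - 1 / n_t + 1 / events_c - 1 / n_c
    return y, var


def pool_fixed_effect(studies):
    """Fixed-effect inverse-variance pooling of log risk ratios.

    Each study is weighted by 1/variance, so larger, more precise studies count more.
    Returns the pooled risk ratio and its 95% confidence interval.
    """
    weights, effects = [], []
    for study in studies:
        y, var = log_risk_ratio(*study)
        weights.append(1 / var)
        effects.append(y)
    total_w = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_w
    se = math.sqrt(1 / total_w)
    return (math.exp(pooled),
            math.exp(pooled - 1.96 * se),
            math.exp(pooled + 1.96 * se))


# Hypothetical trials: (deaths_treated, n_treated, deaths_control, n_control)
trials = [(10, 100, 15, 100), (20, 200, 30, 200), (5, 50, 8, 50)]
rr, lo, hi = pool_fixed_effect(trials)
print(f"pooled RR {rr:.2f} (95% CI {lo:.2f}-{hi:.2f})")
```

The pooled confidence interval is narrower than any single trial's interval, which is the gain in precision the text describes.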

THE PROCESS OF A SYSTEMATIC REVIEW

The conduct of a systematic review involves several sequential key steps.[ 3 , 4 ] As in other research study designs, a clearly stated research question and a well-written research protocol are essential before commencing a systematic review.

Step 1: Stating the review question

Systematic reviews can be carried out in any field of medical research, e.g. efficacy or safety of interventions, diagnostics, screening or health economics. In this article, we focus on systematic reviews of studies looking at the efficacy of interventions. As with other study designs, the question for a systematic review is best framed using the Population, Intervention, Comparator, and Outcome (PICO) format.

For example, Safi et al. carried out a systematic review on the effect of beta-blockers on the outcomes of patients with myocardial infarction.[ 5 ] In this review, the Population was patients with suspected or confirmed myocardial infarction, the Intervention was beta-blocker therapy, the Comparator was either placebo or no intervention, and the Outcomes were all-cause mortality and major adverse cardiovascular events. The review question was “In patients with suspected or confirmed myocardial infarction, does the use of beta-blockers affect mortality or major adverse cardiovascular outcomes?”

Step 2: Listing the eligibility criteria for studies to be included

It is essential to define explicitly, a priori, the criteria for selecting the studies that will be included in the review. Besides the PICO components, some additional criteria frequently used for this purpose include language of publication (English versus non-English), publication status (published as full paper versus unpublished), study design (randomized versus quasi-experimental), age group (adults versus children), and publication year (e.g. in the last 5 years, or since a particular date). The PICO criteria need not be very specific; e.g. it is possible to include studies that use any drug belonging to the same group. For instance, the systematic review by Safi et al. included all randomized clinical trials that had used any beta-blocker, across a broad range of doses, irrespective of setting, blinding, publication status, publication year, language, or reported outcomes.[ 5 ]
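Writing the eligibility criteria as explicit rules is one way to make them reproducible and to record a reason for each exclusion. The Python sketch below uses a hypothetical record format and a simplified set of criteria loosely modelled on the Safi et al. example (randomized design, any beta-blocker, placebo or no intervention as comparator, no language or year restriction). The field names and the drug list are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Study:
    # Hypothetical record fields; real screening forms will differ.
    study_id: str
    design: str        # e.g. "RCT", "cohort"
    population: str    # e.g. "myocardial infarction"
    intervention: str  # e.g. "metoprolol"
    comparator: str    # e.g. "placebo"
    language: str
    year: int


BETA_BLOCKERS = {"metoprolol", "atenolol", "propranolol", "carvedilol"}  # illustrative subset


def is_eligible(s: Study) -> tuple[bool, str]:
    """Apply the pre-specified criteria; return (eligible, reason for exclusion)."""
    if s.design != "RCT":
        return False, "not randomized"
    if s.population != "myocardial infarction":
        return False, "wrong population"
    if s.intervention not in BETA_BLOCKERS:
        return False, "intervention not a beta-blocker"
    if s.comparator not in {"placebo", "no intervention"}:
        return False, "wrong comparator"
    # No restriction on language or publication year, as in the example review.
    return True, ""


studies = [
    Study("A", "RCT", "myocardial infarction", "metoprolol", "placebo", "English", 1985),
    Study("B", "cohort", "myocardial infarction", "atenolol", "no intervention", "German", 2001),
]
for s in studies:
    print(s.study_id, is_eligible(s))
```

Returning the reason alongside the decision mirrors the practice of assigning a reason for non-selection to each discarded study.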

Step 3: Comprehensive search for studies that meet the eligibility criteria

A thorough literature search is essential to identify all articles related to the research question and to ensure that no relevant article is left out. The search may include one or more electronic databases and trial registries; in addition, it is common to hand-search the cross-references in the articles identified through such searches. One could also plan to reach out to experts in the field to identify unpublished data, and to search the grey literature (theses, conference abstracts, and non-peer-reviewed journals). These sources are particularly helpful when the intervention is relatively new, since data on it may not yet have been published as full papers and hence are unlikely to be found in literature databases. In the review by Safi et al., the search strategy included not only several electronic databases (Cochrane, MEDLINE, EMBASE, LILACS, etc.) but also other resources (e.g. Google Scholar, WHO International Clinical Trial Registry Platform, and reference lists of identified studies).[ 5 ] It is not essential to include all the above databases in one's search. However, it is mandatory to define in advance which of these will be searched.
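Because the same trial is often indexed in several databases, the combined search results usually contain duplicates that must be removed before screening. A minimal Python sketch, assuming each exported record is a dict with optional "doi" and required "title" fields: it matches on a normalized DOI when one is present and otherwise on a normalized title. The records and DOIs are hypothetical, and reference managers use more elaborate matching than this.

```python
import re


def normalize_title(title: str) -> str:
    """Lower-case, drop punctuation and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9\s]", "", title.lower())
    return " ".join(cleaned.split())


def dedup_key(record: dict) -> str:
    """Prefer the DOI; fall back to the normalized title."""
    doi = (record.get("doi") or "").strip().lower()
    return f"doi:{doi}" if doi else f"title:{normalize_title(record['title'])}"


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first occurrence of each record, preserving input order."""
    seen, unique = set(), []
    for rec in records:
        key = dedup_key(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Hypothetical exports from four sources.
records = [
    {"source": "MEDLINE", "doi": "10.1000/xyz123", "title": "Beta-blockers after MI"},
    {"source": "EMBASE", "doi": "10.1000/XYZ123", "title": "Beta blockers after MI."},
    {"source": "LILACS", "doi": None, "title": "Beta-blockers after MI: a trial"},
    {"source": "CENTRAL", "doi": None, "title": "Beta-Blockers After MI: A Trial"},
]
unique = deduplicate(records)
print(len(records), "->", len(unique))  # 4 -> 2
```

One limitation of this simple key: a record with a DOI and its copy without one will not be matched, so the number of removed duplicates should be checked by hand.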

Step 4: Identifying and selecting relevant studies

Once the search strategy defined in the previous step has been run to identify potentially relevant studies, a two-step process is followed. First, the titles and abstracts of the identified studies are processed to exclude any duplicates and to discard obviously irrelevant studies. In the next step, full-text papers of the remaining articles are retrieved and closely reviewed to identify studies that meet the eligibility criteria. To minimize bias, these selection steps are usually performed independently by at least two reviewers, who also assign a reason for non-selection to each discarded study. Any discrepancies are then resolved either by an independent reviewer or by mutual consensus of the original reviewers. In the Cochrane review on beta-blockers referred to above, two review authors independently screened the titles for inclusion, and then, four review authors independently reviewed the screen-positive studies to identify the trials to be included in the final review.[ 5 ] Disagreements were resolved by discussion or by taking the opinion of a separate reviewer. A summary of this selection process, showing the degree of agreement between reviewers, and a flow diagram that depicts the numbers of screened, included and excluded (with reason for exclusion) studies are often included in the final review.
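Agreement between two independent screeners is often summarized with Cohen's kappa, which corrects raw percentage agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The text above does not prescribe a particular statistic; kappa is shown here as one common choice, with hypothetical include/exclude decisions.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters labelling the same records."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of records")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    # Chance agreement: probability both raters pick the same label independently.
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if expected == 1:  # both raters used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical title/abstract decisions for ten records.
a = ["in", "in", "out", "out", "out", "in", "out", "out", "in", "out"]
b = ["in", "out", "out", "out", "out", "in", "out", "in", "in", "out"]
print(round(cohens_kappa(a, b), 2))  # 0.58
```

Here the screeners agree on 8 of 10 records (80%), but because 52% agreement would be expected by chance, kappa is only about 0.58.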

Step 5: Data extraction

In this step, relevant data are extracted from each selected study. This should be done by at least two reviewers independently, and the data then compared to identify any errors in extraction. Standard data extraction forms help make data extraction objective. The data extracted usually include the name of the author, the year of publication, details of the intervention and control treatments, and the number of participants and outcome data in each group. In the review by Safi et al., four review authors independently extracted data and resolved any differences by discussion.[ 5 ]
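Comparing the two independent extraction forms field by field is easy to automate. The sketch below assumes each reviewer's form is a Python dict with the same (hypothetical) field names; any field that differs is returned for resolution by discussion.

```python
def extraction_discrepancies(form_a: dict, form_b: dict) -> dict:
    """Return {field: (value_a, value_b)} for every field where the two forms differ.

    A field present in only one form is reported with None for the missing side.
    """
    fields = form_a.keys() | form_b.keys()
    return {
        f: (form_a.get(f), form_b.get(f))
        for f in sorted(fields)
        if form_a.get(f) != form_b.get(f)
    }


# Hypothetical extraction forms for one trial.
reviewer_1 = {"author": "Smith", "year": 1999, "n_treated": 120, "deaths_treated": 9}
reviewer_2 = {"author": "Smith", "year": 1999, "n_treated": 210, "deaths_treated": 9}
print(extraction_discrepancies(reviewer_1, reviewer_2))
# {'n_treated': (120, 210)}
```

A transposed digit like this one would otherwise flow straight into the pooled analysis.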

Handling missing data

Some of the studies included in the review may not report outcomes in accordance with the review methodology. Such missing data can be handled in two ways – by contacting authors of the original study to obtain the necessary data and by using data imputation techniques. Safi et al. used both these approaches – they tried to get data from the trial authors; however, where that failed, they analyzed the primary outcome (mortality) using the best case (i.e. presuming that all the participants in the experimental arm with missing data had survived and those in the control arm with missing mortality data had died – representing the maximum beneficial effect of the intervention) and the worst case (all the participants with missing data in the experimental arm assumed to have died and those in the control arm to have survived – representing the least beneficial effect of the intervention) scenarios.
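The best-case and worst-case scenarios can be made concrete for one trial's risk ratio for death. The helper below is hypothetical and the counts are invented; it illustrates the imputation logic described above, not the actual analysis in Safi et al.

```python
def risk_ratio(deaths_t, n_t, deaths_c, n_c):
    """Risk of death in the treatment arm divided by risk in the control arm."""
    return (deaths_t / n_t) / (deaths_c / n_c)


def best_worst_case(deaths_t, followed_t, missing_t, deaths_c, followed_c, missing_c):
    """Risk ratio (treatment vs control) under the two extreme imputations.

    best case:  missing treated participants survived, missing controls died
    worst case: missing treated participants died, missing controls survived
    """
    n_t = followed_t + missing_t
    n_c = followed_c + missing_c
    best = risk_ratio(deaths_t, n_t, deaths_c + missing_c, n_c)
    worst = risk_ratio(deaths_t + missing_t, n_t, deaths_c, n_c)
    return best, worst


# Hypothetical trial: 95 of 100 treated and 90 of 100 controls followed up.
best, worst = best_worst_case(deaths_t=10, followed_t=95, missing_t=5,
                              deaths_c=15, followed_c=90, missing_c=10)
print(f"best case RR {best:.2f}, worst case RR {worst:.2f}")
# best case RR 0.40, worst case RR 1.00
```

When the two extremes lead to the same conclusion, the missing data are unlikely to change it; in this invented example they do not (a large benefit versus no effect), so the result would have to be interpreted with caution.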

Evaluating the quality (or risk of bias) in the included studies

The overall quality of a systematic review depends on the quality of each of the included studies. The quality of a study is inversely related to the potential for bias in its design. In our previous articles on interventional study design in this series, we discussed various methods to reduce bias – such as randomization, allocation concealment, participant and assessor blinding, using objective endpoints, minimizing missing data, the use of intention-to-treat analysis, and complete reporting of all outcomes.[ 6 , 7 ] These features form the basis of the Cochrane Risk of Bias Tool (RoB 2), which is a commonly used instrument to assess the risk of bias in the studies included in a systematic review.[ 8 ] Based on this tool, one can classify each study in a review as having low risk of bias, having some concerns regarding bias, or at high risk of bias. Safi et al. used this tool to classify the included studies as having low or high risk of bias and presented these data in both tabular and graphical formats.[ 5 ]

In some reviews, the authors decide to summarize only studies with a low risk of bias and to exclude those with a high risk of bias. Alternatively, some authors undertake a separate analysis of studies with low risk of bias, besides an analysis of all the studies taken together. The conclusions from such analyses of only high-quality studies may be more robust.
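RoB 2 builds the overall judgement for a trial from judgements on five domains. A simplified Python sketch of that rule, assuming domain judgements have already been made: low if every domain is low, high if any domain is high, and some concerns otherwise. RoB 2 also lets reviewers judge a trial as high risk when several domains raise some concerns; that is a reviewer judgement, represented here only by a hypothetical flag.

```python
from enum import IntEnum


class RoB(IntEnum):
    LOW = 0
    SOME_CONCERNS = 1
    HIGH = 2


# The five RoB 2 domains for randomized trials.
DOMAINS = (
    "randomization process",
    "deviations from intended interventions",
    "missing outcome data",
    "measurement of the outcome",
    "selection of the reported result",
)


def overall_rob(judgements: dict, escalate_multiple_concerns: bool = False) -> RoB:
    """Simplified overall RoB 2 judgement for one trial (or one outcome).

    The overall level is the worst domain level; the optional flag turns
    'some concerns' in more than one domain into 'high'.
    """
    missing = set(DOMAINS) - set(judgements)
    if missing:
        raise ValueError(f"missing domain judgements: {sorted(missing)}")
    worst = max(judgements[d] for d in DOMAINS)
    if worst == RoB.SOME_CONCERNS and escalate_multiple_concerns:
        n_concerns = sum(judgements[d] == RoB.SOME_CONCERNS for d in DOMAINS)
        if n_concerns > 1:
            return RoB.HIGH
    return worst


trial = {d: RoB.LOW for d in DOMAINS}
trial["missing outcome data"] = RoB.SOME_CONCERNS
print(overall_rob(trial).name)  # SOME_CONCERNS
```

The resulting study-level labels are what a low-risk-of-bias-only analysis would filter on.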

Step 6: Synthesis of results

The data extracted from various studies are pooled quantitatively (known as a meta-analysis) or qualitatively (if pooling of results is not considered feasible). For qualitative reviews, data are usually presented in tabular format, showing the characteristics of each included study, to allow for easier interpretation.

Sensitivity analyses

Sensitivity analyses are used to test the robustness of the results of a systematic review by examining the impact of excluding or including studies with certain characteristics. As referred to above, this can be based on the risk of bias (methodological quality), studies with a specific study design, studies with a certain dosage or schedule, or sample size. If results of these different analyses are more-or-less the same, one can be more certain of the validity of the findings of the review. Furthermore, such analyses can help identify whether the effect of the intervention could vary across different levels of another factor. In the beta-blocker review, sensitivity analysis was performed depending on the risk of bias of included studies.[ 5 ]
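A sensitivity analysis amounts to re-running the pooled analysis on a subset of studies and comparing the results. The sketch below, with invented trials T1 to T3, re-pools log risk ratios with a fixed-effect inverse-variance method, first restricted to trials at low risk of bias and then leaving out one trial at a time (a leave-one-out variant, added here as a common companion analysis). It is an illustration under these assumptions, not the beta-blocker review's analysis.

```python
import math


def pooled_rr(studies):
    """Fixed-effect inverse-variance pooled risk ratio from (log_rr, variance) pairs."""
    weights = [1 / var for _, var in studies]
    pooled = sum(w * y for w, (y, _) in zip(weights, studies)) / sum(weights)
    return math.exp(pooled)


# Hypothetical trials: name -> (log risk ratio, variance, risk of bias)
trials = {
    "T1": (math.log(0.70), 0.04, "low"),
    "T2": (math.log(0.85), 0.02, "low"),
    "T3": (math.log(0.40), 0.09, "high"),
}

all_trials = pooled_rr([(y, v) for y, v, _ in trials.values()])
low_only = pooled_rr([(y, v) for y, v, rob in trials.values() if rob == "low"])
print(f"all trials RR {all_trials:.2f}; low risk of bias only RR {low_only:.2f}")

# Leave-one-out: drop each trial in turn and re-pool the remainder.
for name in trials:
    rest = [(y, v) for other, (y, v, _) in trials.items() if other != name]
    print(f"without {name}: RR {pooled_rr(rest):.2f}")
```

In this invented example the apparent benefit shrinks when the high-risk trial is excluded, which is exactly the kind of signal a sensitivity analysis is meant to expose.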

IMPORTANT RESOURCES FOR CARRYING OUT SYSTEMATIC REVIEWS AND META-ANALYSES

Cochrane is an organization that works to produce good-quality, updated systematic reviews related to human healthcare and policy, which are accessible to people across the world.[ 9 ] There are more than 7000 Cochrane reviews on various topics. One of its main resources is the Cochrane Library (available at https://www.cochranelibrary.com/ ), which incorporates several databases with different types of high-quality evidence to inform healthcare decisions, including the Cochrane Database of Systematic Reviews, Cochrane Central Register of Controlled Trials (CENTRAL), and Cochrane Clinical Answers.

The Cochrane Handbook for Systematic Reviews of Interventions

The Cochrane handbook is an official guide, prepared by the Cochrane Collaboration, to the process of preparing and maintaining Cochrane systematic reviews.[ 10 ]

Review Manager software

Review Manager (RevMan) is software developed by Cochrane to support the preparation and maintenance of systematic reviews, including tools for performing meta-analysis.[ 11 ] It is freely available in both online (RevMan Web) and offline (RevMan 5.3) versions.

Preferred Reporting Items for Systematic Reviews and Meta-Analyses statement

The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement is an evidence-based minimum set of items for reporting of systematic reviews and meta-analyses of randomized trials.[ 12 ] It can be used both by authors of such studies to improve the completeness of reporting and by reviewers and readers to critically appraise a systematic review. There are several extensions to the PRISMA statement for specific types of reviews. An update is currently underway.

Meta-analysis of Observational Studies in Epidemiology statement

The Meta-analysis of Observational Studies in Epidemiology statement summarizes the recommendations for reporting of meta-analyses in epidemiology.[ 13 ]

PROSPERO is an international database for prospective registration of protocols for systematic reviews in healthcare.[ 14 ] It aims to avoid duplication of and to improve transparency in reporting of results of such reviews.

Financial support and sponsorship

Conflicts of interest

There are no conflicts of interest.

- 1. Centre for Evidence Based Medicine. [Last accessed on 2020 Apr 01]. Available from: http://www.cebm.net.

- 2. Chandler J, Cumpston M, Thomas J, Higgins JP, Deeks JJ, Clarke MJ. Chapter 1: Introduction. In: Higgins JP, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, et al., editors. Cochrane Handbook for Systematic Reviews of Interventions. Version 6.0 (updated August 2019). Cochrane; 2019. Available from: http://www.training.cochrane.org/handbook.

- 3. Impellizzeri FM, Bizzini M. Systematic review and meta-analysis: A primer. Int J Sports Phys Ther. 2012;7:493–503.

- 4. Akobeng AK. Understanding systematic reviews and meta-analysis. Arch Dis Child. 2005;90:845–8. doi: 10.1136/adc.2004.058230.

- 5. Safi S, Sethi NJ, Nielsen EE, Feinberg J, Jakobsen JC, Gluud C. Beta-blockers for suspected or diagnosed acute myocardial infarction. Cochrane Database Syst Rev. 2019;12:CD012484. doi: 10.1002/14651858.CD012484.pub2.

- 6. Aggarwal R, Ranganathan P. Study designs: Part 5 – Interventional studies (II). Perspect Clin Res. 2019;10:183–6. doi: 10.4103/picr.PICR_138_19.

- 7. Ranganathan P, Aggarwal R. Study designs: Part 6 – Interventional studies (III). Perspect Clin Res. 2020;11:47–50. doi: 10.4103/picr.PICR_209_19.

- 8. RoB 2: A Revised Cochrane Risk-Of-Bias Tool for Randomized Trials. [Last accessed on 2020 Apr 01]. Available from: https://methods.cochrane.org/bias/resources/rob-2-revised-cochrane-risk-bias-tool-randomized-trials.

- 9. Cochrane. [Last accessed on 2020 Apr 03]. Available from: https://work.cochrane.org/organisation-known-cochrane.

- 10. The Cochrane Handbook for Systematic Reviews of Interventions. [Last accessed on 2020 Apr 01]. Available from: https://cochrane-handbook.org.

- 11. Cochrane RevMan. [Last accessed on 2020 Apr 01]. Available from: https://training.cochrane.org/online-learning/core-software-cochrane-reviews/revman.

- 12. PRISMA Statement. [Last accessed on 2020 Apr 01]. Available from: http://www.prisma-statement.org/.

- 13. Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, Rennie D, et al. Meta-analysis of observational studies in epidemiology: A proposal for reporting. Meta-analysis of Observational Studies in Epidemiology (MOOSE) group. JAMA. 2000;283:2008–12. doi: 10.1001/jama.283.15.2008.

- 14. PROSPERO: International Prospective Register of Systematic Reviews. [Last accessed on 2020 Apr 01]. Available from: https://www.crd.york.ac.uk/PROSPERO/.


Easy guide to conducting a systematic review

Affiliations.

- 1 Discipline of Child and Adolescent Health, University of Sydney, Sydney, New South Wales, Australia.

- 2 Department of Nephrology, The Children's Hospital at Westmead, Sydney, New South Wales, Australia.

- 3 Education Department, The Children's Hospital at Westmead, Sydney, New South Wales, Australia.

- PMID: 32364273

- DOI: 10.1111/jpc.14853

A systematic review is a type of study that synthesises research conducted on a particular topic. Systematic reviews are considered to provide the highest level of evidence on the evidence pyramid. They follow a rigorous research methodology: to minimise bias, systematic reviews use a predefined search strategy to identify and appraise all available published literature on a specific topic. This meticulous methodology differentiates a systematic review from a narrative review (literature review or authoritative review). This paper provides a brief step-by-step summary of how to conduct a systematic review, which may be of interest to clinicians and researchers.

Keywords: research; research design; systematic review.

© 2020 Paediatrics and Child Health Division (The Royal Australasian College of Physicians).

Publication types

- Systematic Review

- Research Design*


Systematic Review | Definition, Examples & Guide

Published on 15 June 2022 by Shaun Turney . Revised on 18 July 2024.

A systematic review is a type of review that uses repeatable methods to find, select, and synthesise all available evidence. It answers a clearly formulated research question and explicitly states the methods used to arrive at the answer.

For example, the authors of one systematic review answered the question ‘What is the effectiveness of probiotics in reducing eczema symptoms and improving quality of life in patients with eczema?’

In this context, a probiotic is a health product that contains live microorganisms and is taken by mouth. Eczema is a common skin condition that causes red, itchy skin.


What is a systematic review?

A review is an overview of the research that’s already been completed on a topic.

What makes a systematic review different from other types of reviews is that the research methods are designed to reduce research bias. The methods are repeatable, and the approach is formal and systematic:

- Formulate a research question

- Develop a protocol

- Search for all relevant studies

- Apply the selection criteria

- Extract the data

- Synthesise the data

- Write and publish a report

Although multiple sets of guidelines exist, the Cochrane Handbook for Systematic Reviews of Interventions is among the most widely used. It provides detailed guidance on how to complete each step of the systematic review process.

Systematic reviews are most commonly used in medical and public health research, but they can also be found in other disciplines.

Systematic reviews typically answer their research question by synthesising all available evidence and evaluating the quality of the evidence. Synthesising means bringing together different information to tell a single, cohesive story. The synthesis can be narrative (qualitative), quantitative, or both.


Systematic review vs meta-analysis

Systematic reviews often synthesise the evidence quantitatively using a meta-analysis. A meta-analysis is a statistical technique, not a type of review: it combines the results of two or more studies, usually to estimate an overall effect size.
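To make "combining the results of two or more studies" concrete, here is a minimal sketch of one common pooling method, the inverse-variance fixed-effect model. Each study's effect estimate y_i is weighted by w_i = 1/SE_i², and the pooled estimate is Σ w_i y_i / Σ w_i with standard error √(1/Σ w_i). The function name and the three study results below are hypothetical, and many reviews use a random-effects model instead when studies differ substantially.

```python
import math

def fixed_effect_pool(effects, std_errors):
    """Inverse-variance fixed-effect pooling (illustrative sketch).

    effects: per-study effect sizes on an additive scale
             (e.g. mean differences or log risk ratios).
    std_errors: the standard error of each effect size.
    Returns (pooled estimate, pooled SE, 95% CI lower, 95% CI upper).
    """
    if len(effects) != len(std_errors) or len(effects) < 2:
        raise ValueError("need matching effects and standard errors for 2+ studies")
    weights = [1.0 / se ** 2 for se in std_errors]  # weight = 1 / variance
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    se_pooled = math.sqrt(1.0 / total)
    z = 1.96  # standard normal quantile for a two-sided 95% interval
    return pooled, se_pooled, pooled - z * se_pooled, pooled + z * se_pooled

# Hypothetical mean differences and standard errors from three studies (not real data)
pooled, se, lo, hi = fixed_effect_pool([-0.5, -0.2, -0.4], [0.2, 0.1, 0.25])
print(f"pooled = {pooled:.3f} (95% CI {lo:.3f} to {hi:.3f})")
```

The most precise study (smallest standard error) dominates the pooled estimate, which is the intended behaviour of inverse-variance weighting.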

Systematic review vs literature review

A literature review is a type of review that uses a less systematic and formal approach than a systematic review. Typically, an expert in a topic will qualitatively summarise and evaluate previous work, without using a formal, explicit method.

Although literature reviews are often less time-consuming and can be insightful or helpful, they have a higher risk of bias and are less transparent than systematic reviews.

Systematic review vs scoping review

Like a systematic review, a scoping review is a type of review that tries to minimise bias by using transparent and repeatable methods.

However, a scoping review isn’t a type of systematic review. The most important difference is the goal: rather than answering a specific question, a scoping review explores a topic. The researcher tries to identify the main concepts, theories, and evidence, as well as gaps in the current research.

Sometimes scoping reviews are an exploratory preparation step for a systematic review, and sometimes they are a standalone project.

When to conduct a systematic review

A systematic review is a good choice of review if you want to answer a question about the effectiveness of an intervention, such as a medical treatment.

To conduct a systematic review, you’ll need the following:

- A precise question, usually about the effectiveness of an intervention. The question needs to be about a topic that has previously been studied by multiple researchers. If there’s no previous research, there’s nothing to review.

- If you’re doing a systematic review on your own (e.g., for a research paper or thesis), you should take appropriate measures to ensure the validity and reliability of your research.

- Access to databases and journal archives. Often, your educational institution provides you with access.

- Time. A professional systematic review is a time-consuming process: it will take the lead author about six months of full-time work. If you’re a student, you should narrow the scope of your systematic review and stick to a tight schedule.

- Bibliographic, word-processing, spreadsheet, and statistical software. For example, you could use EndNote, Microsoft Word, Excel, and SPSS.

Pros and cons of systematic reviews

A systematic review has many pros.

- They minimise research bias by considering all available evidence and evaluating each study for bias.

- Their methods are transparent, so they can be scrutinised by others.

- They’re thorough: they summarise all available evidence.

- They can be replicated and updated by others.

Systematic reviews also have a few cons.

- They’re time-consuming.

- They’re narrow in scope: they only answer the precise research question.

Step-by-step example of a systematic review

The seven steps for conducting a systematic review are explained below, using the probiotics-for-eczema review as a running example.

Step 1: Formulate a research question

Formulating the research question is probably the most important step of a systematic review. A clear research question will:

- Allow you to more effectively communicate your research to other researchers and practitioners

- Guide your decisions as you plan and conduct your systematic review

A good research question for a systematic review has four components, which you can remember with the acronym PICO:

- Population(s) or problem(s)

- Intervention(s)

- Comparison(s)

- Outcome(s)

You can rearrange these four components to write your research question:

- What is the effectiveness of I versus C for O in P?

Sometimes, you may want to include a fifth component, the type of study design. In this case, the acronym is PICOT.

- Type of study design(s)

In the probiotics-for-eczema example, the research question had the following components:

- The population of patients with eczema

- The intervention of probiotics

- In comparison to no treatment, placebo, or non-probiotic treatment

- The outcome of changes in participant-, parent-, and doctor-rated symptoms of eczema and quality of life

- Randomised controlled trials, a type of study design

Their research question was:

- What is the effectiveness of probiotics versus no treatment, a placebo, or a non-probiotic treatment for reducing eczema symptoms and improving quality of life in patients with eczema?
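The PICO template can also be written down as a small data structure, which keeps each component explicit when you later draft the protocol and selection criteria. This is a minimal sketch: the `PicoQuestion` class and its field names are hypothetical, and the study-design field is stored but not used in the question template, mirroring the "What is the effectiveness of I versus C for O in P?" pattern.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PicoQuestion:
    """Hypothetical container for the components of a PICO(T) question."""
    population: str
    intervention: str
    comparison: str
    outcome: str
    study_design: Optional[str] = None  # optional "T": type of study design

    def as_question(self) -> str:
        # Fill the template: What is the effectiveness of I versus C for O in P?
        return (f"What is the effectiveness of {self.intervention} versus "
                f"{self.comparison} for {self.outcome} in {self.population}?")

probiotics = PicoQuestion(
    population="patients with eczema",
    intervention="probiotics",
    comparison="no treatment, a placebo, or a non-probiotic treatment",
    outcome="reducing eczema symptoms and improving quality of life",
    study_design="randomised controlled trials",
)
print(probiotics.as_question())
```

With these components, the template reproduces the example research question word for word.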

Step 2: Develop a protocol

A protocol is a document that contains your research plan for the systematic review. This is an important step because having a plan allows you to work more efficiently and reduces bias.

Your protocol should include the following components:

- Background information: Provide the context of the research question, including why it’s important.

- Research objective(s): Rephrase your research question as an objective.

- Selection criteria: State how you’ll decide which studies to include or exclude from your review.

- Search strategy: Discuss your plan for finding studies.

- Analysis: Explain what information you’ll collect from the studies and how you’ll synthesise the data.
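The protocol sections listed above can be kept as a simple structured record, which makes it easy to check that no section is left empty before you register the protocol. This is a minimal sketch; the `ReviewProtocol` class, its field names, and the placeholder text are hypothetical and not part of any registry's submission format.

```python
from dataclasses import dataclass, fields

@dataclass
class ReviewProtocol:
    """Hypothetical record of the five protocol sections listed above."""
    background: str = ""
    objectives: str = ""
    selection_criteria: str = ""
    search_strategy: str = ""
    analysis: str = ""

    def missing_sections(self) -> list[str]:
        # A section counts as missing if it is empty or contains only whitespace.
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

# Placeholder text only; a real protocol would contain the full plan.
draft = ReviewProtocol(
    background="(context of the question and why it matters)",
    objectives="(research question rephrased as an objective)",
    selection_criteria="(inclusion and exclusion criteria)",
)
print(draft.missing_sections())  # ['search_strategy', 'analysis']
```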

If you’re a professional seeking to publish your review, it’s a good idea to bring together an advisory committee. This is a group of about six people who have experience in the topic you’re researching. They can help you make decisions about your protocol.

It’s highly recommended to register your protocol. Registering your protocol means submitting it to a registry of systematic review protocols, such as PROSPERO.

Step 3: Search for all relevant studies

Searching for relevant studies is the most time-consuming step of a systematic review.

To reduce bias, it’s important to search for relevant studies very thoroughly. Your strategy will depend on your field and your research question, but sources generally fall into these four categories:

- Databases: Search multiple databases of peer-reviewed literature, such as PubMed or Scopus. Think carefully about how to phrase your search terms and include multiple synonyms of each word. Use Boolean operators if relevant.

- Handsearching: In addition to searching the primary sources using databases, you’ll also need to search manually. One strategy is to scan relevant journals or conference proceedings. Another strategy is to scan the reference lists of relevant studies.

- Grey literature: Grey literature includes documents produced by governments, universities, and other institutions that aren’t published by traditional publishers. Graduate student theses are an important type of grey literature, which you can search using the Networked Digital Library of Theses and Dissertations (NDLTD). In medicine, clinical trial registries are another important type of grey literature.

- Experts: Contact experts in the field to ask if they have unpublished studies that should be included in your review.
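A common way to combine synonyms with Boolean operators is to join the synonyms for one concept with OR and then join the concepts with AND. The sketch below builds such a query string, assuming a generic syntax with double-quoted phrases and `*` truncation; each database (PubMed, Scopus, and so on) has its own field tags and truncation rules, so treat the output as a starting point to adapt. The `build_query` helper and the synonym lists are illustrative only.

```python
def build_query(concepts):
    """Join synonyms within each concept with OR, and the concepts with AND."""
    groups = []
    for synonyms in concepts:
        # Quote multi-word terms so they are searched as exact phrases.
        terms = [f'"{t}"' if " " in t else t for t in synonyms]
        groups.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(groups)

# Illustrative synonym lists for the probiotics-for-eczema question
query = build_query([
    ["eczema", "atopic dermatitis"],
    ["probiotic*", "lactobacillus", "bifidobacterium"],
])
print(query)
# (eczema OR "atopic dermatitis") AND (probiotic* OR lactobacillus OR bifidobacterium)
```

Keeping each concept as its own OR group makes it easy to add a synonym later without disturbing the rest of the search.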

At this stage of your review, you won’t read the articles yet. Simply save any potentially relevant citations using bibliographic software, such as EndNote.

In the probiotics example, the review authors searched the following sources:

- Databases: EMBASE, PsycINFO, AMED, LILACS, and ISI Web of Science

- Handsearch: Conference proceedings and reference lists of articles

- Grey literature: The Cochrane Library, the metaRegister of Controlled Trials, and the Ongoing Skin Trials Register

- Experts: Authors of unpublished registered trials, pharmaceutical companies, and manufacturers of probiotics

Step 4: Apply the selection criteria

Applying the selection criteria is a three-person job. Two of you will independently read the studies and decide which to include in your review based on the selection criteria you established in your protocol. The third person’s job is to break any ties.
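The screening workflow described here (two independent reviewers, with a third who decides only when they disagree) can be sketched as follows. The function name, study IDs, and decisions are hypothetical, and the sketch assumes both reviewers have screened exactly the same set of studies.

```python
def resolve_screening(reviewer_a, reviewer_b, tie_breaker):
    """Combine two independent include/exclude decisions per study.

    reviewer_a, reviewer_b: dicts mapping study ID -> True (include) / False (exclude);
                            assumed to cover the same study IDs.
    tie_breaker: callable(study_id) -> bool, consulted only when A and B disagree.
    Returns (final decisions, list of disputed study IDs).
    """
    final, disputed = {}, []
    for study_id, a in reviewer_a.items():
        b = reviewer_b[study_id]
        if a == b:
            final[study_id] = a  # agreement: accept the shared decision
        else:
            disputed.append(study_id)
            final[study_id] = tie_breaker(study_id)  # third reviewer decides
    return final, disputed

# Hypothetical decisions (True = include)
a = {"S1": True, "S2": False, "S3": True, "S4": False}
b = {"S1": True, "S2": True, "S3": False, "S4": False}
third = {"S2": False, "S3": True}  # hypothetical third-reviewer rulings
final, disputed = resolve_screening(a, b, third.__getitem__)
print(disputed)  # ['S2', 'S3']
print(sorted(k for k, v in final.items() if v))  # ['S1', 'S3']
```

Recording which studies were disputed, not just the final decisions, also provides the raw data for reporting agreement between the two reviewers.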

To increase inter-rater reliability, ensure that everyone thoroughly understands the selection criteria before you begin.
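The text does not name a particular statistic, but inter-rater reliability for include/exclude decisions is commonly summarised with Cohen's kappa, which corrects the observed agreement p_o for the agreement p_e expected by chance: κ = (p_o − p_e) / (1 − p_e). A minimal sketch follows; the decisions are hypothetical, and returning 1.0 when p_e equals 1 (kappa is formally undefined there) is a choice made for this example.

```python
def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two equal-length, non-empty rating lists")
    n = len(ratings_a)
    categories = set(ratings_a) | set(ratings_b)
    # Observed agreement: share of items where both raters chose the same category.
    p_o = sum(x == y for x, y in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    p_e = sum((ratings_a.count(c) / n) * (ratings_b.count(c) / n) for c in categories)
    if p_e == 1:
        # Both raters used one identical category for every item; kappa is
        # undefined here, so this sketch reports perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)

# Hypothetical screening decisions for ten studies
a = ["inc", "exc", "exc", "inc", "exc", "exc", "inc", "exc", "exc", "exc"]
b = ["inc", "exc", "inc", "inc", "exc", "exc", "exc", "exc", "exc", "exc"]
print(round(cohens_kappa(a, b), 3))  # 0.524
```

Here the reviewers agree on 8 of 10 studies, but because most studies are excluded, much of that agreement is expected by chance, so kappa is considerably lower than 0.8. scikit-learn's `sklearn.metrics.cohen_kappa_score` offers the same calculation if you prefer a library function.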